Python 3 HTML Parser

html.parser — Simple HTML and XHTML parser — Python …

Source code: Lib/html/

This module defines a class HTMLParser which serves as the basis for parsing text files formatted in HTML (HyperText Mark-up Language) and XHTML.

class html.parser.HTMLParser(*, convert_charrefs=True)

Create a parser instance able to parse invalid markup.

If convert_charrefs is True (the default), all character references (except the ones in script/style elements) are automatically converted to the corresponding Unicode characters.
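A minimal sketch of what convert_charrefs changes, assuming a small recording subclass (EventRecorder and record() are hypothetical names used only here). With the default True, the reference arrives already decoded inside handle_data(); with False, handle_entityref() is called with the bare entity name instead.

```python
from html.parser import HTMLParser


class EventRecorder(HTMLParser):
    """Hypothetical helper: records (event, value) pairs instead of printing."""

    def __init__(self, **kwargs):
        super().__init__(**kwargs)
        self.events = []

    def handle_data(self, data):
        self.events.append(("data", data))

    def handle_entityref(self, name):
        # Only reached when convert_charrefs=False
        self.events.append(("entityref", name))


def record(text, convert_charrefs):
    parser = EventRecorder(convert_charrefs=convert_charrefs)
    parser.feed(text)
    parser.close()  # flush any buffered text
    return parser.events


converted = record("a &gt; b", convert_charrefs=True)
raw = record("a &gt; b", convert_charrefs=False)
print(converted)
print(raw)
```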

An HTMLParser instance is fed HTML data and calls handler methods when start tags, end tags, text, comments, and other markup elements are encountered. The user should subclass HTMLParser and override its methods to implement the desired behavior.

This parser does not check that end tags match start tags or call the end-tag handler for elements which are closed implicitly by closing an outer element.
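To illustrate that no matching is done, this sketch (TagLogger is a hypothetical helper) feeds markup where `<b>` is never closed and a stray `</i>` appears; the parser simply reports what it sees.

```python
from html.parser import HTMLParser


class TagLogger(HTMLParser):
    """Hypothetical helper that records start and end tags in order."""

    def __init__(self):
        super().__init__()
        self.log = []

    def handle_starttag(self, tag, attrs):
        self.log.append(("start", tag))

    def handle_endtag(self, tag):
        self.log.append(("end", tag))


logger = TagLogger()
# <b> is closed only implicitly by </p>; </i> has no matching start tag
logger.feed("<p><b>bold text</p></i>")
logger.close()
print(logger.log)
# [('start', 'p'), ('start', 'b'), ('end', 'p'), ('end', 'i')]
```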

Changed in version 3.4: convert_charrefs keyword argument added.

Changed in version 3.5: The default value for argument convert_charrefs is now True.

Example HTML Parser Application

As a basic example, below is a simple HTML parser that uses the HTMLParser class to print out start tags, end tags, and data as they are encountered:

```python
from html.parser import HTMLParser

class MyHTMLParser(HTMLParser):
    def handle_starttag(self, tag, attrs):
        print("Encountered a start tag:", tag)

    def handle_endtag(self, tag):
        print("Encountered an end tag:", tag)

    def handle_data(self, data):
        print("Encountered some data:", data)

parser = MyHTMLParser()
parser.feed('<html><head><title>Test</title></head>'
            '<body><h1>Parse me!</h1></body></html>')
```

The output will then be:

```
Encountered a start tag: html
Encountered a start tag: head
Encountered a start tag: title
Encountered some data: Test
Encountered an end tag: title
Encountered an end tag: head
Encountered a start tag: body
Encountered a start tag: h1
Encountered some data: Parse me!
Encountered an end tag: h1
Encountered an end tag: body
Encountered an end tag: html
```

HTMLParser Methods

HTMLParser instances have the following methods:

HTMLParser.feed(data)

Feed some text to the parser. It is processed insofar as it consists of complete elements; incomplete data is buffered until more data is fed or close() is called. data must be str.
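A short sketch of the buffering described above, assuming a recording subclass (EventRecorder is hypothetical): an incomplete start tag produces no events until the rest of it arrives.

```python
from html.parser import HTMLParser


class EventRecorder(HTMLParser):
    """Hypothetical helper: records handler calls as (event, value) pairs."""

    def __init__(self):
        super().__init__()
        self.events = []

    def handle_starttag(self, tag, attrs):
        self.events.append(("start", tag))

    def handle_endtag(self, tag):
        self.events.append(("end", tag))

    def handle_data(self, data):
        self.events.append(("data", data))


parser = EventRecorder()
parser.feed("<p")           # incomplete start tag: buffered, no handler runs yet
after_first = list(parser.events)
parser.feed(">hi</p>")      # the rest of the element arrives
parser.close()
print(after_first)          # []
print(parser.events)        # [('start', 'p'), ('data', 'hi'), ('end', 'p')]
```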

HTMLParser.close()

Force processing of all buffered data as if it were followed by an end-of-file mark. This method may be redefined by a derived class to define additional processing at the end of the input, but the redefined version should always call the HTMLParser base class method close().
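One way to follow that rule, sketched with a hypothetical TextCollector subclass: the override calls the base close() first, so any buffered text reaches handle_data(), and only then does its own end-of-input work.

```python
from html.parser import HTMLParser


class TextCollector(HTMLParser):
    """Hypothetical subclass that joins all text once input is finished."""

    def __init__(self):
        super().__init__()
        self.chunks = []
        self.text = None

    def handle_data(self, data):
        self.chunks.append(data)

    def close(self):
        # Always call the base class close() first so buffered data is processed
        super().close()
        # Additional end-of-input processing
        self.text = "".join(self.chunks)


collector = TextCollector()
collector.feed("<p>Hello, <b>world</b>!</p>")
collector.close()
print(collector.text)  # Hello, world!
```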

HTMLParser.reset()

Reset the instance. Loses all unprocessed data. This is called implicitly at instantiation time.

HTMLParser.getpos()

Return current line number and offset.
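getpos() is most useful from inside a handler, where it reports where the current markup begins. A minimal sketch (PositionRecorder is a hypothetical name); line numbers start at 1 and offsets at 0:

```python
from html.parser import HTMLParser


class PositionRecorder(HTMLParser):
    """Hypothetical helper: maps each start tag to getpos() at that moment."""

    def __init__(self):
        super().__init__()
        self.positions = {}

    def handle_starttag(self, tag, attrs):
        # getpos() returns (line number, offset within that line)
        self.positions[tag] = self.getpos()


recorder = PositionRecorder()
recorder.feed("<html>\n  <body>\n    <p>text</p>\n  </body>\n</html>")
recorder.close()
print(recorder.positions)  # {'html': (1, 0), 'body': (2, 2), 'p': (3, 4)}
```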

HTMLParser.get_starttag_text()

Return the text of the most recently opened start tag. This should not normally be needed for structured processing, but may be useful in dealing with HTML "as deployed" or for re-generating input with minimal changes (whitespace between attributes can be preserved, etc.).
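A sketch contrasting the normalised tag name with the raw text returned by get_starttag_text(); RawTagRecorder and the sample tag are illustrative:

```python
from html.parser import HTMLParser


class RawTagRecorder(HTMLParser):
    """Hypothetical helper: keeps the raw source text of every start tag."""

    def __init__(self):
        super().__init__()
        self.raw_tags = []

    def handle_starttag(self, tag, attrs):
        # tag is normalised to lower case, but the raw text keeps case and spacing
        self.raw_tags.append((tag, self.get_starttag_text()))


recorder = RawTagRecorder()
recorder.feed('<A  HREF="page.html"   Class=nav>link</A>')
recorder.close()
print(recorder.raw_tags)
# [('a', '<A  HREF="page.html"   Class=nav>')]
```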

The following methods are called when data or markup elements are encountered and they are meant to be overridden in a subclass. The base class implementations do nothing (except for handle_startendtag()):
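Because the inherited handle_startendtag() calls handle_starttag() and then handle_endtag(), a subclass that overrides only those two still sees XHTML-style empty-element tags. A minimal sketch, with TagLogger as a hypothetical helper:

```python
from html.parser import HTMLParser


class TagLogger(HTMLParser):
    """Hypothetical helper; handle_startendtag() is deliberately NOT overridden."""

    def __init__(self):
        super().__init__()
        self.log = []

    def handle_starttag(self, tag, attrs):
        self.log.append(("start", tag))

    def handle_endtag(self, tag):
        self.log.append(("end", tag))


logger = TagLogger()
# The inherited handle_startendtag() forwards <br/> to both handlers above
logger.feed("<br/>")
logger.close()
print(logger.log)  # [('start', 'br'), ('end', 'br')]
```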

HTMLParser.handle_starttag(tag, attrs)

This method is called to handle the start of a tag. The tag argument is the name of the tag converted to lower case. The attrs argument is a list of (name, value) pairs containing the attributes found inside the tag's <> brackets. The name will be translated to lower case, quotes in the value are removed, and character and entity references are replaced.

For instance, for the tag …
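A small sketch of the normalisation described for handle_starttag() (AttrRecorder and the sample markup are hypothetical): names are lower-cased, quotes are stripped, references in values are decoded, and an attribute without a value gets None.

```python
from html.parser import HTMLParser


class AttrRecorder(HTMLParser):
    """Hypothetical helper: stores (tag, attrs) for every start tag."""

    def __init__(self):
        super().__init__()
        self.starts = []

    def handle_starttag(self, tag, attrs):
        self.starts.append((tag, attrs))


recorder = AttrRecorder()
# Upper-case names, quoted values, an entity reference and a valueless attribute
recorder.feed('<INPUT Type="text" VALUE=\'a &amp; b\' disabled>')
recorder.close()
print(recorder.starts)
# [('input', [('type', 'text'), ('value', 'a & b'), ('disabled', None)])]
```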

```
>>> parser.feed('<!DOCTYPE HTML PUBLIC "-//W3C//DTD HTML 4.01//EN" "…">')
Decl: DOCTYPE HTML PUBLIC "-//W3C//DTD HTML 4.01//EN" "…"
```

Parsing an element with a few attributes and a title:

```
>>> parser.feed('<img src="…" alt="The Python logo">')
Start tag: img
attr: ('src', '…')
attr: ('alt', 'The Python logo')
>>>
>>> parser.feed('<h1>Python</h1>')
Start tag: h1
Data: Python
End tag: h1
```

The content of script and style elements is returned as is, without further parsing:

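```
>>> parser.feed('<style type="text/css">#python { color: green}</style>')
Start tag: style
attr: ('type', 'text/css')
Data: #python { color: green}
End tag: style

>>> parser.feed('<script type="text/javascript">alert("hello!");</script>')
Start tag: script
attr: ('type', 'text/javascript')
Data: alert("hello!");
End tag: script
```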
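A sketch showing that script content reaches handle_data() untouched, assuming a hypothetical DataRecorder subclass: the `<` and `&amp;` inside the script are neither parsed as markup nor decoded.

```python
from html.parser import HTMLParser


class DataRecorder(HTMLParser):
    """Hypothetical helper: records every handle_data() call."""

    def __init__(self):
        super().__init__()
        self.data = []

    def handle_data(self, data):
        self.data.append(data)


recorder = DataRecorder()
# Inside <script>, "<" and "&amp;" are treated as plain text
recorder.feed('<script>if (a < b && c) { x = "&amp;"; }</script>')
recorder.close()
print(recorder.data)  # ['if (a < b && c) { x = "&amp;"; }']
```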

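Parsing comments:

```
>>> parser.feed('<!-- a comment -->'
...             '<!--[if IE 9]>IE-specific content-->')
Comment: a comment
Comment: [if IE 9]>IE-specific content
```

Parsing named and numeric character references and converting them to the correct character (these three references are all equivalent to '>'). The entity and character-reference handlers are only called when the parser was created with convert_charrefs=False:

```
>>> parser.feed('&gt;&#62;&#x3E;')
Named ent: >
Num ent: >
Num ent: >
```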

Feeding incomplete chunks to feed() works, but handle_data() might be called more than once (unless convert_charrefs is set to True):

```
>>> for chunk in ['<sp', 'an>buff', 'ered ', 'text</s', 'pan>']:
...     parser.feed(chunk)
...
Start tag: span
Data: buff
Data: ered
Data: text
End tag: span
```
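Since a run of text may be split across several handle_data() calls, a common pattern is to collect the pieces and join them when the element ends. A minimal sketch, assuming a hypothetical TextJoiner subclass and illustrative chunks:

```python
from html.parser import HTMLParser


class TextJoiner(HTMLParser):
    """Hypothetical helper: joins text pieces so chunk boundaries don't matter."""

    def __init__(self):
        super().__init__()
        self._pieces = []
        self.texts = []

    def handle_data(self, data):
        # May be called several times for one run of text
        self._pieces.append(data)

    def handle_endtag(self, tag):
        # Emit the joined text once the element closes
        self.texts.append((tag, "".join(self._pieces)))
        self._pieces = []


joiner = TextJoiner()
for chunk in ["<sp", "an>buff", "ered ", "text</s", "pan>"]:
    joiner.feed(chunk)
joiner.close()
print(joiner.texts)  # [('span', 'buffered text')]
```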

Parsing invalid HTML (e.g. unquoted attributes) also works:

```
>>> parser.feed('<p><a class=link href=#main>tag soup</p></a>')
Start tag: p
Start tag: a
attr: ('class', 'link')
attr: ('href', '#main')
Data: tag soup
End tag: p
End tag: a
```
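The transcripts above print through a subclass whose definition is not included in this section. Below is one hypothetical subclass (EventPrinter) that produces the same labels; it is a sketch, not the documentation's own code. It also keeps each printed line in self.lines, and its entity and character-reference handlers only fire when convert_charrefs=False.

```python
from html.entities import name2codepoint
from html.parser import HTMLParser


class EventPrinter(HTMLParser):
    """Hypothetical subclass printing events with the labels used above."""

    def __init__(self, **kwargs):
        super().__init__(**kwargs)
        self.lines = []  # copy of every printed line

    def emit(self, label, value):
        line = f"{label} {value}"
        self.lines.append(line)
        print(line)

    def handle_starttag(self, tag, attrs):
        self.emit("Start tag:", tag)
        for attr in attrs:
            self.emit("attr:", attr)

    def handle_endtag(self, tag):
        self.emit("End tag:", tag)

    def handle_data(self, data):
        self.emit("Data:", data)

    def handle_comment(self, data):
        self.emit("Comment:", data.strip())

    def handle_decl(self, data):
        self.emit("Decl:", data)

    # The two handlers below only run when convert_charrefs=False
    def handle_entityref(self, name):
        self.emit("Named ent:", chr(name2codepoint[name]))

    def handle_charref(self, name):
        # name is e.g. "62" (decimal) or "x3E" (hexadecimal)
        if name.lower().startswith("x"):
            self.emit("Num ent:", chr(int(name[1:], 16)))
        else:
            self.emit("Num ent:", chr(int(name)))


parser = EventPrinter()
parser.feed("<h1>Python</h1>")
parser.close()

raw_parser = EventPrinter(convert_charrefs=False)
raw_parser.feed("&gt;&#62;&#x3E;")
raw_parser.close()
```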

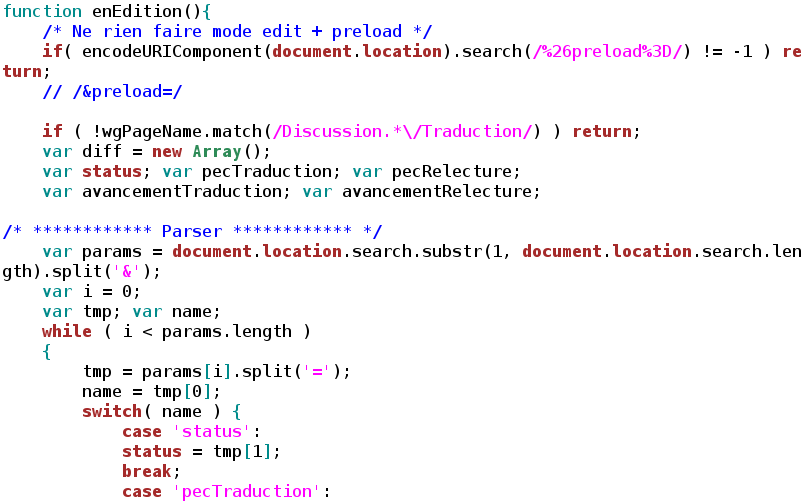

HTMLParser in Python 3.x – AskPython

Python's HTMLParser module provides a very simple and efficient way for coders to read through HTML code. It comes pre-installed in the standard library, which simplifies working with it: we do not need to install additional packages from the Python Package Index (PyPI).

What is HTMLParser?

Essentially, HTMLParser lets us understand HTML code in a nested fashion. The module has methods that are automatically called when specific HTML elements are encountered, which simplifies identifying HTML tags and data. When fed with HTML data, the parser reads through it one tag at a time, going from start tags to the tags within, then the end tags, and so on.

How to Use HTMLParser?

HTMLParser only identifies the tags or data for us; it does not output any data when something is identified. We need to add functionality to the methods before they can output the information they find.

But if we need to add functionality, what's the use of the HTMLParser? This module saves us the time of creating the functionality for identifying tags. We're not going to code how to identify the tags, only what to do once they're identified.

Understood? Great! Now let's get into creating a parser for ourselves!

Subclassing the HTMLParser

How can we add functionality to the HTMLParser methods? By subclassing. Also known as inheritance, subclassing creates a class that retains the behavior of HTMLParser while adding more. It lets us override the default functionality of a method (which, in our case, is to do nothing when tags are identified) and add some better functions instead. Let's see how to work with the HTMLParser now.

Understanding Names of the Called Methods

There are many methods available within the module. We'll go over the ones you'd need frequently and then learn how to make use of them.

HTMLParser.handle_starttag(tag, attrs) – Called when start tags are found (for example `<body>`)
HTMLParser.handle_endtag(tag) – Called when end tags are found (for example `</body>`)
HTMLParser.handle_data(data) – Called when text between tags is found

```python
from html.parser import HTMLParser

class Parse(HTMLParser):
    def __init__(self):
        # When overriding __init__(), call the parent class's __init__()
        # so the parser's internal state is set up
        super().__init__()

    # Defining what the methods should output when called by HTMLParser.
    def handle_starttag(self, tag, attrs):
        print("Start tag: ", tag)
        for a in attrs:
            print("Attributes of the tag: ", a)

    def handle_data(self, data):
        print("Here's the data: ", data)

    def handle_endtag(self, tag):
        print("End tag: ", tag)

testParser = Parse()
testParser.feed("…")
```

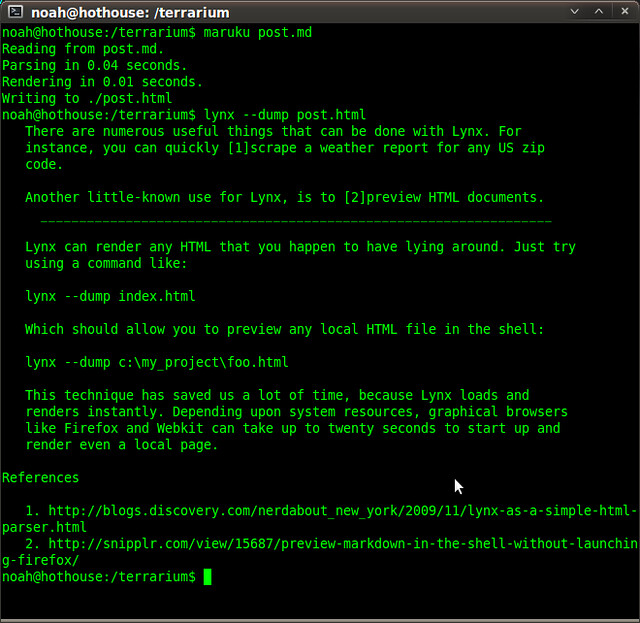

HTMLParser Output

What Can HTMLParser Be Used For?

Web data is what most people would need the HTMLParser module for. Not to say that it cannot be used for anything else, but when you need to read loads of websites and find specific information, this module will make the task a cakewalk for you.

HTMLParser Real World Example

I'm going to pull every single link from the Python Wikipedia page for this example.

Doing it manually, by right-clicking on a link, copying and pasting it into a word file, and then moving on to the next, is possible too. But that would take hours if there are lots of links on the page, which is a typical situation with Wikipedia pages.

Instead, we'll spend 5 minutes coding an HTMLParser and cut the time needed to finish the task from hours to a few seconds. Let's do it!

```python
from html.parser import HTMLParser
import urllib.request

# Import HTML from a URL
url = urllib.request.urlopen("…(programming_language)")
html = url.read().decode()

class Parse(HTMLParser):
    def __init__(self):
        # Call the parent class's __init__() to set up the parser state
        super().__init__()

    # Defining what the method should output when called by HTMLParser.
    def handle_starttag(self, tag, attrs):
        # Only parse the 'anchor' tag.
        if tag == "a":
            for name, link in attrs:
                # link is None for a bare "href" attribute, so check it first
                if name == "href" and link and link.startswith("…"):
                    print(link)

p = Parse()
p.feed(html)
```
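The scraper above needs network access. A variant sketch that collects links into a list instead of printing them, run against a hypothetical in-memory page; the prefix argument is an assumed parameter standing in for the startswith() check:

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collects href values of <a> tags into a list instead of printing them."""

    def __init__(self, prefix=""):
        super().__init__()
        self.prefix = prefix  # hypothetical filter: keep links starting with this
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            # value is None for a bare attribute such as <a href>
            if name == "href" and value and value.startswith(self.prefix):
                self.links.append(value)


# Hypothetical sample page, so the sketch runs without network access
sample = """
<p>See <a href="https://www.python.org/">python.org</a>,
<a href="/wiki/Guido_van_Rossum">Guido</a> and <a name="top">an anchor</a>.</p>
"""

collector = LinkCollector(prefix="https://")
collector.feed(sample)
collector.close()
print(collector.links)  # ['https://www.python.org/']
```

With the default empty prefix, both href values would be kept and the `<a name="top">` element, which has no href, would still be skipped.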

Python HTMLParser Web Scraper

The Python programming page on Wikipedia has more than 300 links. I'm sure it would have taken me at least an hour to make sure we had all of them. But with this simple script, it took under 5 seconds to output every single link without missing any of them!

Conclusion

This module is really fun to play around with. We ended up scraping tons of data from the web using this simple module in the process of writing this.

There are other modules, like BeautifulSoup, which are better known. But for quick and simple tasks, HTMLParser does a really amazing job!

Best library to parse HTML with Python 3 and example?

I'm completely new to Python and am using Python 3.1 on Windows (pywin). I need to parse some HTML, essentially to extract values between specific HTML tags, and am confused by my array of options; everything I find is suited for Python 2.x. I've read raves about Beautiful Soup, HTML5Lib and lxml, but I cannot figure out how to install any of these on Windows.

Questions:

What HTML parser do you recommend?

How do I install it? (Be gentle, I’m completely new to Python and remember I’m on Windows)

Do you have a simple example of how to use the recommended library to snag HTML from a specific URL and return the value out of, say, something like this:

| foo |

(say we want to return “/blahblah”)

asked Mar 24 '10 at 2:54 by TMC

Web-scraping in Python 3 is currently very poorly supported; all the decent libraries work only with Python 2. If you must web scrape in Python, use Python 2.

Although Beautiful Soup is oft recommended (every question regarding web scraping with Python in Stack Overflow suggests it), it’s not as good for Python 3 as it is for Python 2; I couldn’t even install it as the installation code was still Python 2.

As for adequate and simple-to-install solutions for Python 3, you can try the standard library's HTML parser (html.parser); although quite barebones, it comes with Python 3.

answered Jun 29 '10 at 22:13 by Humphrey Bogart
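A minimal sketch of that standard-library approach for the question's example, assuming the target is an `<a>` element whose text is foo (HrefByText and the table markup are hypothetical). To work on a live page, the string could come from urllib.request.urlopen(url).read().decode() instead.

```python
from html.parser import HTMLParser


class HrefByText(HTMLParser):
    """Hypothetical helper: finds the href of the <a> whose text equals wanted_text."""

    def __init__(self, wanted_text):
        super().__init__()
        self.wanted_text = wanted_text
        self._current_href = None  # href of the <a> we are inside, if any
        self._text = []
        self.result = None

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._current_href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._current_href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._current_href is not None:
            # Keep the first matching link only
            if self.result is None and "".join(self._text).strip() == self.wanted_text:
                self.result = self._current_href
            self._current_href = None


# Hypothetical markup standing in for the question's table cell
html = '<table><tr><td><a href="/blahblah">foo</a></td></tr></table>'
finder = HrefByText("foo")
finder.feed(html)
finder.close()
print(finder.result)  # /blahblah
```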

If your HTML is well formed, you have many options, such as SAX and DOM. If it is not well formed, you need a fault-tolerant parser such as Beautiful Soup, ElementTidy, or lxml's HTML parser. No parser is perfect; when presented with a variety of broken HTML, sometimes I have to try more than one. lxml and ElementTree use a mostly compatible API that is more of a standard than Beautiful Soup's.

In my opinion, lxml is the best module for working with XML documents, but the ElementTree included with Python is still pretty good. In the past I have used Beautiful Soup to convert HTML to XML and construct an ElementTree for processing the data.

answered Mar 24 '10 at 3:23 by mikerobi
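For the well-formed case, the standard library's xml.etree.ElementTree is enough. A sketch (both sample strings are hypothetical) showing that it parses clean markup but raises ParseError on the kind of broken HTML that needs a fault-tolerant parser:

```python
import xml.etree.ElementTree as ET

# Well-formed markup parses directly into an element tree
well_formed = '<p>Go to <a href="/blahblah">foo</a></p>'
root = ET.fromstring(well_formed)
print(root.find("a").get("href"))  # /blahblah

# Unclosed <b> and <i> make this invalid XML
broken = "<p>Some<b>bad<i>HTML</p>"
rejected = False
try:
    ET.fromstring(broken)
except ET.ParseError as err:
    rejected = True
    print("ElementTree rejected it:", err)
```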

I'm currently using lxml, and on Windows I used the installation binary from …

```python
import lxml.html

page = …  # parsed lxml.html document (loading step elided)
title = page.xpath('//head/title/text()')[0]
```

answered Nov 17 '11 at 19:54 by Márcio

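You might try beautifulsoup4, which is compatible with both Python 2 and Python 3. You can use it easily:

```python
from bs4 import BeautifulSoup

# "html.parser" selects Python's built-in parser, so no extra parser package is needed
soup = BeautifulSoup("<p>Some<b>bad<i>HTML", "html.parser")
```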

answered Dec 15 '20 at 7:13 by xiaojueguan


Frequently Asked Questions about python3 html parser

How do I parse HTML in Python?

Example:

```python
from html.parser import HTMLParser

start_tags = []
end_tags = []

class Parser(HTMLParser):
    # method to append the start tag to the list start_tags.
    def handle_starttag(self, tag, attrs):
        start_tags.append(tag)

    # method to append the end tag to the list end_tags.
    def handle_endtag(self, tag):
        end_tags.append(tag)
```

Which Python library did we use to parse HTML?

Beautiful Soup (bs4) is a Python library that is used to parse information out of HTML or XML files. It parses its input into an object on which you can run a variety of searches. To start parsing an HTML file, import the Beautiful Soup library and create a BeautifulSoup object. (Jan 7, 2021)

Does the Python language support the HTML language?

Python has the capability to process HTML files through the HTMLParser class in the html.parser module. It can detect the nature of the HTML tags, their position, and many other properties of the tags. (Jan 12, 2021)