Reverse Proxy Gateway

What is a Reverse Proxy vs. Load Balancer? – NGINX

Reverse proxy servers and load balancers are components in a client-server computing architecture. Both act as intermediaries in the communication between the clients and servers, performing functions that improve efficiency. They can be implemented as dedicated, purpose-built devices, but increasingly in modern web architectures they are software applications that run on commodity hardware.

The basic definitions are simple:

A reverse proxy accepts a request from a client, forwards it to a server that can fulfill it, and returns the server’s response to the client.

A load balancer distributes incoming client requests among a group of servers, in each case returning the response from the selected server to the appropriate client.

But they sound pretty similar, right? Both types of application sit between clients and servers, accepting requests from the former and delivering responses from the latter. No wonder there’s confusion about what’s a reverse proxy vs. load balancer. To help tease them apart, let’s explore when and why they’re typically deployed at a website.

Load Balancing

Load balancers are most commonly deployed when a site needs multiple servers because the volume of requests is too much for a single server to handle efficiently. Deploying multiple servers also eliminates a single point of failure, making the website more reliable. Most commonly, the servers all host the same content, and the load balancer’s job is to distribute the workload in a way that makes the best use of each server’s capacity, prevents overload on any server, and results in the fastest possible response to the client.
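As a rough illustration of the distribution step, here is a minimal round-robin sketch. Round robin is only one of several possible policies, and the class name and backend addresses are made up for the example; nothing here reflects how any particular product is implemented.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out backend addresses in strict rotation."""

    def __init__(self, backends):
        if not backends:
            raise ValueError("at least one backend is required")
        self._backends = list(backends)
        self._rotation = cycle(self._backends)

    def pick(self):
        """Return the backend that should receive the next request."""
        return next(self._rotation)


# Hypothetical backend addresses, used only for illustration.
balancer = RoundRobinBalancer(["10.0.0.1:8080", "10.0.0.2:8080", "10.0.0.3:8080"])
first_four = [balancer.pick() for _ in range(4)]
```

Strict rotation ignores how busy each server is; policies that track open connections or response times make better use of uneven capacity, at the cost of more bookkeeping.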

A load balancer can also enhance the user experience by reducing the number of error responses the client sees. It does this by detecting when servers go down, and diverting requests away from them to the other servers in the group. In the simplest implementation, the load balancer detects server health by intercepting error responses to regular requests. Application health checks are a more flexible and sophisticated method in which the load balancer sends separate health-check requests and requires a specified type of response to consider the server healthy.
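The simpler of the two approaches, watching the responses to regular requests, might look like the sketch below. The failure threshold, the rule that any 5xx status counts as a failure, and the class name are all assumptions chosen for illustration.

```python
class HealthTracker:
    """Marks a backend unhealthy after several consecutive error responses."""

    def __init__(self, backends, max_failures=3):
        self.max_failures = max_failures
        # Consecutive failure count per backend.
        self._failures = {backend: 0 for backend in backends}

    def record(self, backend, status_code):
        """Update the failure count from the status of a regular response."""
        if status_code >= 500:
            self._failures[backend] += 1
        else:
            self._failures[backend] = 0

    def healthy(self):
        """Return the backends that are still eligible to receive requests."""
        return [b for b, n in self._failures.items() if n < self.max_failures]
```

A backend that has been taken out of rotation no longer receives regular requests, so this passive scheme alone cannot notice when it recovers. That gap is one reason separate health-check requests are the more flexible method.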

Another useful function provided by some load balancers is session persistence, which means sending all requests from a particular client to the same server. Even though HTTP is stateless in theory, many applications must store state information just to provide their core functionality – think of the shopping basket on an e-commerce site. Such applications underperform or can even fail in a load-balanced environment, if the load balancer distributes requests in a user session to different servers instead of directing them all to the server that responded to the initial request.
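One simple way to get this behavior is to derive the server from a stable client identifier. The sketch below hashes that identifier; the function name is hypothetical, and real load balancers may instead use cookies or learned session tables.

```python
import hashlib


def sticky_backend(client_id, backends):
    """Map a client identifier (for example an IP or session ID) to one backend."""
    if not backends:
        raise ValueError("at least one backend is required")
    digest = hashlib.sha256(client_id.encode("utf-8")).digest()
    # Use the first 8 bytes of the hash as a stable integer.
    index = int.from_bytes(digest[:8], "big") % len(backends)
    return backends[index]
```

The mapping stays stable only while the backend list is unchanged. Adding or removing a server remaps many clients, which is why this is a sketch of the idea rather than a complete persistence scheme.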

Reverse Proxy

Whereas deploying a load balancer makes sense only when you have multiple servers, it often makes sense to deploy a reverse proxy even with just one web server or application server. You can think of the reverse proxy as a website’s “public face.” Its address is the one advertised for the website, and it sits at the edge of the site’s network to accept requests from web browsers and mobile apps for the content hosted at the website. The benefits are two-fold:

Increased security – No information about your backend servers is visible outside your internal network, so malicious clients cannot access them directly to exploit any vulnerabilities. Many reverse proxy servers include features that help protect backend servers from distributed denial-of-service (DDoS) attacks, for example by rejecting traffic from particular client IP addresses (blacklisting), or limiting the number of connections accepted from each client.

Increased scalability and flexibility – Because clients see only the reverse proxy’s IP address, you are free to change the configuration of your backend infrastructure. This is particularly useful in a load-balanced environment, where you can scale the number of servers up and down to match fluctuations in traffic volume.
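The two protective measures mentioned under security, rejecting particular client IP addresses and limiting connections per client, can be sketched as a small admission check. The class name, the limit, and the example addresses (taken from documentation-only IP ranges) are assumptions for illustration.

```python
from collections import Counter


class ConnectionGate:
    """Rejects denied IPs and caps concurrent connections per client."""

    def __init__(self, denied_ips, max_connections_per_client):
        self._denied = set(denied_ips)
        self._limit = max_connections_per_client
        self._open = Counter()  # currently open connections per client IP

    def try_open(self, client_ip):
        """Return True if a new connection from client_ip is accepted."""
        if client_ip in self._denied:
            return False
        if self._open[client_ip] >= self._limit:
            return False
        self._open[client_ip] += 1
        return True

    def close(self, client_ip):
        """Record that one connection from client_ip has ended."""
        if self._open[client_ip] > 0:
            self._open[client_ip] -= 1
```

Checks like these are cheap to run at the proxy, so abusive traffic is turned away before it reaches a backend server.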

Another reason to deploy a reverse proxy is for web acceleration – reducing the time it takes to generate a response and return it to the client. Techniques for web acceleration include the following:

Compression – Compressing server responses before returning them to the client (for instance, with gzip) reduces the amount of bandwidth they require, which speeds their transit over the network.

SSL termination – Encrypting the traffic between clients and servers protects it as it crosses a public network like the Internet. But decryption and encryption can be computationally expensive. By decrypting incoming requests and encrypting server responses, the reverse proxy frees up resources on backend servers which they can then devote to their main purpose, serving content.

Caching – Before returning the backend server’s response to the client, the reverse proxy stores a copy of it locally. When the client (or any client) makes the same request, the reverse proxy can provide the response itself from the cache instead of forwarding the request to the backend server. This both decreases response time to the client and reduces the load on the backend server.
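Of the three techniques, caching is the easiest to sketch in a few lines. The version below assumes a fixed time-to-live and a `fetch` callable standing in for the request to the backend server; both are illustrative choices, and a real proxy would also honor HTTP cache headers.

```python
import time


class ResponseCache:
    """Serves repeated requests from memory until an entry's TTL expires."""

    def __init__(self, fetch, ttl_seconds=60.0, clock=time.monotonic):
        self._fetch = fetch          # hypothetical callable: url -> response body
        self._ttl = ttl_seconds
        self._clock = clock          # injectable so expiry can be tested
        self._entries = {}           # url -> (stored_at, body)

    def get(self, url):
        """Return the cached body for url, fetching from the backend if needed."""
        now = self._clock()
        entry = self._entries.get(url)
        if entry is not None and now - entry[0] < self._ttl:
            return entry[1]
        body = self._fetch(url)
        self._entries[url] = (now, body)
        return body
```

Every cache hit is a request the backend server never sees, which is where both the lower response time and the reduced load come from.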

How Can NGINX Plus Help?

NGINX Plus and NGINX are the best-in-class reverse proxy and load balancing solutions used by high-traffic websites such as Dropbox, Netflix, and Zynga. More than 400 million websites worldwide rely on NGINX Plus and NGINX Open Source to deliver their content quickly, reliably, and securely.

NGINX Plus performs all the load-balancing and reverse proxy functions discussed above and more, improving website performance, reliability, security, and scale. As a software-based load balancer, NGINX Plus is much less expensive than hardware-based solutions with similar capabilities. The comprehensive load-balancing and reverse-proxy capabilities in NGINX Plus enable you to build a highly optimized application delivery network.

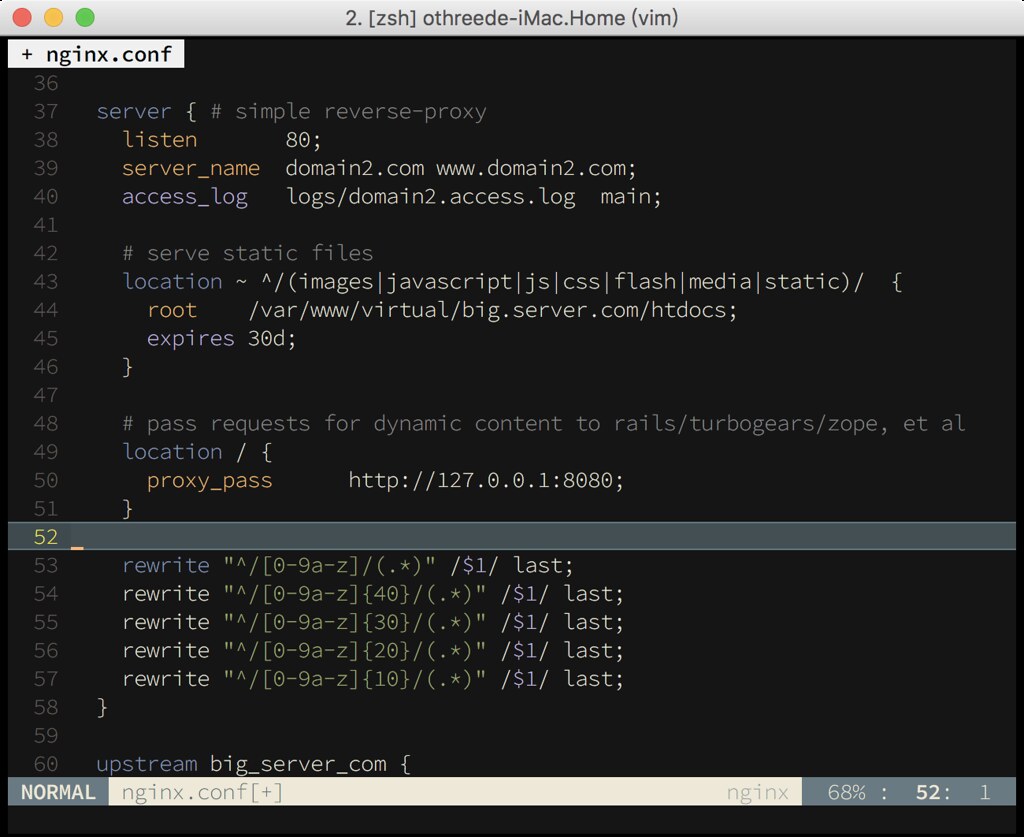

For details about how NGINX Plus implements the features described here, check out these resources:

Application Load Balancing with NGINX Plus

Application Health Checks with NGINX Plus

Session Persistence with NGINX Plus

Mitigating DDoS Attacks with NGINX and NGINX Plus

Compression and Decompression

SSL termination for HTTP and TCP

Content Caching in NGINX Plus

HTTP – Gateway (Reverse Proxy) – Datacadamia

About

A reverse proxy (or gateway) is a proxy that is configured to appear to the client just like an ordinary web server.

Traffic from the internet at large enters the system through the reverse proxy, which then routes it to the service.

The client makes ordinary requests to the proxy. The proxy then decides, based on several routing criteria, where to send those requests internally, and returns the content as if it were itself the origin.
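The routing decision can be sketched with one common criterion, the URL path prefix. The internal hostnames and the longest-prefix rule below are assumptions for the example; real proxies can also route on host name, headers, or other request properties.

```python
def route(path, routes, default=None):
    """Return the internal target whose prefix is the longest match for path."""
    best = None
    for prefix in routes:
        if path.startswith(prefix) and (best is None or len(prefix) > len(best)):
            best = prefix
    return routes[best] if best is not None else default


# Hypothetical internal services, used only for illustration.
ROUTES = {
    "/api/": "http://api.internal:9000",
    "/api/v2/": "http://api-v2.internal:9001",
    "/static/": "http://assets.internal:8080",
}
```

For instance, `route("/api/v2/users", ROUTES)` selects the v2 service because its prefix is more specific than `/api/`. The client only ever sees the proxy's address.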

A proxy configured to do the inverse (i.e., to receive internal requests and route them to an external service such as a website) is called a forward proxy.

To be fair, a gateway also forwards requests to internal services.

Usage

A typical usage of a reverse proxy is:

to enable encrypted HTTPS connections

to provide caching for a slower back-end server (performance)

to bring several servers into the same URL space.

to password-protect content

to inject code in the page (Example: browser-sync)

to send the request to a mock server for testing purposes.

A CDN is an Internet-wide reverse proxy that provides caching.

Management

An HTTP reverse proxy typically adds headers such as X-Forwarded-For (a de facto standard) or Forwarded (standardized in RFC 7239) to inform the upstream server about the user’s IP address and other request properties.
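As a sketch of that header handling, the function below appends the client address to X-Forwarded-For and records the original scheme in X-Forwarded-Proto, two widely used de facto headers. The function name is hypothetical, and the code assumes header names arrive with exactly this capitalization, which real HTTP libraries do not guarantee.

```python
def add_forwarding_headers(headers, client_ip, scheme):
    """Return a copy of headers with the client's address and scheme recorded."""
    forwarded = dict(headers)
    previous = forwarded.get("X-Forwarded-For")
    # Each proxy in a chain appends the address it received the request from.
    forwarded["X-Forwarded-For"] = f"{previous}, {client_ip}" if previous else client_ip
    forwarded["X-Forwarded-Proto"] = scheme
    return forwarded
```

Because any client can send these headers itself, an upstream server should trust them only when the request comes from a proxy it knows.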

API gateway vs. reverse proxy – Stack Overflow

A microservice architecture is often deployed behind a reverse proxy (such as nginx or Apache httpd), and the API gateway pattern is used to implement cross-cutting concerns. Sometimes the reverse proxy does the work of the API gateway.

It would be good to see clear differences between these two approaches.

It looks like the potential benefit of using an API gateway is invoking multiple microservices and aggregating the results. All other responsibilities of an API gateway can be implemented using a reverse proxy, such as:

Authentication (it can be done using nginx Lua scripts);

Transport security, which is itself a reverse proxy task;

Load balancing…

So based on this there are several questions:

Does it make sense to use an API gateway and a reverse proxy simultaneously (for example, request -> API gateway -> reverse proxy (nginx) -> concrete microservice)? In what cases?

What can be implemented with an API gateway but not with a reverse proxy, and vice versa?

Alex Herman

asked Mar 2 ’16 at 19:44

It is easier to think about them if you realize they aren’t mutually exclusive. Think of an API gateway as a specific type of reverse proxy implementation.

In regards to your questions, it is not uncommon to see both used in conjunction, where the API gateway is treated as an application tier that sits behind a reverse proxy for load balancing and health checking. An example would be something like a WAF sandwich architecture, in which your Web Application Firewall/API Gateway is sandwiched by reverse proxy tiers, one for the WAF itself and the other for the individual microservices it talks to.

Regarding the differences, they are very similar. It’s just nomenclature. As you take a basic reverse proxy setup and start bolting on more pieces like authentication, rate limiting, dynamic config updates, and service discovery, people are more likely to call that an API gateway.
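To make one of those bolted-on pieces concrete, here is a minimal sketch of rate limiting using a token bucket. The token bucket is just one common technique, and the class name and parameters are assumptions for the example, not a description of any specific gateway.

```python
import time


class TokenBucket:
    """Allows bursts up to `capacity`, refilling at `rate_per_second`."""

    def __init__(self, rate_per_second, capacity, clock=time.monotonic):
        self._rate = rate_per_second
        self._capacity = capacity
        self._clock = clock          # injectable so refill can be tested
        self._tokens = float(capacity)
        self._last = clock()

    def allow(self):
        """Return True and spend one token if the request may proceed."""
        now = self._clock()
        elapsed = now - self._last
        self._tokens = min(self._capacity, self._tokens + elapsed * self._rate)
        self._last = now
        if self._tokens >= 1.0:
            self._tokens -= 1.0
            return True
        return False
```

A gateway would typically keep one bucket per client or API key and answer with an error status when `allow()` returns False.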

answered May 17 ’16 at 1:04

Justin Talbott

I believe an API gateway is a reverse proxy that can be configured dynamically via an API and potentially via a UI, while a traditional reverse proxy (like Nginx, HAProxy or Apache) is configured via a config file and has to be restarted or reloaded when the configuration changes. Thus, an API gateway should be used when routing rules or other configuration change often. To your questions:

It makes sense as long as every component in this sequence serves its purpose.

The differences are not in the feature list but in the way configuration changes are applied.

Additionally, an API gateway is often provided as SaaS, like Apigee or Tyk for example.

Also, here’s my tutorial on how to create a simple API Gateway with

Hope it helps.

answered Apr 6 ’16 at 0:51

API gateways usually operate as an L7 (application-layer) construct.

API gateways provide additional functionality compared to a plain reverse proxy. If you consider some of the portals out there, they can provide:

full API Lifecycle Management including documentation

a portal which can be used as the source of truth for various client applications and where you can provide client governance, rate limiting etc.

routing to different versions of the API including canary/beta versions

detecting usage patterns, registering apps, retrieving client credentials, etc.
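Of the items above, routing to canary versions is easy to sketch. The version below assigns each client to a bucket from 0 to 99 by hashing an identifier and sends the lowest buckets to the canary. The function name, the bucket count, and the "canary"/"stable" labels are assumptions for illustration.

```python
import hashlib


def choose_version(client_id, canary_percent):
    """Return "canary" for roughly canary_percent of clients, else "stable"."""
    digest = hashlib.sha256(client_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100
    return "canary" if bucket < canary_percent else "stable"
```

Hashing the client identifier, rather than picking at random per request, keeps each client on the same version for the whole rollout.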

However, with the advent of service meshes like Istio and Consul, a lot of the functionality of API gateways will be subsumed by meshes.

answered Aug 11 ’20 at 23:47

pcodex

An API gateway acts as a reverse proxy to accept all application programming interface (API) calls, aggregate the various services required to fulfill them, and return the appropriate result.
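The aggregation step might look roughly like the sketch below, which calls several services concurrently and merges their results into one response. The two `fetch_*` functions are hypothetical stand-ins for real microservice calls, and a production gateway would also need timeouts and error handling.

```python
from concurrent.futures import ThreadPoolExecutor


def aggregate(services, user_id):
    """Call every service with user_id in parallel and merge the results by name."""
    if not services:
        return {}
    with ThreadPoolExecutor(max_workers=len(services)) as pool:
        futures = {name: pool.submit(call, user_id) for name, call in services.items()}
        return {name: future.result() for name, future in futures.items()}


# Hypothetical stand-ins for calls to two backend microservices.
def fetch_profile(user_id):
    return {"id": user_id, "name": "example"}


def fetch_orders(user_id):
    return [{"order": 1, "user": user_id}]


combined = aggregate({"profile": fetch_profile, "orders": fetch_orders}, 42)
```

The client makes one call and receives one combined result, instead of contacting each service separately.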

An API gateway has a more robust set of features, especially around security and monitoring, than an API proxy. I would say the API gateway pattern, a variant of which is known as Backend for Frontend (BFF), is widely used in microservices development. Check out the article for the benefits and features of the API gateway pattern in the microservice world.

On the other hand, an API proxy is basically a lightweight API gateway. It includes some basic security and monitoring capabilities. So, if you already have an API and your needs are simple, an API proxy will work fine.

The image below gives a clear picture of the difference between an API gateway and a reverse proxy.

answered Aug 15 at 7:19

Frequently Asked Questions about reverse proxy gateway

What is reverse proxy gateway?

A reverse proxy (or gateway) is a proxy that is configured to appear to the client just like an ordinary web server. Traffic from the internet at large enters the system through the reverse proxy, which then routes it to the service. … To be fair, a gateway also forwards requests to internal services.

Is reverse proxy same as API gateway?

An API gateway acts as a reverse proxy to accept all application programming interface (API) calls, aggregate the various services required to fulfill them, and return the appropriate result. An API gateway has a more robust set of features, especially around security and monitoring, than an API proxy.

What is a proxy gateway?

A proxy server, also known as a “proxy” or “application-level gateway”, is a computer that acts as a gateway between a local network (for example, all the computers at one company or in one building) and a larger-scale network such as the internet. Proxy servers provide increased performance and security.