Python Requests Crawler

Web Scraping 101 in Python with Requests & BeautifulSoup

Intro

Information is everywhere online. Unfortunately, some of it is hard to access programmatically. While many websites offer an API, they are often expensive or have very strict rate limits, even if you’re working on an open-source and/or non-commercial project or product.

That’s where web scraping can come into play. Wikipedia defines web scraping as follows:

Web scraping, web harvesting, or web data extraction is data scraping used for extracting data from websites. Web scraping software may access the World Wide Web directly using the Hypertext Transfer Protocol [HTTP], or through a web browser. (Wikipedia, “Web scraping”)

In practice, web scraping encompasses any method allowing a programmer to access the content of a website programmatically, and thus, (semi-) automatically.

Here are three of the most popular approaches (i.e. Python libraries) for web scraping:

1. Requests + BeautifulSoup: Sending an HTTP request, ordinarily via Requests, to a webpage and then parsing the returned HTML (ordinarily using BeautifulSoup) to access the desired information. Typical Use Case: standard web scraping problems; refer to the case study.
2. Selenium: Using tools ordinarily used for automated software testing, primarily Selenium, to access a website’s content programmatically. Typical Use Case: websites which use JavaScript or are otherwise not directly accessible through HTML.
3. Scrapy: A more general web scraping framework, which can be used to build spiders and scrape data from various websites whilst minimizing repetition. Typical Use Case: scraping Amazon reviews.

While you could scrape data using any other programming language as well, Python is commonly used due to its ease of syntax as well as the large variety of libraries available for scraping purposes in Python.

After this short intro, this post will move on to some web scraping ethics, followed by some general information on the libraries which will be used in this post. Lastly, everything we have learned so far will be applied to a case study in which we will acquire the data of all companies in the portfolio of Sequoia Capital, one of the most well-known VC firms in the US. After checking their website and their robots.txt, scraping Sequoia’s portfolio seems to be allowed; refer to the section on robots.txt and the case study for details on how I went about determining this.

In the scope of this blog post, we will only be able to have a look at one of the three methods above. Since the standard combination of Requests + BeautifulSoup is generally the most flexible and easiest to pick up, we will give it a go in this post. Note that the tools above are not mutually exclusive; you might, for example, get some HTML text with Scrapy or Selenium and then parse it with BeautifulSoup.

Web Scraping Ethics

One factor that is extremely relevant when conducting web scraping is ethics and legality. I’m not a lawyer, and specific laws tend to vary considerably by geography anyway, but in general web scraping tends to fall into a grey area, meaning it is usually not strictly prohibited, but also not generally legal (i.e. not legal under all circumstances). It tends to depend on the specific data you are scraping.

In general, websites may ban your IP address anytime you are scraping something they don’t want you to scrape. We here at STATWORX don’t condone any illegal activity and encourage you to always check explicitly when you’re not sure if it’s okay to scrape something. For that, the following section will come in handy.

Understanding robots.txt

The robot exclusion standard (robots.txt) is a protocol which is read explicitly by web crawlers (such as the ones used by big search engines, i.e. mostly Google) and tells them which parts of a website may be indexed by the crawler and which may not. In general, crawlers or scrapers aren’t forced to follow the limitations set forth in a robots.txt, but it would be highly unethical (and potentially illegal) not to do so.

The following shows an example robots.txt file taken from Hackernews, a social newsfeed run by YCombinator which is popular with many people in startups.

The Hackernews robots.txt specifies that all user agents (hence the * wildcard) may access all URLs except those that are explicitly disallowed. Because only certain URLs are disallowed, this implicitly allows everything else. An alternative would be to exclude everything and then explicitly specify only certain URLs which can be accessed by crawlers or other bots.

Also, notice the crawl delay of 30 seconds, which means that each bot should only send one request every 30 seconds. It is good practice, in general, to let your crawler or scraper sleep at regular (rather large) intervals, since too many requests can bring sites down, even when they come from human users.
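Python’s standard library can read these directives for you. The sketch below feeds urllib.robotparser a small robots.txt modelled on the rules described above (a * user agent, a few disallowed paths, a 30-second crawl delay). The disallowed paths, domain and user-agent string are illustrative assumptions, not Hacker News’ actual file; crawl_delay() requires Python 3.6 or later.

```python
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt modelled on the rules described above;
# the paths are hypothetical, not copied from Hacker News.
ROBOTS_TXT = """\
User-agent: *
Disallow: /vote
Disallow: /reply
Disallow: /submit
Crawl-delay: 30
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text that we already have, without any network access."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    rp = build_parser(ROBOTS_TXT)
    agent = "my-scraper"  # hypothetical user-agent string
    print(rp.can_fetch(agent, "https://news.example.com/item?id=1"))  # True
    print(rp.can_fetch(agent, "https://news.example.com/submit"))     # False
    print(rp.crawl_delay(agent))                                       # 30
```

For a live site you would call set_url() with the site’s robots.txt URL and then read() instead of parse().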

When looking at the robots.txt of Hackernews, it is also quite logical why they disallowed some specific URLs: they don’t want bots to pose as users by, for example, submitting threads, voting or replying. Anything else (e.g. scraping threads and their contents) is fair game, as long as you respect the crawl delay. This makes sense when you consider the mission of Hackernews, which is mostly to disseminate information. Incidentally, they also offer an API that is quite easy to use, so if you really needed information from HN, you would just use their API.

Google’s robots.txt is (obviously) much more restrictive than that of Hackernews. Check it out for yourself, since it is quite long, but essentially, no bots are allowed to perform a search on Google, as specified on its first two lines. Only certain parts of a search are allowed, such as “about” and “static”. If a general URL is disallowed, this is overridden when a more specific URL is allowed (e.g. the disallowing of /search is overridden by the more specific allowing of /search/about).
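To make the precedence rule concrete, here is a minimal sketch of longest-match evaluation: among all Allow/Disallow rules whose path is a prefix of the requested path, the longest one wins, and Allow wins a tie. This follows the behaviour Google documents and RFC 9309 standardizes. As far as I can tell from its implementation, Python’s urllib.robotparser instead applies the first matching rule in file order, so it can disagree on files like Google’s. Wildcards (* and $) are deliberately not handled, and the rules below are a shortened illustration, not Google’s actual file.

```python
from typing import List, Tuple

# (directive, path) pairs; a shortened illustration, not Google's real robots.txt
RULES: List[Tuple[str, str]] = [
    ("disallow", "/search"),
    ("allow", "/search/about"),
    ("allow", "/search/static"),
]


def is_allowed(path: str, rules: List[Tuple[str, str]]) -> bool:
    """Longest matching prefix wins; Allow wins ties; no match means allowed."""
    best_len = -1
    allowed = True
    for directive, rule_path in rules:
        if not rule_path or not path.startswith(rule_path):
            continue  # empty rules match nothing; skip non-matching prefixes
        length = len(rule_path)
        if length > best_len or (length == best_len and directive == "allow"):
            best_len = length
            allowed = directive == "allow"
    return allowed


if __name__ == "__main__":
    for p in ["/search?q=python", "/search/about", "/maps"]:
        print(p, is_allowed(p, RULES))
```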

Moving on, we will take a look at the specific Python packages which will be used in the scope of this case study, namely Requests and BeautifulSoup.

Requests

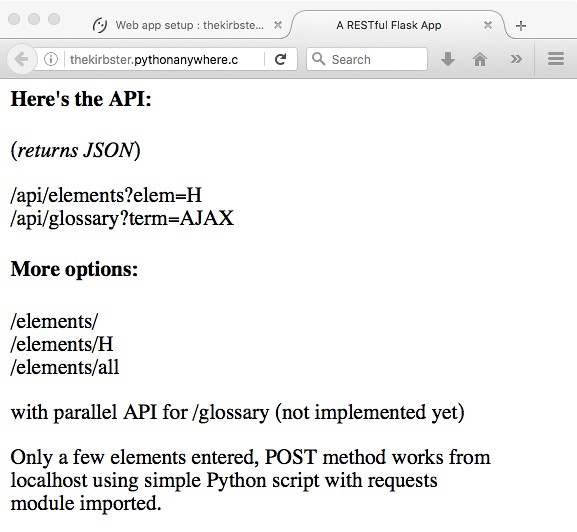

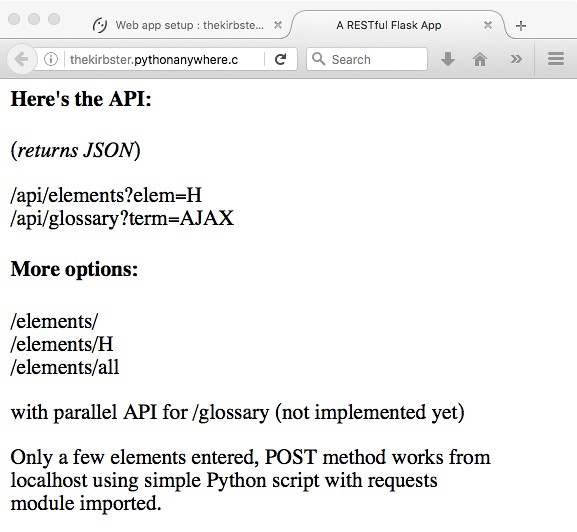

Requests is a Python library used to easily make HTTP requests. Generally, Requests has two main use cases: making requests to an API and getting raw HTML content from websites (i.e., scraping).

Whenever you send any type of request, you should always check the status code (especially when scraping), to make sure your request was served successfully. You can find a useful overview of status codes here. Ideally, you want your status code to be 200 (meaning your request was successful). The status code can also tell you why your request was not served, for example, that you sent too many requests (status code 429) or the infamous not found (status code 404).
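To make that check explicit, here is a small sketch. describe_status and is_success are hypothetical helper names; they rely only on the standard library’s http.HTTPStatus, and the live request at the bottom assumes Requests is installed.

```python
from http import HTTPStatus


def describe_status(code: int) -> str:
    """Return e.g. '200 OK' or '429 Too Many Requests' for a status code."""
    try:
        return f"{code} {HTTPStatus(code).phrase}"
    except ValueError:  # codes the enum does not know about
        return f"{code} (unknown status)"


def is_success(code: int) -> bool:
    """Treat any 2xx code as success."""
    return 200 <= code < 300


if __name__ == "__main__":
    import requests  # third-party; only needed for the live request

    response = requests.get("https://en.wikipedia.org/wiki/Web_scraping", timeout=10)
    print(describe_status(response.status_code))
    if not is_success(response.status_code):
        response.raise_for_status()  # raises requests.HTTPError for 4xx/5xx
```

Requests also offers response.ok, which is True for any status code below 400.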

Use Case 1: API Requests

The Gist above shows a basic API request directed to the NYT API. If you want to replicate this request on your own machine, you have to first create an account at the NYT Dev page and then assign the key you receive to the KEY constant.

The data you receive from a REST API is usually in JSON format, which is typically represented in Python as a dict. Therefore, you will still have to “parse” this data a bit before you actually have it in a table format which can be represented in, e.g., a CSV file; i.e. you have to select which data is relevant for you.
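Since the original Gist is not reproduced here, the following is a minimal sketch of such a request and of the “parsing” step. It assumes the NYT Article Search endpoint (https://api.nytimes.com/svc/search/v2/articlesearch.json) with its q and api-key parameters, and a response shaped like {"response": {"docs": [...]}} whose documents carry headline.main, web_url and pub_date; please check the NYT developer documentation before relying on these names. KEY is a placeholder for your own key, and the "NA" convention is borrowed from the case study below.

```python
import csv
import io

KEY = "YOUR_API_KEY"  # placeholder: the key from your NYT developer account
SEARCH_URL = "https://api.nytimes.com/svc/search/v2/articlesearch.json"  # assumed endpoint


def fetch_articles(query: str, key: str = KEY) -> dict:
    """Send the API request and decode the JSON body into a Python dict."""
    import requests  # third-party; imported here so the parsing code runs without it

    response = requests.get(SEARCH_URL, params={"q": query, "api-key": key}, timeout=10)
    response.raise_for_status()
    return response.json()


def articles_to_rows(payload: dict) -> list:
    """Keep only the fields we care about; missing values become 'NA'."""
    rows = []
    for doc in payload.get("response", {}).get("docs", []):
        rows.append({
            "headline": (doc.get("headline") or {}).get("main", "NA"),
            "url": doc.get("web_url", "NA"),
            "published": doc.get("pub_date", "NA"),
        })
    return rows


def rows_to_csv(rows: list) -> str:
    """Serialise the flattened rows as CSV text."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["headline", "url", "published"])
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


if __name__ == "__main__":
    print(rows_to_csv(articles_to_rows(fetch_articles("web scraping"))))
```

Keeping the network call separate from the flattening makes the parsing step easy to try on a saved response.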

Use Case 2: Scraping

The following lines request the HTML of Wikipedia’s page on web scraping. The status code attribute of the Response object contains the status code related to the request.
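As a minimal sketch of such a request: the User-Agent string is a hypothetical placeholder (identifying your scraper is good manners), the 10-second timeout is my own choice, and the optional session argument exists only so the function can be tried without network access; any object with a compatible get method works.

```python
from typing import Optional, Tuple

URL = "https://en.wikipedia.org/wiki/Web_scraping"
# Hypothetical User-Agent; replace with something that identifies you.
HEADERS = {"User-Agent": "scraping-tutorial/0.1 (contact: you@example.com)"}


def fetch_html(url: str, session: Optional[object] = None) -> Tuple[int, str]:
    """GET a page and return (status code, raw HTML); raises on 4xx/5xx responses."""
    if session is None:
        import requests  # third-party; imported lazily so a fake session can be passed in
        session = requests.Session()
    response = session.get(url, headers=HEADERS, timeout=10)
    response.raise_for_status()
    return response.status_code, response.text


if __name__ == "__main__":
    status, html = fetch_html(URL)
    print(status)      # 200 when the request was served successfully
    print(html[:300])  # still raw HTML, tags and all
```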

After executing these lines, you still only have the raw HTML with all tags included. This is usually not very useful, since most of the time when scraping with Requests, we are looking for specific information and text only, as human readers are not interested in HTML tags or other markup. This is where BeautifulSoup comes in.

BeautifulSoup

BeautifulSoup is a Python library used for parsing documents (i.e. mostly HTML or XML files). Using Requests to obtain the HTML of a page and then parsing whichever information you are looking for from the raw HTML with BeautifulSoup is the quasi-standard web scraping “stack” commonly used by Python programmers for easy-ish tasks.

Going back to the Wikipedia example, the raw HTML returned for the web scraping page can now be parsed with BeautifulSoup.

In this case, BeautifulSoup extracts all headlines, i.e. all headlines listed in the Contents section at the top of the page. Try it out for yourself!
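Here is a minimal sketch of that extraction. It assumes the headline markup Wikipedia used at the time, <span class="mw-headline">; Wikipedia’s HTML has since changed, so inspect the live page and adjust the tag and class. The inline HTML keeps the example runnable offline; in practice you would pass in the text returned by Requests.

```python
from bs4 import BeautifulSoup

# Stand-in for the HTML returned by Requests; assumes the older "mw-headline" markup.
SAMPLE_HTML = """
<h2><span class="mw-headline" id="History">History</span></h2>
<h2><span class="mw-headline" id="Techniques">Techniques</span></h2>
<p>Some <span class="other">unrelated</span> text.</p>
"""


def extract_headlines(html: str) -> list:
    """Return the text of every <span class="mw-headline"> element."""
    soup = BeautifulSoup(html, "html.parser")
    return [span.text for span in soup.find_all("span", class_="mw-headline")]


if __name__ == "__main__":
    print(extract_headlines(SAMPLE_HTML))  # ['History', 'Techniques']
```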

As you can see below, you can easily find the class attribute of an HTML element using the inspector of any web browser.

Figure 1: Finding HTML elements on Wikipedia using the Chrome inspector.

This kind of matching is, in my opinion, one of the easiest ways to use BeautifulSoup: you simply specify the HTML tag (in this case, span) and another attribute of the content which you want to find (in this case, this other attribute is class). This allows you to match arbitrary sections of almost any webpage. For more complicated matches, you can also use regular expressions (regex).

Once you have the elements from which you would like to extract the text, you can access it via their text attribute.
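For the regex variant, BeautifulSoup accepts a compiled pattern wherever it accepts a string. The sketch below matches any span with a class starting with "mw-head" and reads each match’s text; the class names are made up for illustration, and get_text(strip=True) is shown as a tidier alternative to the plain text attribute.

```python
import re

from bs4 import BeautifulSoup

# Hypothetical markup: two headline variants plus one element that should not match.
HTML = """
<span class="mw-headline">History</span>
<span class="mw-headline-legacy">  Techniques  </span>
<span class="caption">Figure 1</span>
"""


def headline_texts(html: str) -> list:
    soup = BeautifulSoup(html, "html.parser")
    # A compiled regex is tested against each CSS class the tag has
    matches = soup.find_all("span", class_=re.compile(r"^mw-head"))
    # .text keeps surrounding whitespace; get_text(strip=True) trims it
    return [tag.get_text(strip=True) for tag in matches]


if __name__ == "__main__":
    print(headline_texts(HTML))  # ['History', 'Techniques']
```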

Inspector

As a short interlude, it’s important to give a brief introduction to the Dev tools in Chrome (comparable tools are available in most browsers; I just chose to use Chrome). They include the Inspector, which gives you access to a website’s HTML and also lets you copy attributes such as the XPath and CSS selector. All of these can be helpful or even necessary in the scraping process (especially when using Selenium). The workflow in the case study should give you a basic idea of how to work with the Inspector. For more detailed information on the Inspector, Google’s official Chrome DevTools documentation contains plenty of information.

Figure 2 shows the basic interface of the Inspector in Chrome.

Figure 2: Chrome Inspector on Wikipedia.

Sequoia Capital Case Study

I actually first wanted to do this case study with the New York Times, since they have an API and thus the results received from the API could have been compared to the results from scraping. Unfortunately, most news organizations have very restrictive robots.txt files, specifically not permitting searching for articles. Therefore, I decided to scrape the portfolio of one of the big VC firms in the US, Sequoia, since their robots.txt is permissive and I also think that startups and the venture capital scene are very interesting in general.

First, let’s have a look at Sequoia’s robots.txt:

Luckily, they permit access of various kinds – except three URLs, which is fine for our purposes. We will still build in a crawl delay of 15-30 seconds between each request.
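One simple way to build in that delay is to sleep for a random duration between requests, which also makes the request pattern less mechanical. In the sketch below, polite_pause is a hypothetical helper, the URLs are placeholders, and the sleep function is a parameter only so the logic can be checked without actually waiting.

```python
import random
import time
from typing import Callable


def polite_pause(min_seconds: float = 15, max_seconds: float = 30,
                 sleep: Callable[[float], None] = time.sleep) -> float:
    """Sleep for a random duration in [min_seconds, max_seconds] and return it."""
    delay = random.uniform(min_seconds, max_seconds)
    sleep(delay)
    return delay


if __name__ == "__main__":
    urls = ["https://www.example.com/a", "https://www.example.com/b"]  # placeholders
    for url in urls:
        print("would request", url)
        print(f"slept {polite_pause():.1f}s")
```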

Next, let’s scope out the actual data which we are trying to scrape. We are interested in the portfolio of Sequoia, so their portfolio page is the URL we are after.

Figure 3: Sequoia’s portfolio.

The companies are nicely laid out in a grid, making them pretty easy to scrape. Upon clicking, the page shows the details of each company. Also, notice how the URL changes in Figure 4 when clicking on a company! This is especially important for Requests, since it means each company’s detail page has its own URL that we can request directly.

Figure 4: Detail page on one of Sequoia’s portfolio companies.

Let’s aim for collecting the following basic information on each company and outputting them as a CSV file:

- Name of the company
- URL of the company
- Description
- Milestones
- Team
- Partner

If any of this information is not available for a company, we will simply append the string “NA” instead.

Time to start inspecting!

Scraping Process

Company Name

Upon inspecting the grid, it looks like the information on each company is contained within a div tag with the class companies__company js-company. Thus we should just be able to look for this combination with BeautifulSoup.

Figure 5: Grid structure of each company.

This still leaves us with all the other information missing, though, meaning we have to somehow access the detail page of each company. Notice how in Figure 5 above, each company div has an attribute called data-url. For 100 Thieves, for example, data-url has the value /companies/100-thieves/. That’s just what we need!

Now, all we have to do is append this data-url value for each company to the base URL of Sequoia’s website, and we can send yet another request to access the detail page of each company.

So let’s write some code to first get the company name and then send another request to the detail page of each company. I will write code interspersed with text here; for the full script, check my GitHub.

First of all, we take care of all the imports and set up any variables we might need. We also send our first request to the base URL, which contains the grid with all companies, and instantiate a BeautifulSoup parser.

After we have taken care of basic bookkeeping and set up the dictionary in which we want to store the scraped data, we can start working on the actual scraping, first parsing the class shown in Figure 5. As you can see in Figure 5, once we have selected the div tag with the matching class, we have to go to its first div child and then select its text, which contains the name of the company.
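Since the setup code and the first parsing step are not reproduced here, this is a minimal sketch of them. It selects grid cells by the js-company class described above, takes the name from the first child div and turns each cell’s data-url into an absolute detail-page URL with urljoin. BASE_URL is a hypothetical placeholder for Sequoia’s base URL, and the inline HTML is a simplified stand-in for the real grid.

```python
from urllib.parse import urljoin

from bs4 import BeautifulSoup

BASE_URL = "https://www.example.com/"  # hypothetical placeholder for the portfolio site's base URL

# Simplified stand-in for the grid HTML; structure assumed from the description above.
GRID_HTML = """
<div class="companies__company js-company" data-url="/companies/100-thieves/">
  <div>100 Thieves</div><div>Other info</div>
</div>
<div class="companies__company js-company" data-url="/companies/acme/">
  <div>Acme</div>
</div>
"""


def parse_grid(html: str, base_url: str = BASE_URL) -> list:
    """Return (company name, absolute detail-page URL) pairs from the grid."""
    soup = BeautifulSoup(html, "html.parser")
    companies = []
    for cell in soup.select("div.js-company"):
        name = cell.find("div").get_text(strip=True)      # first child div holds the name
        detail_url = urljoin(base_url, cell["data-url"])  # relative data-url -> absolute URL
        companies.append((name, detail_url))
    return companies


if __name__ == "__main__":
    for name, url in parse_grid(GRID_HTML):
        print(name, url)
```

Each detail URL would then be requested (with a pause in between) and parsed for the remaining fields.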

On the detail page, we have basically everything we wanted. Since we already have the name of the company, all we still need are URL, description, milestones, team and the respective partner from Sequoia.

Company URL

For the URL, we should just be able to find elements by their class and then select the first element, since it seems like the website is always the first social link. You can see the inspector view in Figure 6.

Figure 6: The first social link ordinarily contains the company URL.

But wait – what if there are no social links or the company website is not provided? In the case that the website is not provided, but a social media page is, we will simply consider this social media link the company’s de facto website. If there are no social links provided at all, we have to append an NA. This is why we check explicitly for the number of objects found because we cannot access the href attribute of a tag that doesn’t exist. An example of a company without a URL is shown in Figure 7.

Figure 7: Company without social links.
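A minimal sketch of this fallback logic follows. The class name social-link is a hypothetical stand-in (the real class is visible in Figure 6 but not named in the text). The sketch also treats a link whose href is blank as missing, which covers the Pixelworks case discussed further down.

```python
from bs4 import BeautifulSoup


def company_url(detail_html: str, link_class: str = "social-link") -> str:
    """Return the href of the first social link, or 'NA' if there is none.

    link_class is a hypothetical class name; substitute the one from the inspector.
    """
    soup = BeautifulSoup(detail_html, "html.parser")
    links = soup.find_all("a", class_=link_class)
    if len(links) == 0:  # no social links at all
        return "NA"
    href = links[0].get("href", "").strip()
    return href if href else "NA"  # a blank href is as good as no link


if __name__ == "__main__":
    print(company_url('<a class="social-link" href="https://acme.example">site</a>'))
    print(company_url("<p>No links here</p>"))
```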

Company description

As you can see in Figure 8, the p tag containing the company description does not have any additional identifiers; therefore, we are forced to first access the div tag above it, then go down to the p tag containing the description and select its text attribute.

Figure 8: Company description.
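The parent-then-child navigation can be sketched as follows. The container class company-description is a hypothetical placeholder for whatever identifier the parent div actually has, and the sketch returns "NA" when either element is missing.

```python
from bs4 import BeautifulSoup

# Hypothetical markup: the class name is a placeholder for the one seen in the inspector.
DETAIL_HTML = """
<div class="company-description">
  <p>  Acme builds rocket-powered roller skates.  </p>
</div>
"""


def company_description(html: str, container_class: str = "company-description") -> str:
    """Find the identifiable parent div, then its unlabeled <p>; 'NA' if either is missing."""
    soup = BeautifulSoup(html, "html.parser")
    container = soup.find("div", class_=container_class)
    if container is None:
        return "NA"
    paragraph = container.find("p")  # the <p> has no identifiers of its own
    return paragraph.get_text(strip=True) if paragraph is not None else "NA"


if __name__ == "__main__":
    print(company_description(DETAIL_HTML))
```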

Milestones, Team & Partner(s)

The last three elements are all located in the same structure and can thus be accessed in the same manner. We will simply match the text of their parent element and then work our way down from there.

Since the specific text elements do not have good identifying characteristics, we match the text of their parent element. Once we have the text, we go up two levels by using the parent attribute. This brings us to the div tag belonging to this specific category (e.g. Milestones or Team). Now all that is left to do is to go down to the ul tag containing the actual text we are interested in and get its text.

Figure 9: Combining a text match with the parent attributes allows the acquisition of text without proper identifying characteristics.

One issue with using text matching is that only exact matches are found. This matters in cases where the string you are looking for might differ slightly between pages. As you can see in Figure 10, this applies to our case study here for the text Partner: if a company has more than one Sequoia partner assigned to it, the string is “Partners” instead of “Partner”. Therefore, we use a regular expression when searching for the partner element to get around this.

Figure 10: Exact string matching can lead to problems if there are minor differences in between HTML pages.

Last but not least, it is not guaranteed that all the elements we want (i.e. Milestones, Team, and Partner) are in fact available for each company. Thus, before actually selecting the element, we first find all elements matching the string and then check the length. If there are no matching elements, we append NA; otherwise, we get the requisite information.

For Partner, there is always at least one matching element, so we assume no partner information is available if there are one or fewer matches. I believe the reason that one element matching Partner always shows up is the “Filter by Partner” option shown in Figure 11. As you can see, scraping often requires some iteration to find potential issues with your script.

Figure 11: Filter by partner option.
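The text-matching approach can be sketched as follows. The markup is a hypothetical simplification of Figures 9 to 11 (a label inside an h3, directly under the category div, followed by a ul), so the number of parent hops and the tag names are assumptions to verify in the inspector. For Partner(s), the regex ^Partners?$ covers both spellings, at least two matches are required because one is always the filter option, and the sketch takes the last match on the assumption that the filter option comes first in the page.

```python
import re

from bs4 import BeautifulSoup

# Hypothetical, simplified detail-page markup modelled on Figures 9 to 11.
DETAIL_HTML = """
<div class="filter"><label>Partner</label></div>
<div class="category"><h3>Milestones</h3><ul><li>Founded 2017</li><li>Series A</li></ul></div>
<div class="category"><h3>Team</h3><ul><li>Jane Doe</li></ul></div>
<div class="category"><h3>Partners</h3><ul><li>Partner One</li><li>Partner Two</li></ul></div>
"""

PARTNER_RE = re.compile(r"^Partners?$")  # matches "Partner" and "Partners"


def section_text(soup, label, min_matches=1):
    """Match a label's text, climb two levels to its category div, read the <ul> below."""
    matches = soup.find_all(string=label)
    if len(matches) < min_matches:
        return "NA"
    # Take the last match: assumes category headings come after any filter widget.
    category_div = matches[-1].parent.parent  # text -> <h3> -> category <div>
    items = category_div.find("ul")
    return items.get_text(" ", strip=True) if items else "NA"


def scrape_sections(html):
    soup = BeautifulSoup(html, "html.parser")
    return {
        "milestones": section_text(soup, "Milestones"),
        "team": section_text(soup, "Team"),
        # One match is always the "Filter by Partner" option, so require at least two.
        "partner": section_text(soup, PARTNER_RE, min_matches=2),
    }


if __name__ == "__main__":
    print(scrape_sections(DETAIL_HTML))
```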

Writing to disk

To wrap up, we append all the information corresponding to a company to the list it belongs to within our dictionary. Then we convert this dictionary into a pandas DataFrame before writing it to disk.
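A minimal sketch of this last step: a dict with one list per field, one append per company, then pandas for the CSV. The column names and the output path are hypothetical, and add_company also turns blank strings into "NA", similar to the blank-URL replacement the author mentions further down.

```python
import pandas as pd

# Hypothetical column names for the six fields listed earlier.
COLUMNS = ["name", "url", "description", "milestones", "team", "partner"]


def new_store() -> dict:
    """One list per column; every company appends exactly one value to each list."""
    return {column: [] for column in COLUMNS}


def add_company(store: dict, **fields: str) -> None:
    """Append one company's values; missing or blank values are stored as 'NA'."""
    for column in COLUMNS:
        value = fields.get(column) or "NA"  # None and "" both become "NA"
        store[column].append(value)


def write_csv(store: dict, path_or_buffer) -> pd.DataFrame:
    """Convert the dict of lists to a DataFrame and write it without the index column."""
    df = pd.DataFrame(store, columns=COLUMNS)
    df.to_csv(path_or_buffer, index=False)
    return df


if __name__ == "__main__":
    store = new_store()
    add_company(store, name="Acme", url="https://acme.example", team="Jane Doe")  # made-up values
    print(write_csv(store, "portfolio.csv"))  # hypothetical output path
```

Keeping every list the same length is what lets pandas turn the dict straight into rows.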

Success! We just collected all* of Sequoia’s portfolio companies and information on them.

* Well, all on their website at least; I believe they have separate sites for, e.g., India and Israel.

Let’s have a look at the data we just scraped:

Looks good! We collected 506 companies in total and the data quality looks really good as well. The only thing I noticed is that some companies have a social link but it does not actually go anywhere. Refer to Figure 12 for an example of this with Pixelworks. The issue is that Pixelworks has a social link but this social link does not actually contain a URL (the href target is blank) and simply links back to the Sequoia portfolio.

Figure 12: Company with a social link but without a URL.

I have added code in the script to replace the blanks with NAs but have left the data as is above to illustrate this point.

Conclusion

With this blog post, I wanted to give you a decent introduction to web scraping in general and specifically using Requests & BeautifulSoup. Now go use it in the wild by scraping some data that can be of use to you or someone you know! But always make sure to read and respect both the robots.txt and the terms and conditions of whichever page you are scraping.

Furthermore, you can check out resources and tutorials on some of the other methods shown above, such as Scrapy and Selenium, on their official websites. You should also challenge yourself by scraping some more dynamic sites which you cannot scrape using only Requests.

If you have any questions, found a bug or just feel like chatting about all things Python and scraping, feel free to contact me at

David Wissel: I am a data scientist intern at STATWORX and enjoy all things Python, Statistics and Computer Science. During my time off, I like running (semi-) long distance races.

STATWORX is a consulting company for data science, statistics, machine learning and artificial intelligence located in Frankfurt, Zurich and Vienna. If you have questions or suggestions, please write us an e-mail addressed to blog(at)

Write You a Web Crawler – Unhackathon

Note: This tutorial uses the Unhackathon website as our example starting point for web crawling. Please be aware that some of the sample outputs may be a bit different, since the Unhackathon website is updated occasionally.

This springboard project will have you build a simple web crawler in Python using the Requests library. Once you have implemented a basic web crawler and understand how it works, you will have numerous opportunities to expand your crawler to solve interesting problems.

Assumptions

This tutorial assumes that you have Python 3 installed on your machine. If you do not have Python installed (or you have an earlier version installed), you can find the latest Python builds on the official Python website. Make sure the correct version is on your PATH.

We will use pip to install packages. Make sure you have that installed as well. Sometimes this is installed as pip3 to differentiate between versions of pip built with Python 2 or Python 3; if this is the case, be mindful to use the pip3 command instead of pip while following along in the tutorial.

We also assume that you’ll be working from the command line. You may use an IDE if you choose, but some aspects of this guide will not apply.

This guide assumes only basic programming ability and knowledge of data structures and Python. If you’re more advanced, feel free to use it as a reference rather than a step-by-step tutorial. If you haven’t used Python and can’t follow along, check out the official Python tutorial and/or Codecademy’s Python class.

Setting up your project

Let’s get the basic setup out of the way now. (Next we’ll give a general overview of the project, and then we’ll jump into writing some code.)

If you’re on OS X or Linux, type the following in terminal:

mkdir webcrawler

cd webcrawler

pip3 install virtualenv

virtualenv -p python3 venv

source venv/bin/activate

pip3 install requests

If you are a Windows user, replace source venv/bin/activate with \path\to\venv\Scripts\activate.

If pip and/or virtualenv cannot be found, you’ll need to update your $PATH variable or use the full path to the program.

You’ve just made a directory to hold your project, set up a virtual environment in which your Python packages won’t interfere with those in your system environment, and installed Requests, the “HTTP for Humans” library for Python, which is the primary library we’ll be using to build our web crawler. If you’re confused by any of this, you may want to ask a mentor to explain bash and/or package managers. You might also have issues due to system differences; let us know if you get stuck.

Web crawler overview

Web crawlers are pretty simple, at least at first pass (like most things, they get harder once you start to take things like scalability and performance into consideration). Starting from a certain URL (or a list of URLs), they will check the HTML document at each URL for links (and other information) and then follow those links to repeat the process. A web crawler is the basis of many popular tools such as search engines (though search engines such as Google have much harder problems, such as “How do we index this information so that it is searchable?”).

Making our first HTTP request

Before we can continue, we need to know how to make an HTTP request using the Requests library and, also, how to manipulate the data we receive from the response to that request.

In a text editor, create a new Python file. We’ll now edit that file:

import requests

r = requests.get('')  # the Unhackathon home page URL goes between the quotes

This code gives us access to the Requests library on line one and uses the get method from that library to create a Response object called r.

If we enter the same code in the Python interactive shell (type python3 in the terminal to access the Python shell) we can examine r in more depth:

python3

>>> import requests
>>> r = requests.get('')  # the Unhackathon home page URL goes between the quotes
>>> r
<Response [200]>

Entering the variable r, we are told that we have a Response object with status code 200. (If you get a different status code, the Unhackathon website might be down.) 200 is the standard response for successful HTTP requests. The actual response will depend on the request method used. In a GET request, the response will contain an entity corresponding to the requested resource. In a POST request, the response will contain an entity describing or containing the result of the action. Our requests.get call makes an HTTP GET request under the surface, so our response contains the Unhackathon home page and associated metadata.

>>> dir(r)

['__attrs__', '__bool__', '__class__', '__delattr__', '__dict__', '__dir__', '__doc__', '__eq__', '__format__', '__ge__', '__getattribute__', '__getstate__', '__gt__', '__hash__', '__init__', '__iter__', '__le__', '__lt__', '__module__', '__ne__', '__new__', '__nonzero__', '__reduce__', '__reduce_ex__', '__repr__', '__setattr__', '__setstate__', '__sizeof__', '__str__', '__subclasshook__', '__weakref__', '_content', '_content_consumed', 'apparent_encoding', 'close', 'connection', 'content', 'cookies', 'elapsed', 'encoding', 'headers', 'history', 'is_permanent_redirect', 'is_redirect', 'iter_content', 'iter_lines', 'json', 'links', 'ok', 'raise_for_status', 'raw', 'reason', 'request', 'status_code', 'text', 'url']

We can examine r in more detail with Python’s built-in function dir as above. From the list of properties above, it looks like ‘content’ might be of interest.

>>> r.content
b'\n\n\n \n \n \n \n \n \n \n\n...

Indeed, it is. Our r.content object contains the HTML from the Unhackathon home page. This will be helpful in the next section.

Before we go on though, perhaps you’d like to become acquainted with some of the other properties. Knowing what data is available might help you as you think of ways to expand this project after the tutorial is completed.

Finding URLs

Before we can follow new links, we must extract the URLs from the HTML of the page we’ve already requested. The regular expressions library in Python is useful for this.

>>> import re
>>> links = re.findall(r'<a href=["\']?([^"\'>]*)["\']?>(.*?)</a>', str(r.content))
>>> links
[('/code-of-conduct/', 'Code of Conduct'), ..., ('/faq/', 'frequently asked questions'), ...]

We pass re.findall our regular expression, capturing the URL and the link text, though we only really need the former. It also gets passed the r.content object that we are searching in, which needs to be cast to a string. We are returned a list of tuples containing the strings captured by the regular expression.

There are two main things to note here, the first being that some of our URLs are for protocols other than HTTP. It doesn’t make sense to access these with an HTTP request, and doing so will only result in an exception. So before we move on, you’ll want to eliminate the strings beginning with “mailto”, “ftp”, “file”, etc. Similarly, links pointing to 127.0.0.1 or localhost are of little use to us if we want our results to be publicly accessible.

The second thing to note is that some of our URLs are relative, so we can’t use them directly as our argument to requests.get. We will need to take the relative URLs and append them to the URL of the page we are on when we find those relative URLs. For example, we found a ‘/faq/’ URL above; this will need to become the full URL of the FAQ page on the Unhackathon site. This is usually simple string concatenation, but be careful: a relative URL may indicate a parent directory (using two dots), and then things become more complicated. See if you can come up with a solution.
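One possible solution to both issues uses the standard library’s urllib.parse.urljoin, which resolves relative links (including ones with ..) against the page they were found on. The filtering rules below (keep only http/https, skip localhost and 127.0.0.1, drop #fragments, remove duplicates) are one reasonable choice, not the tutorial’s official answer, and the example URLs are made up.

```python
from urllib.parse import urldefrag, urljoin, urlparse

LOCAL_HOSTS = {"localhost", "127.0.0.1"}


def normalize_links(page_url: str, hrefs: list) -> list:
    """Turn raw href values into absolute, crawlable http(s) URLs (duplicates removed)."""
    result = []
    for href in hrefs:
        # Resolve relative links against the current page, then drop any #fragment
        absolute, _fragment = urldefrag(urljoin(page_url, href.strip()))
        parsed = urlparse(absolute)
        if parsed.scheme not in ("http", "https"):
            continue  # mailto:, ftp:, file:, javascript:, ...
        if parsed.hostname in LOCAL_HOSTS:
            continue  # not publicly reachable
        if absolute not in result:
            result.append(absolute)
    return result


if __name__ == "__main__":
    print(normalize_links("https://example.org/events/2019/",
                          ["/code-of-conduct/", "../faq/", "mailto:hi@example.org"]))
```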

Following URLs

We hopefully now have a list of full URLs. We’ll now recursively request the content at those URLs and extract new URLs from that content.

We’ll want to maintain a list of URLs we’ve already requested (this is mainly what we’re after at this point, and it also helps prevent us from getting stuck in a non-terminating loop) and a list of valid URLs we’ve discovered but have yet to request.

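import re

import requests


def crawl_web(initial_url):
    crawled, to_crawl = [], []
    to_crawl.append(initial_url)

    while to_crawl:
        current_url = to_crawl.pop(0)
        r = requests.get(current_url)
        crawled.append(current_url)
        for url in re.findall('<a href="([^"]+)">', str(r.content)):
            if url[0] == '/':
                url = current_url + url  # naive join for relative URLs
            pattern = re.compile('https?')
            # skip non-HTTP links and URLs we have already crawled or queued
            if pattern.match(url) and url not in crawled and url not in to_crawl:
                to_crawl.append(url)

    return crawled


print(crawl_web(''))  # the starting URL goes between the quotes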

This code will probably have issues if we feed it a page for the initial URL that is part of a site that isn’t self-contained. (Say, for instance, that the site links to an external site, which links to yet another site, and so on; we’ll never reach the last page, since we’ll always be adding new URLs to our to_crawl list.) We’ll discuss several strategies to deal with this issue in the next section.

Strategies to prevent never ending processes

Counter

Perhaps we are satisfied once we have crawled n sites. Modify crawl_web to take a second argument (an integer n) and add logic to the loop so that we return our list once its length meets our requirement.
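One possible answer to the counter exercise, as a sketch: the only real change is the extra len(crawled) < n condition on the loop. It uses the tutorial’s simple link regex, swaps in urljoin for relative links instead of plain concatenation, and takes a hypothetical fetch parameter (any function from URL to HTML) so the crawler can be run against an in-memory dict of pages instead of the live web.

```python
import re
from typing import Callable, List
from urllib.parse import urljoin

LINK_RE = re.compile(r'<a href="([^"]+)">')  # same simple pattern style as the tutorial


def fetch_page(url: str) -> str:
    """Download a page's HTML (requires the third-party requests package)."""
    import requests
    return requests.get(url, timeout=10).text


def crawl_web(initial_url: str, n: int, fetch: Callable[[str], str] = fetch_page) -> List[str]:
    """Breadth-first crawl that stops once n pages have been crawled."""
    crawled, to_crawl = [], [initial_url]
    while to_crawl and len(crawled) < n:  # the counter check
        current_url = to_crawl.pop(0)
        html = fetch(current_url)
        crawled.append(current_url)
        for href in LINK_RE.findall(html):
            url = urljoin(current_url, href)  # resolve relative links
            if url.startswith("http") and url not in crawled and url not in to_crawl:
                to_crawl.append(url)
    return crawled
```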

Timer

Perhaps we have an allotted time in which to run our program. Instead of passing in a maximum number of URLs we can pass in any number of seconds and revise our program to return the list of crawled URLs once that time has passed.
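A sketch of the timer variant: the loop condition compares a monotonic clock against a deadline. The fetch and clock parameters are hypothetical hooks for trying the function offline. Note that a single slow request can still overrun the deadline, which is one reason to pass a timeout to requests.get in a real fetch function.

```python
import re
import time
from typing import Callable, List
from urllib.parse import urljoin

LINK_RE = re.compile(r'<a href="([^"]+)">')


def crawl_for(initial_url: str, seconds: float, fetch: Callable[[str], str],
              clock: Callable[[], float] = time.monotonic) -> List[str]:
    """Crawl breadth-first until `seconds` have elapsed or nothing is left to crawl."""
    deadline = clock() + seconds
    crawled, to_crawl = [], [initial_url]
    while to_crawl and clock() < deadline:  # the timer check
        current_url = to_crawl.pop(0)
        html = fetch(current_url)
        crawled.append(current_url)
        for href in LINK_RE.findall(html):
            url = urljoin(current_url, href)
            if url.startswith("http") and url not in crawled and url not in to_crawl:
                to_crawl.append(url)
    return crawled
```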

Generators

We can also use generators to receive results as they are discovered, instead of waiting for all of our code to run and return a list at the end. This doesn’t solve the issue of a long running process (though one could terminate the process by hand with Ctrl-C) but it is useful for seeing our script’s progress.

url = current_url + url[1:]

yield current_url

crawl_web_generator = crawl_web('')  # the starting URL goes between the quotes

for result in crawl_web_generator:
    print(result)

Generators were introduced with PEP 255. You can find more about them by Googling for ‘python generators’ or ‘python yield’.

Perhaps you should combine the approach of using generators with another approach. Also, can you think of any other methods that may be of use?
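As an example of combining approaches, a generator pairs naturally with itertools.islice, which gives you the counter strategy for free: the generator only fetches another page when the consumer asks for the next result. The sketch assumes a hypothetical fetch parameter (any function from URL to HTML) and the tutorial’s simple link regex.

```python
import re
from itertools import islice
from typing import Callable, Iterator
from urllib.parse import urljoin

LINK_RE = re.compile(r'<a href="([^"]+)">')


def crawl_web(initial_url: str, fetch: Callable[[str], str]) -> Iterator[str]:
    """Yield each URL as soon as it has been crawled."""
    crawled, to_crawl = set(), [initial_url]
    while to_crawl:
        current_url = to_crawl.pop(0)
        html = fetch(current_url)
        crawled.add(current_url)
        yield current_url  # execution pauses here until the next result is requested
        for href in LINK_RE.findall(html):
            url = urljoin(current_url, href)
            if url.startswith("http") and url not in crawled and url not in to_crawl:
                to_crawl.append(url)


if __name__ == "__main__":
    import requests  # third-party; only needed for the live crawl

    fetch = lambda url: requests.get(url, timeout=10).text
    for url in islice(crawl_web("https://www.example.com/", fetch), 5):  # at most 5 pages
        print(url)
```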

robots.txt is a standard for asking “robots” (web crawlers and similar tools) not to crawl certain sites or pages. While it’s easy to ignore these requests, it’s generally a nice thing to account for. robots.txt files are found in the root directory of a site, so before you crawl, it’s a simple matter to check for any exclusions. To keep things simple, you are looking for the following directives:

User-agent: *

Disallow: /

Pages may also allow/disallow certain pages instead of all pages. Check out the robots.txt of a large site for an example.
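To plug such a check into a crawler, one option is to fetch and parse each site’s robots.txt once and cache the parser per host. The sketch below uses the standard library’s urllib.robotparser. RobotsCache and fetch_text are hypothetical names, and treating a missing robots.txt (or any non-200 response in requests_fetch_text) as “no rules” is a simplification; server errors, for instance, arguably call for more caution.

```python
from typing import Callable, Dict, Optional
from urllib.parse import urlparse
from urllib.robotparser import RobotFileParser


class RobotsCache:
    """Fetch and parse each site's robots.txt once, then answer can_fetch queries."""

    def __init__(self, user_agent: str, fetch_text: Callable[[str], Optional[str]]):
        self.user_agent = user_agent
        self.fetch_text = fetch_text  # returns robots.txt text, or None if missing
        self.parsers: Dict[str, RobotFileParser] = {}

    def allowed(self, url: str) -> bool:
        parts = urlparse(url)
        root = f"{parts.scheme}://{parts.netloc}"
        if root not in self.parsers:
            parser = RobotFileParser()
            text = self.fetch_text(root + "/robots.txt")
            parser.parse((text or "").splitlines())  # no file -> no rules -> allowed
            self.parsers[root] = parser
        return self.parsers[root].can_fetch(self.user_agent, url)


def requests_fetch_text(url: str) -> Optional[str]:
    """Live fetcher (requires the third-party requests package)."""
    import requests
    response = requests.get(url, timeout=10)
    return response.text if response.status_code == 200 else None
```

In the crawler, you would then only append a URL to to_crawl if cache.allowed(url) is True.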

Further Exercises

Modify your program to follow robots.txt rules if found.

Right now, our regular expression will not capture links that are more complicated than a plain <a href="...">. For example, a link such as <a class="..." href="..."> will fail because we do not allow for anything but a space between a and href. Modify the regular expression to make sure we’re following all the links.
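One possible answer, as a sketch: allow any other attributes before href, optional quotes, extra whitespace and uppercase tags. Requiring whitespace directly before href avoids matching attributes such as data-href. It is still a heuristic; an HTML parser (such as BeautifulSoup or the standard library’s html.parser) is the more robust route.

```python
import re

# Other attributes may precede href; quotes are optional; matching ignores case.
LINK_RE = re.compile(r"""<a(?:\s[^>]*?)?\shref\s*=\s*["']?([^"'\s>]+)""", re.IGNORECASE)


def find_links(html: str) -> list:
    """Return the href value of every <a> tag the pattern recognises."""
    return LINK_RE.findall(html)


if __name__ == "__main__":
    print(find_links("<a class='nav' href='/faq/'>FAQ</a>"))  # ['/faq/']
```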

Our program involves a graph traversal. Right now our algorithm resembles breadth-first search. What simple change can we make to get depth-first search? Can you think of a scenario where this makes a difference?
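The difference comes down to which end of the frontier you take the next page from: the front (a queue, breadth-first) or the back (a stack, depth-first). The sketch below runs on a made-up link graph; since it marks pages as seen when they are queued, like the tutorial’s to_crawl check, its depth-first order can differ from a textbook recursive DFS on some graphs.

```python
from collections import deque
from typing import Dict, List


def traverse(graph: Dict[str, List[str]], start: str, depth_first: bool = False) -> List[str]:
    """Visit pages from start; only the end of the frontier we pop from changes."""
    frontier = deque([start])
    seen = {start}
    order = []
    while frontier:
        page = frontier.pop() if depth_first else frontier.popleft()  # stack vs queue
        order.append(page)
        for link in graph.get(page, []):
            if link not in seen:
                seen.add(link)
                frontier.append(link)
    return order


GRAPH = {"home": ["a", "b"], "a": ["a1"], "b": ["b1"], "a1": [], "b1": []}  # made-up site
```

With a limit on the number of pages, breadth-first stays close to the start page, while depth-first can wander far down a single chain of links.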

In addition to each page’s URL, also print its title and the number of child links, in CSV format.
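A sketch for this exercise: a regex pulls out the title, the tutorial’s simple link pattern counts child links, and the standard library’s csv module handles quoting (titles often contain commas). page_summary and write_csv are hypothetical names, and the example pages are made up.

```python
import csv
import re
import sys

TITLE_RE = re.compile(r"<title[^>]*>(.*?)</title>", re.IGNORECASE | re.DOTALL)
LINK_RE = re.compile(r'<a href="([^"]+)">')


def page_summary(url: str, html: str) -> list:
    """Return [url, title, number of child links] for one crawled page."""
    match = TITLE_RE.search(html)
    title = match.group(1).strip() if match else ""
    return [url, title, len(LINK_RE.findall(html))]


def write_csv(rows, out=sys.stdout) -> None:
    """Write a header plus one row per page; csv quotes fields that need it."""
    writer = csv.writer(out)
    writer.writerow(["url", "title", "child_links"])
    writer.writerows(rows)


if __name__ == "__main__":
    pages = {"https://a.example/": '<title>Home</title><a href="/b">b</a>'}  # made-up page
    write_csv(page_summary(url, html) for url, html in pages.items())
```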

Instead of CSV format, print results in JSON. Can you print a single JSON document while using generators? You can validate your JSON with a JSON validator.
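Yes: one way is to write the opening bracket yourself, emit each record as it arrives (with a comma before every record except the first) and close the bracket at the end. The sketch below does this with json.dumps; stream_json_array is a hypothetical name, and json.loads on the result doubles as a quick validity check.

```python
import json
import sys
from typing import Iterable, TextIO


def stream_json_array(records: Iterable[dict], out: TextIO = sys.stdout) -> None:
    """Write records as one JSON array, emitting each element as soon as it arrives."""
    out.write("[")
    for index, record in enumerate(records):
        if index:
            out.write(",")  # separator before every element except the first
        out.write("\n  " + json.dumps(record))
    out.write("\n]\n")


if __name__ == "__main__":
    stream_json_array({"url": u} for u in ["https://a.example/", "https://b.example/"])
```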

Conclusion

This concludes the tutorial. We hope it illustrated the basic concepts at work in building a web crawler. Perhaps now is a good time to step back and review your code. You might want to do some refactoring, or even write some tests to help prevent you from breaking what you have working now as you modify it to expand its functionality.

As you consider where to go next, remember we’re available to answer any questions you might have. Cheers!

The tutorial above was just intended to get you started. Now that you’ve completed it, there are many options for branching off and creating something of your own. Here are some ideas:

Import your JSON into a RethinkDB database and then create an app that queries against that database. Or analyze the data with a number of queries and visualize the results.

Analyze HTTP header fields. For example, one could compile statistics on different languages used on the backend using the X-Powered-By field.

Implement the functionality of Scrapy using a lower level library, such as Requests.

Set up your web crawler to repeatedly crawl a site at set intervals to check for new pages or changes to content. List the URLs of changed/added/deleted pages or perhaps even a diff of the changes. This could be part of a tool to detect malicious changes on hacked websites or to hold news sites accountable for unannounced edits or retractions.

Use your crawler to monitor a site or forum for mentions of your name or internet handle. Trigger an email or text notification whenever someone uses your name.

Maintain a graph of the links you crawl and visualize the connectedness of certain websites.

Scrape photos of animals from animal shelter websites and create a site displaying their information.

Create a price comparison website. Scrape product names and prices from different online retailers.

Create a tool to map public connections between people (Twitter follows, blogrolls, etc.) for job recruiting, marketing, or sales purposes.

Look for certain words or phrases across the web to answer questions such as “Which house in Harry Potter is mentioned the most?” or “How often are hashtags used outside of social media?”

Starting from a list of startups, crawl their sites for pages mentioning “job”/“careers”/“hiring” and scrape job listings from those pages. Use these to create a job board.

Or perhaps you have an idea of your own. If so, we look forward to hearing about it!
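The change-monitoring idea above reduces to comparing two snapshots of a site. That idea is recrawling a site at set intervals and listing changed, added, or deleted pages, perhaps with a diff. Below is a minimal sketch of that comparison step. It assumes each crawl has already been stored as a dict mapping URL to page content. The names `fingerprint` and `compare_snapshots` are hypothetical, and scheduling and fetching are left out.

```python
import difflib
import hashlib


def fingerprint(content):
    """SHA-256 digest of a page; keeping only digests is enough to spot changes."""
    return hashlib.sha256(content.encode("utf-8")).hexdigest()


def compare_snapshots(old, new):
    """Compare two crawls, each a dict mapping URL -> page content."""
    added = sorted(new.keys() - old.keys())
    deleted = sorted(old.keys() - new.keys())
    changed = sorted(
        url for url in old.keys() & new.keys()
        if fingerprint(old[url]) != fingerprint(new[url])
    )
    # A line-by-line unified diff for each changed page.
    diffs = {
        url: "\n".join(difflib.unified_diff(
            old[url].splitlines(), new[url].splitlines(),
            fromfile=url + " (before)", tofile=url + " (after)", lineterm=""))
        for url in changed
    }
    return {"added": added, "deleted": deleted, "changed": changed, "diffs": diffs}


# Usage with two hypothetical snapshots of a news site:
before = {"/news/1": "<p>Original headline</p>", "/news/2": "<p>Old story</p>"}
after = {"/news/1": "<p>Edited headline</p>", "/news/3": "<p>New story</p>"}
report = compare_snapshots(before, after)
print("added:", report["added"], "deleted:", report["deleted"], "changed:", report["changed"])
print(report["diffs"]["/news/1"])
```

The digest lets you detect that a page changed even if you keep only the hashes from the previous run. Producing the diff itself requires both versions of the page.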

Web Crawler in Python – TopCoder

With the advent of the big data era, the demand for information from the web has grown considerably. Many companies collect external data from the Internet for various reasons: analyzing the competition, summarizing news stories, tracking trends in specific markets, or collecting daily stock prices to build predictive models. As a result, web crawlers are becoming more important. Web crawlers automatically browse or grab information from the Internet according to specified rules.

Classification of web crawlers

According to the technology and structure they implement, web crawlers can be divided into general web crawlers, focused web crawlers, incremental web crawlers, and deep web crawlers.

Basic workflow of general web crawlers

The basic workflow of a general web crawler is as follows:

1. Get the initial URL. The initial URL is the crawler's entry point and links to the first web page to be crawled.
2. While crawling a web page, fetch its HTML content, then parse it to get the URLs of all the pages it links to.
3. Put these URLs into a queue.
4. Loop through the queue, reading the URLs one by one. For each URL, crawl the corresponding web page, then repeat the crawling process above.
5. Check whether the stop condition is met. If no stop condition is set, the crawler will keep crawling until it cannot find a new URL.

Environment preparation for web crawling

• Make sure a browser such as Chrome or IE is installed.
• Download and install Python.
• Download a suitable IDE. This article uses Visual Studio Code.
• Install the required Python packages.

pip is a Python package management tool for downloading, installing, and uninstalling Python packages. It is normally included when you install Python, so we can directly use pip install to install the libraries we need:


pip install beautifulsoup4
pip install requests
pip install lxml
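The general crawler workflow described above does not itself depend on these packages. That workflow is: start from an initial URL, extract the page's links, queue them, and stop when a condition is met. The sketch below is illustrative, not code from the original article, and implements the workflow with the standard library only. A `deque` serves as the URL queue, a set records visited pages, and `max_pages` is the stop condition.

The `fetch` parameter is an assumption made so the sketch runs without network access. It takes a URL and returns HTML. The names `crawl` and `LinkExtractor` are hypothetical. Restricting the crawl to the starting host is also an added design choice.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collect the href value of every <a> tag fed to the parser."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(start_url, fetch, max_pages=50):
    """Breadth-first crawl starting from start_url (the initial URL).

    fetch(url) must return the page's HTML as a string; max_pages is the
    stop condition. Only links on the same host as start_url are followed.
    Returns the set of URLs that were crawled.
    """
    host = urlparse(start_url).netloc
    queue = deque([start_url])  # URLs waiting to be crawled
    visited = set()             # URLs already crawled
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        if url in visited:
            continue
        visited.add(url)
        parser = LinkExtractor()
        parser.feed(fetch(url))
        for href in parser.links:
            # Resolve relative links against the current page and drop #fragments.
            link = urldefrag(urljoin(url, href)).url
            if urlparse(link).netloc == host and link not in visited:
                queue.append(link)
    return visited


# Usage with a fake three-page site instead of real HTTP requests:
site = {
    "https://example.com/": '<a href="/a">A</a> <a href="/b#top">B</a>',
    "https://example.com/a": '<a href="/">Home</a> <a href="https://other.org/">Elsewhere</a>',
    "https://example.com/b": "<p>No links here</p>",
}
print(sorted(crawl("https://example.com/", lambda url: site.get(url, ""))))
```

Against a real site, `fetch` could be something like `lambda url: requests.get(url, timeout=10).text`.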

• BeautifulSoup is a library for easily parsing HTML and XML data.
• lxml is a fast XML and HTML parsing library; using it as BeautifulSoup's parser speeds up parsing.
• requests is a library for sending HTTP requests (such as GET and POST). We will mainly use it to fetch the source code of a given web page.

The following example uses a crawler to collect the names and introductions of the top 100 movies on Rotten Tomatoes' "Top 100 movies of all time" page. We need to extract each movie's name and ranking from this page, then follow each movie's link to get its introduction.

1. Import libraries
First, import the libraries you need to use.


import requests
import lxml  # not used directly; the import fails early if the 'lxml' parser is missing
from bs4 import BeautifulSoup

2. Create and access the URL
Create the URL of the page to crawl, set up the header information, and then send a network request and wait for the response.

url = ''  # address of the Rotten Tomatoes "Top 100 movies of all time" page
f = requests.get(url)

When requesting a web page, you may sometimes get a 403 (Forbidden) error, which means the server has refused your request. This is an anti-crawler measure that some websites use to prevent malicious collection of information. In that case, you can often still access the page by sending header information that mimics a browser, such as a browser's User-Agent string:


headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/63.0.3239.132 Safari/537.36 QIHU 360SE'}
f = requests.get(url, headers=headers)
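When you send many requests, it can help to set the browser-like header once on a `requests.Session` rather than passing it to every call. The movie loop in this tutorial, for example, requests one page per movie. The sketch below also uses `raise_for_status()` so that a 403 raises `requests.HTTPError` instead of silently handing an error page to the parser. The helper names `make_session` and `get_page` are hypothetical, and the 10-second timeout is an arbitrary assumption.

```python
import requests

# The browser-like User-Agent used in the tutorial.
HEADERS = {
    "User-Agent": (
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 "
        "(KHTML, like Gecko) Chrome/63.0.3239.132 Safari/537.36 QIHU 360SE"
    )
}


def make_session(headers=HEADERS):
    """Return a Session that sends `headers` with every request and reuses
    connections to the same host."""
    session = requests.Session()
    session.headers.update(headers)  # replaces the default python-requests User-Agent
    return session


def get_page(session, url, timeout=10):
    """Fetch `url` and return the raw response body.

    raise_for_status() raises requests.HTTPError for 4xx/5xx responses,
    so a 403 Forbidden stops here instead of reaching the parser.
    """
    response = session.get(url, timeout=timeout)
    response.raise_for_status()
    return response.content


# Usage (performs real HTTP requests, so it is left commented out):
# session = make_session()
# soup = BeautifulSoup(get_page(session, url), 'lxml')
```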

3. Parse the web page
Create a BeautifulSoup object and specify lxml as the parser:
soup = BeautifulSoup(f.content, 'lxml')

4. Extract information
The BeautifulSoup library has three methods for finding elements:
• find_all(): finds all matching nodes
• find(): finds a single (the first matching) node
• select(): finds nodes that match a CSS selector

We need to get the name and link of each of the top 100 movies. Inspecting the page shows that the movie names are the <a> links inside the table with class "table". After loading the page content into BeautifulSoup, we can use the find method to extract them:
movies = soup.find('table', {'class': 'table'}).find_all('a')

Get an introduction to each movie
After extracting the names and links, you also need to extract each movie's introduction. The introduction is on each movie's own page, so you need to follow each movie's link to get it. The code is:


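movies_lst = []
soup = BeautifulSoup(f.content, 'lxml')
movies = soup.find('table', {'class': 'table'}).find_all('a')
num = 0
for anchor in movies:
    # hrefs are relative, so put the site's base URL in the empty string
    urls = '' + anchor['href']
    movies_lst.append(urls)
    num += 1
    movie_url = urls
    # fetch each movie's own page with the same browser-like headers
    movie_f = requests.get(movie_url, headers=headers)
    movie_soup = BeautifulSoup(movie_f.content, 'lxml')
    movie_content = movie_soup.find('div', {'class': 'movie_synopsis clamp clamp-6 js-clamp'})
    print(num, urls, '\n', 'Movie:' + anchor.get_text(strip=True))
    print('Movie info:' + movie_content.get_text(strip=True))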
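The loop above relies on three BeautifulSoup lookups. The live page cannot be bundled here, so the sketch below runs `find()`, `find_all()` and `select()` against a small hand-written HTML string. The string assumes only the structure the loop depends on: a `<table class="table">` of links and a synopsis `<div>`. The real Rotten Tomatoes markup may differ and may have changed since the article was written.

It uses Python's built-in `'html.parser'`, but `'lxml'` behaves the same here if installed. It also uses `get_text(strip=True)`, which always returns a string. `.string` returns `None` when a tag has more than one child.

```python
from bs4 import BeautifulSoup

# Hypothetical markup mimicking the structure the tutorial's code expects.
html = """
<table class="table">
  <tr><td>1.</td><td><a href="/m/first_movie">  First Movie (2019) </a></td></tr>
  <tr><td>2.</td><td><a href="/m/second_movie">Second Movie (1950)</a></td></tr>
</table>
<div class="movie_synopsis clamp clamp-6 js-clamp"> A short synopsis. </div>
"""

soup = BeautifulSoup(html, "html.parser")

# find(): the first matching node; find_all(): every matching node inside it.
anchors = soup.find("table", {"class": "table"}).find_all("a")

# select(): the same anchors through a CSS selector.
same_anchors = soup.select("table.table a")

# A multi-valued class attribute can be matched by its exact full string...
synopsis = soup.find("div", {"class": "movie_synopsis clamp clamp-6 js-clamp"})
# ...or, more robustly, by a single class name in a CSS selector.
synopsis_css = soup.select_one("div.movie_synopsis")

for rank, anchor in enumerate(anchors, start=1):
    print(rank, anchor["href"], anchor.get_text(strip=True))
print(synopsis.get_text(strip=True))
```

Matching the full class string breaks if the site reorders or adds a class. The single-class selector keeps working in that case.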

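The loop prints each movie's rank number, URL, name, and introduction.

Write the crawled data to Excel
To make the data easier to analyze, the crawled data can be written to an Excel file. We use xlwt (installable with pip install xlwt), which writes the older .xls format. First, import it:
from xlwt import *
Then create an empty table: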

workbook = Workbook(encoding='utf-8')
table = workbook.add_sheet('data')

Create the header of each column in the first row.

table.write(0, 0, 'Number')
table.write(0, 1, 'movie_url')
table.write(0, 2, 'movie_name')
table.write(0, 3, 'movie_introduction')

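Inside the loop, write each movie's data into the sheet, one row per movie. Rows are zero-indexed and the first row holds the headers, so initialize line = 1 before the loop: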

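table.write(line, 0, num)
table.write(line, 1, urls)
table.write(line, 2, anchor.get_text(strip=True))
table.write(line, 3, movie_content.get_text(strip=True))
line += 1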

Finally, save the workbook:
workbook.save('')  # pass the name of the output .xls file

The final code is:


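import requests
from bs4 import BeautifulSoup
from xlwt import *

url = ''  # address of the Rotten Tomatoes "Top 100 movies of all time" page
headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/63.0.3239.132 Safari/537.36 QIHU 360SE'}
f = requests.get(url, headers=headers)
soup = BeautifulSoup(f.content, 'lxml')
movies = soup.find('table', {'class': 'table'}).find_all('a')

# create the workbook and the header row
workbook = Workbook(encoding='utf-8')
table = workbook.add_sheet('data')
table.write(0, 0, 'Number')
table.write(0, 1, 'movie_url')
table.write(0, 2, 'movie_name')
table.write(0, 3, 'movie_introduction')

line = 1  # data rows start below the header row
num = 0
for anchor in movies:
    urls = '' + anchor['href']  # put the site's base URL in the empty string
    num += 1
    movie_f = requests.get(urls, headers=headers)
    movie_soup = BeautifulSoup(movie_f.content, 'lxml')
    movie_content = movie_soup.find('div', {'class': 'movie_synopsis clamp clamp-6 js-clamp'})
    table.write(line, 0, num)
    table.write(line, 1, urls)
    table.write(line, 2, anchor.get_text(strip=True))
    table.write(line, 3, movie_content.get_text(strip=True))
    line += 1

workbook.save('')  # pass the name of the output .xls file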

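xlwt writes only the older .xls format. If all you need is a file that Excel can open, the standard library's `csv` module is a dependency-free alternative. This is a swapped-in technique, not what the article uses. The sketch below keeps the same four columns as the Excel version.

It assumes the scraped data has been collected as `(url, name, introduction)` tuples in rank order. `save_rows_csv`, `FIELDNAMES` and that tuple layout are hypothetical. Deriving the Number column with `enumerate` replaces the manual `num` and `line` counters.

```python
import csv
import os
import tempfile

FIELDNAMES = ["Number", "movie_url", "movie_name", "movie_introduction"]


def save_rows_csv(rows, path):
    """Write (url, name, introduction) tuples to a CSV file, one row per movie."""
    # newline="" lets the csv module control line endings; the utf-8-sig
    # encoding adds a BOM so Excel recognizes UTF-8 text such as accented titles.
    with open(path, "w", newline="", encoding="utf-8-sig") as fh:
        writer = csv.writer(fh)
        writer.writerow(FIELDNAMES)  # header row
        for number, (url, name, intro) in enumerate(rows, start=1):
            writer.writerow([number, url, name, intro])


# Usage with hypothetical rows; fields containing commas are quoted automatically.
rows = [
    ("https://example.com/m/a", "Movie A", "Intro A"),
    ("https://example.com/m/b", "Movie B", "Intro, with a comma"),
]
path = os.path.join(tempfile.mkdtemp(), "movies.csv")
save_rows_csv(rows, path)
print(open(path, encoding="utf-8-sig").read())
```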