Data Extraction Jobs

What is Data Extraction? [ Tools & Techniques ]

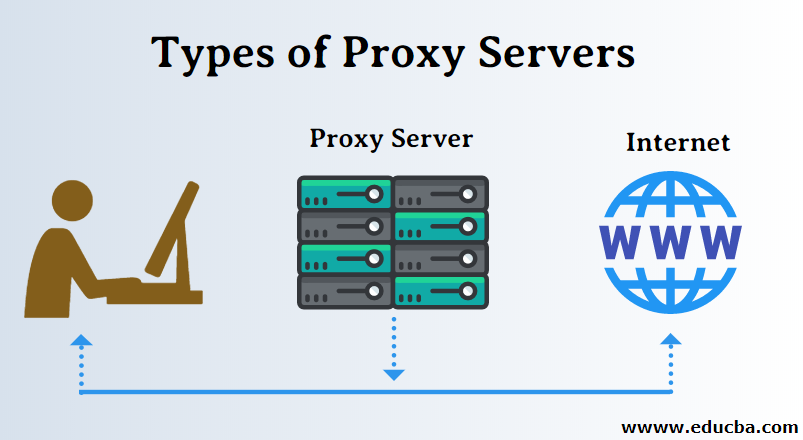

Data extraction is the process of obtaining data from a database or SaaS platform so that it can be replicated to a destination — such as a data warehouse — designed to support online analytical processing (OLAP).

Data extraction is the first step in a data ingestion process called ETL — extract, transform, and load. The goal of ETL is to prepare data for analysis or business intelligence (BI).

Suppose an organization wants to monitor its reputation in the marketplace. It may have data from many sources, including online reviews, social media mentions, and online transactions. An ETL tool can extract data from these sources and load it into a data warehouse where it can be analyzed and mined for insights into brand perception.

Data extraction does not need to be a painful procedure, for you or for your database.

Extraction jobs may be scheduled, or analysts may extract data on demand as dictated by business needs and analysis goals. Data can be extracted in three primary ways:

Update notification

The easiest way to extract data from a source system is to have that system issue a notification when a record has been changed. Most databases provide a mechanism for this so that they can support database replication (change data capture or binary logs), and many SaaS applications provide webhooks, which offer conceptually similar functionality.
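As a minimal sketch of how a consumer might apply such notifications, the Python below replays a list of change events against an in-memory replica. The event shape (`op`, `id`, `row`) and the function name `apply_change` are assumptions made for illustration; real change data capture streams and webhook payloads each define their own formats.

```python
from typing import Any, Dict, List

def apply_change(replica: Dict[int, Dict[str, Any]], event: Dict[str, Any]) -> None:
    """Apply one change notification to a replica keyed by primary key.

    The event layout used here is hypothetical, not any vendor's format.
    """
    op = event["op"]
    if op in ("insert", "update"):
        # Upsert: the notification carries the full new version of the row.
        replica[event["id"]] = event["row"]
    elif op == "delete":
        # Deletions are visible because the source announces them.
        replica.pop(event["id"], None)
    else:
        raise ValueError(f"unknown operation: {op!r}")

replica: Dict[int, Dict[str, Any]] = {}
events: List[Dict[str, Any]] = [
    {"op": "insert", "id": 1, "row": {"name": "Ada"}},
    {"op": "insert", "id": 2, "row": {"name": "Grace"}},
    {"op": "update", "id": 1, "row": {"name": "Ada L."}},
    {"op": "delete", "id": 2},
]
for event in events:
    apply_change(replica, event)
```

Because every change, including a delete, arrives as an explicit event, the replica can stay in step with the source without rereading whole tables.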

Incremental extraction

Some data sources cannot notify you that an update has occurred, but they can identify which records have been modified and provide an extract of those records. During subsequent ETL steps, the data extraction code needs to identify and propagate changes. One drawback of incremental extraction is that it may not be able to detect deleted records in source data, because there’s no way to see a record that’s no longer there.
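One common way to implement incremental extraction is to filter on a last-modified column and remember the highest value seen (a bookmark). The sketch below uses Python's built-in `sqlite3` module; the `customers` table, its `updated_at` column, and the function `extract_incremental` are assumptions for illustration, and a real source may expose modified records differently.

```python
import sqlite3

def extract_incremental(conn, bookmark):
    """Return rows modified after `bookmark`, plus the new bookmark value."""
    rows = conn.execute(
        "SELECT id, name, updated_at FROM customers "
        "WHERE updated_at > ? ORDER BY updated_at",
        (bookmark,),
    ).fetchall()
    # Advance the bookmark only if something was extracted.
    new_bookmark = rows[-1][2] if rows else bookmark
    return rows, new_bookmark

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, updated_at TEXT)"
)
conn.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [(1, "Ada", "2024-01-01T00:00:00"), (2, "Grace", "2024-01-02T00:00:00")],
)

# First run: an empty bookmark sorts before every timestamp, so all rows match.
first_batch, bookmark = extract_incremental(conn, "")

# The source then changes: one row is updated and one is deleted.
conn.execute(
    "UPDATE customers SET name = 'Grace H.', "
    "updated_at = '2024-01-03T00:00:00' WHERE id = 2"
)
conn.execute("DELETE FROM customers WHERE id = 1")

# Second run: only the updated row appears; the delete leaves no trace.
second_batch, bookmark = extract_incremental(conn, bookmark)
```

The second batch contains the updated row but nothing about the deleted one, which is the drawback described above.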

Full extraction

The first time you replicate any source you have to do a full extraction, and some data sources have no way to identify data that has been changed, so reloading a whole table may be the only way to get data from that source. Because full extraction involves high data transfer volumes, which can put a load on the network, it’s not the best option if you can avoid it.
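A full extraction can be sketched as reading every row of a table into a snapshot. The example below, again using `sqlite3` with a hypothetical `customers` table and hypothetical helper names, also shows one side effect of rereading everything: comparing the keys of two snapshots reveals which records were deleted in between.

```python
import sqlite3

def full_extract(conn):
    """Read every row of the (hypothetical) customers table, keyed by id."""
    rows = conn.execute("SELECT id, name FROM customers").fetchall()
    return {row_id: name for row_id, name in rows}

def deleted_keys(previous, current):
    """Keys present in the previous snapshot but absent from the current one."""
    return set(previous) - set(current)

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany(
    "INSERT INTO customers VALUES (?, ?)",
    [(1, "Ada"), (2, "Grace"), (3, "Edsger")],
)

snapshot_1 = full_extract(conn)          # initial full load
conn.execute("DELETE FROM customers WHERE id = 2")
snapshot_2 = full_extract(conn)          # later full reload

removed = deleted_keys(snapshot_1, snapshot_2)
```

The cost is that every run transfers the whole table, which is why this approach is usually reserved for the initial load or for sources with no change tracking.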

Whether the source is a database or a SaaS platform, the data extraction process involves the following steps:

Check for changes to the structure of the data, including the addition of new tables and columns. Changed data structures have to be dealt with programmatically.

Retrieve the target tables and fields from the records specified by the integration’s replication scheme.

Extract the appropriate data, if any.
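The first of these steps, checking for structural changes, can be sketched for a SQLite source with `PRAGMA table_info`, which returns one row per column. The `orders` table and the helper names below are assumptions for illustration; other databases expose schema metadata through their own catalogs.

```python
import sqlite3

def column_names(conn, table):
    """List a table's column names; index 1 of each PRAGMA row is the name.

    The table name is interpolated directly, so pass only trusted names.
    """
    return [row[1] for row in conn.execute(f"PRAGMA table_info({table})")]

def new_columns(known, conn, table):
    """Columns that exist in the source now but were not seen on the last run."""
    return [name for name in column_names(conn, table) if name not in known]

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, total REAL)")

# Structure recorded during a previous extraction run.
known = column_names(conn, "orders")

# The source schema changes between runs.
conn.execute("ALTER TABLE orders ADD COLUMN currency TEXT")

added = new_columns(known, conn, "orders")
```

A real pipeline would then decide whether to add the new column to the destination or ignore it, according to its replication settings.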

Extracted data is loaded into a destination that serves as a platform for BI reporting, such as a cloud data warehouse like Amazon Redshift, Microsoft Azure SQL Data Warehouse, Snowflake, or Google BigQuery. The load process needs to be specific to the destination.

API-specific challenges

While it may be possible to extract data from a database using SQL, the extraction process for SaaS products relies on each platform’s application programming interface (API). Working with APIs can be challenging:

APIs are different for every application.

Many APIs are not well documented. Even APIs from reputable, developer-friendly companies sometimes have poor documentation.

APIs change over time. For example, Facebook’s “move fast and break things” approach means the company frequently updates its reporting APIs – and Facebook doesn’t always notify API users in advance.
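One defensive habit that helps when an API changes without notice is to check each response for the fields the extraction code depends on, so that a renamed or dropped field fails loudly instead of silently producing gaps. The sketch below is an illustration only; the field names and payloads are hypothetical and do not describe any real API.

```python
from typing import Any, Dict, Iterable, List

def missing_fields(record: Dict[str, Any], expected: Iterable[str]) -> List[str]:
    """Return the expected field names that are absent from an API record."""
    return [name for name in expected if name not in record]

# Hypothetical fields the extraction code relies on.
EXPECTED = ("id", "campaign", "clicks")

# A payload in the shape the code was written against.
old_payload = {"id": 7, "campaign": "spring", "clicks": 120}

# The same record after the provider renames a field without notice.
new_payload = {"id": 7, "campaign_name": "spring", "clicks": 120}

for payload in (old_payload, new_payload):
    problems = missing_fields(payload, EXPECTED)
    if problems:
        print(f"response is missing expected fields: {problems}")
```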

ETL: Build-your-own vs. cloud-first

In the past, developers would write their own ETL tools to extract and replicate data. This works fine when there is a single, or only a few, data sources.

However, when sources are more numerous or complex, this approach does not scale well. The more sources there are, the greater the likelihood that something will require maintenance. How does one deal with changing APIs? What happens when a source or destination changes its format? What if the script has an error that goes unnoticed, leading to decisions being made on bad data? It doesn’t take long for a simple script to become a maintenance headache.

Cloud-based ETL tools allow users to connect sources and destinations quickly without writing or maintaining code, and without worrying about other pitfalls that can compromise data extraction and loading. That in turn makes it easy to provide access to data to anyone who needs it for analytics, including executives, managers, and individual business units.

To reap the benefits of analytics and BI programs, you must understand the context of your data sources and destinations, and use the right tools. For popular data sources, there’s no reason to build a data extraction tool.

Stitch offers an easy-to-use ETL tool to replicate data from sources to destinations; it makes the job of getting data for analysis faster, easier, and more reliable, so that businesses can get the most out of their data analysis and BI programs.

Stitch makes it simple to extract data from more than 90 sources and move it to a target destination. Sign up for a free trial and get your data to its destination in minutes.


Oil Extractor Salary | Comparably

How much does an Oil Extractor make? The average Oil Extractor in the US makes $30,117. Oil Extractors make the most in Los Angeles at $30,117, averaging total compensation 0% greater than the US average.

Other metro areas listed, each at 0%: Washington, DC; San Francisco; Seattle; New York; Portland; Dallas.

Salary Ranges for Oil Extractors

The salaries of Oil Extractors in the US range from $18,780 to $49,960, with a median salary of $27,950. The middle 50% of Oil Extractors makes $27,950, with the top 75% making $49,960.

How much tax will you have to pay as an Oil Extractor

For an individual filer in this tax bracket, you would have an estimated average federal tax in 2018 of 12%. After a federal tax rate of 12% has been taken out, Oil Extractors could expect to have a take-home pay of $26,693/year, with each paycheck equaling approximately $1,112*.

* Assuming bi-monthly pay period. Taxes estimated using tax rates for a single filer using 2018 federal and state tax tables. Metro-specific taxes are not considered in calculations. This data is intended to be an estimate, not prescriptive financial or tax advice.

Quality of Life for Oil Extractor

With a take-home pay of roughly $2,224/month, and the median 2BR apartment rental price of $2,506/mo**, an Oil Extractor would pay 112.66% of their monthly take-home salary towards rent.

** This rental cost was derived according to an online report at Apartment List.

*** Average cost of living was acquired from Numbeo’s Cost of Living Index.
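The paycheck and rent figures quoted above can be reproduced from the stated annual take-home pay. The short Python check below assumes that "bi-monthly" means 24 pay periods per year, which is the reading that matches the quoted paycheck amount; the variable names are illustrative.

```python
# Figures as stated in the text above.
annual_take_home = 26_693   # dollars per year after tax
median_rent = 2_506         # dollars per month, median 2BR apartment

# Assumption: "bi-monthly" is read as twice a month, i.e. 24 paychecks a year.
pay_periods_per_year = 24

monthly_take_home = annual_take_home / 12
per_paycheck = annual_take_home / pay_periods_per_year
rent_share_percent = median_rent / monthly_take_home * 100

print(f"monthly take-home: ${monthly_take_home:,.0f}")
print(f"per paycheck:      ${per_paycheck:,.0f}")
print(f"rent share:        {rent_share_percent:.2f}%")
```

The rent share exceeds 100% because the median rent is higher than the monthly take-home pay.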

Data Extraction Specialist (ITS 5) – Government Jobs

Data Extraction Specialist (ITS 5)

We’re seeking a talented data engineer who thrives in a fast-paced, agile environment, enjoys the challenge of working with large healthcare data sets and highly complex business scenarios, and is passionate about data and analytics. To be successful in this role you will need to have superior analytical, communication, presentation and organizational skills. The ideal candidate is bright, responsible, self-motivated and gets stuff done. We look for problem solvers who can intuitively anticipate problems, look beyond immediate issues, and take initiative to improve solutions. In short, we look for people who take pride in the craft of data engineering and have proven to be great at it.

As the Data Extraction Specialist you will provide reports or data sets for HCA business areas or external partners, focusing on large data extracts, reporting/measure requirement needs specifically related to Washington’s Medicaid transformation demonstration project. You’ll be part of a team that can build a new, cloud-based data warehouse and BI solution from start to finish.

Have you ever dreamt of working for an organization where the commitment to delivering quality data AND high employee engagement came together? Are you looking to grow your career and professional development while making a difference in the lives of Washingtonians? Then apply to work for Washington State Health Care Authority!

About You

I am motivated by data driven decision-making

I pride myself on the accuracy of my work and believe data tells a story

I interact well with technical and non-technical audiences

I always develop processes to ensure that security and privacy requirements are followed

I know SQL like the back of my hand and can develop complex code for large data extracts and reporting

I am the master of accomplishing items on my to-do list

I enjoy documenting the work that I do

I don’t just tolerate change, I love change

I am a problem solver

Here is what we are looking for (Required Qualifications):

We need a highly motivated, self-starting person who can work in an agile environment and adapt to […]

[…] years of information technology experience such as consulting, analyzing, designing, programming, installing and/or maintaining computer software applications, hardware, telecommunications, or network infrastructure equipment, directing projects, providing customer or technical support in information technology; or administering or supervising staff who performed work in any of these information technology […]

[…] years of experience:

Working with large data warehouses, databases or operational data stores

Writing complex SQL queries and performance tuning

Utilizing testing methodologies

Two years of experience:

Developing reports using Microsoft Reporting Services (SSRS), Tableau or equivalent software

Developing and automating data extractions using SQL Server Integration Services (SSIS), Matillion or equivalent software

*Experience may be gained concurrently*

It would be great if you have the following experience, but it isn’t a deal breaker:

Experience with healthcare data.

Experience with cloud computing platforms. AWS related project experience is preferred.

Experience with Massively Parallel Processing (MPP) databases or columnar data stores. Amazon Redshift related project experience is preferred.

Experience with ETL tools. Matillion related project experience is preferred.

Experience with data visualization tools. Tableau related project experience is preferred.

Experience with programming languages such as C#, Java, C++ etc. and scripting languages like Python, JSON, Ruby and Unix shell scripts.

Experience with Cloud Big Data Platforms. Amazon Elastic MapReduce (EMR) related project experience is preferred.

Experience with Agile development methodologies. Scrum related project experience is preferred.

About the HCA:

The Washington State Health Care Authority purchases health care for more than 2 million Washington residents through Apple Health (Medicaid), the Public Employees Benefits Board (PEBB) Program, and, beginning in 2020, the School Employees Benefits Board (SEBB) Program. As the largest health care purchaser in the state, we lead the effort to transform health care, helping ensure Washington residents have access to better health and better care at a lower cost.

This position is covered by the Washington Federation of State Employees (WFSE).

How to Apply:

Only candidates who reflect the minimum qualifications on their State application will be considered. Failure to follow the application instructions below may lead to disqualification. To apply for this position you will need to complete your profile and attach:

A cover letter

Current resume

Three professional references

If you have questions about the process, or need reasonable accommodation, please contact the recruiter before the posting closes. The candidate pool certified for this recruitment may be used to fill future similar vacancies for up to the next six months.

Please Note: This is a project position expected to last until 12/31/2021.

Washington State is an equal opportunity employer. Persons with disabilities needing assistance in the application process, or those needing this job announcement in an alternative format, may call the Human Resources Office at 360.725.1197, or email […]

[…] to a new hire, a background check including criminal record history will be conducted. Information from the background check will not necessarily preclude employment but will be considered in determining the applicant’s suitability and competence to perform in the position.

Frequently Asked Questions about data extraction jobs

What is a data extraction job?

Data extraction is the process of obtaining data from a database or SaaS platform so that it can be replicated to a destination — such as a data warehouse — designed to support online analytical processing (OLAP). … The goal of ETL is to prepare data for analysis or business intelligence (BI).

How much do data extractors make?

Salary Ranges for Oil Extractors: The salaries of Oil Extractors in the US range from $18,780 to $49,960, with a median salary of $27,950. The middle 50% of Oil Extractors makes $27,950, with the top 75% making $49,960.

What does a data extraction specialist do?

As the Data Extraction Specialist you will provide reports or data sets for HCA business areas or external partners, focusing on large data extracts, reporting/measure requirement needs specifically related to Washington’s Medicaid transformation demonstration project.