What Are Crawlers

What is a web crawler? | How web spiders work | Cloudflare

What is a web crawler bot?

A web crawler, spider, or search engine bot downloads and indexes content from all over the Internet. The goal of such a bot is to learn what (almost) every webpage on the web is about, so that the information can be retrieved when it’s needed. They’re called “web crawlers” because crawling is the technical term for automatically accessing a website and obtaining data via a software program.

These bots are almost always operated by search engines. By applying a search algorithm to the data collected by web crawlers, search engines can provide relevant links in response to user search queries, generating the list of webpages that show up after a user types a search into Google or Bing (or another search engine).

A web crawler bot is like someone who goes through all the books in a disorganized library and puts together a card catalog so that anyone who visits the library can quickly and easily find the information they need. To help categorize and sort the library’s books by topic, the organizer will read the title, summary, and some of the internal text of each book to figure out what it’s about.

However, unlike a library, the Internet is not composed of physical piles of books, and that makes it hard to tell if all the necessary information has been indexed properly, or if vast quantities of it are being overlooked. To try to find all the relevant information the Internet has to offer, a web crawler bot will start with a certain set of known webpages and then follow hyperlinks from those pages to other pages, follow hyperlinks from those other pages to additional pages, and so on.

It is unknown how much of the publicly available Internet is actually crawled by search engine bots. Some sources estimate that only 40-70% of the Internet is indexed for search – and that’s billions of webpages.

What is search indexing?

Search indexing is like creating a library card catalog for the Internet so that a search engine knows where on the Internet to retrieve information when a person searches for it. It can also be compared to the index in the back of a book, which lists all the places in the book where a certain topic or phrase is mentioned.

Indexing focuses mostly on the text that appears on the page, and on the metadata* about the page that users don’t see. When most search engines index a page, they add all the words on the page to the index – except for words like “a,” “an,” and “the” in Google’s case. When users search for those words, the search engine goes through its index of all the pages where those words appear and selects the most relevant ones.

*In the context of search indexing, metadata is data that tells search engines what a webpage is about. Often the meta title and meta description are what will appear on search engine results pages, as opposed to content from the webpage that’s visible to users.
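The word-level indexing described above can be illustrated with a small inverted index. This is only a sketch under stated assumptions: the stop-word list, the `build_index` and `search` helpers, and the example URLs are all hypothetical, and real search engines rank results rather than simply intersecting sets.

```python
import re
from collections import defaultdict

# Assumption: a tiny illustrative stop-word list; real engines choose their own.
STOP_WORDS = {"a", "an", "the"}


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(pages):
    """Map each non-stop word to the set of page URLs that contain it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in tokenize(text):
            if word not in STOP_WORDS:
                index[word].add(url)
    return index


def search(index, query):
    """Return the URLs that contain every non-stop word in the query."""
    words = [w for w in tokenize(query) if w not in STOP_WORDS]
    if not words:
        return set()
    results = set(index.get(words[0], set()))
    for word in words[1:]:
        results &= index.get(word, set())
    return results


# Hypothetical pages standing in for crawled content.
pages = {
    "https://example.com/spiders": "The spider crawls a web",
    "https://example.com/library": "A library keeps an index of books",
}
index = build_index(pages)
print(sorted(search(index, "the index")))
```

Looking up a word is a dictionary access, which is why the index, rather than the raw pages, is what gets consulted at query time.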

How do web crawlers work?

The Internet is constantly changing and expanding. Because it is not possible to know how many total webpages there are on the Internet, web crawler bots start from a seed, or a list of known URLs. They crawl the webpages at those URLs first. As they crawl those webpages, they will find hyperlinks to other URLs, and they add those to the list of pages to crawl next.
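The seed-and-follow process above can be sketched as a breadth-first traversal. The code below is a minimal illustration, not how any particular search engine works: the `crawl` function, the `fetch` callback, and the in-memory `FAKE_WEB` pages are assumptions made so the example runs without network access.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in an HTML document."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(seeds, fetch, max_pages=100):
    """Breadth-first crawl from the seed URLs.

    fetch(url) is a caller-supplied function returning HTML or None.
    """
    frontier = deque(seeds)   # URLs waiting to be crawled
    seen = set(seeds)         # URLs already queued, to avoid repeats
    visited = []
    while frontier and len(visited) < max_pages:
        url = frontier.popleft()
        html = fetch(url)
        if html is None:
            continue
        visited.append(url)
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            # Resolve relative links and drop any #fragment.
            absolute, _fragment = urldefrag(urljoin(url, href))
            if absolute not in seen:
                seen.add(absolute)
                frontier.append(absolute)
    return visited


# Hypothetical in-memory "web" used instead of real HTTP requests.
FAKE_WEB = {
    "https://example.com/": '<a href="/a">A</a> <a href="/b">B</a>',
    "https://example.com/a": '<a href="/b">B</a> <a href="/c#top">C</a>',
    "https://example.com/b": '<a href="/">home</a>',
    "https://example.com/c": "no links here",
}
order = crawl(["https://example.com/"], FAKE_WEB.get)
print(order)
```

The `max_pages` limit reflects the point that the process could otherwise continue almost indefinitely.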

Given the vast number of webpages on the Internet that could be indexed for search, this process could go on almost indefinitely. However, a web crawler will follow certain policies that make it more selective about which pages to crawl, in what order to crawl them, and how often they should crawl them again to check for content updates.

The relative importance of each webpage: Most web crawlers don’t crawl the entire publicly available Internet and aren’t intended to; instead they decide which pages to crawl first based on the number of other pages that link to that page, the number of visitors that page gets, and other factors that signify the page’s likelihood of containing important information.

The idea is that a webpage that is cited by a lot of other webpages and gets a lot of visitors is likely to contain high-quality, authoritative information, so it’s especially important that a search engine has it indexed – just as a library might make sure to keep plenty of copies of a book that gets checked out by lots of people.
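One of the signals mentioned above, the number of other pages linking to a page, can be turned into a simple crawl ordering. This is a rough sketch only: the `link_graph` data and both helper functions are hypothetical, visitor counts are ignored, and real crawlers weigh many more factors in proprietary ways.

```python
from collections import Counter


def inbound_link_counts(link_graph):
    """Count how many distinct other pages link to each page."""
    counts = Counter()
    for source, targets in link_graph.items():
        for target in set(targets):      # count each source page once
            if target != source:         # ignore self-links
                counts[target] += 1
    return counts


def crawl_order(link_graph):
    """Order pages by inbound links (most first), ties broken by name."""
    counts = inbound_link_counts(link_graph)
    pages = set(link_graph) | set(counts)
    return sorted(pages, key=lambda page: (-counts[page], page))


# Hypothetical link graph: page -> pages it links to.
link_graph = {
    "home": ["docs", "blog"],
    "blog": ["docs", "home"],
    "docs": ["home"],
    "orphan": ["docs"],
}
print(crawl_order(link_graph))
```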

Revisiting webpages: Content on the Web is continually being updated, removed, or moved to new locations. Web crawlers will periodically need to revisit pages to make sure the latest version of the content is indexed.

Robots.txt requirements: Web crawlers also decide which pages to crawl based on the robots.txt protocol (also known as the robots exclusion protocol). Before crawling a webpage, they will check the robots.txt file hosted by that page’s web server. A robots.txt file is a text file that specifies the rules for any bots accessing the hosted website or application. These rules define which pages the bots can crawl, and which links they can follow.
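As an illustration of how a crawler might consult these rules, the sketch below uses Python's standard `urllib.robotparser` module. The rules in `ROBOTS_TXT`, the `ExampleBot` user agent, and the URLs are made up for the example; a real crawler would download the file from the site it is about to crawl.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for an imaginary site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Disallow: /search
"""


def load_rules(robots_txt):
    """Parse robots.txt text into a RobotFileParser."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


rules = load_rules(ROBOTS_TXT)
for url in (
    "https://example.com/index.html",
    "https://example.com/private/report.html",
    "https://example.com/search?q=crawlers",
):
    allowed = rules.can_fetch("ExampleBot", url)
    print(url, "->", "crawl" if allowed else "skip")
```

Note that these rules are advisory: the check only has an effect if the bot chooses to perform it.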

All these factors are weighted differently within the proprietary algorithms that each search engine builds into their spider bots. Web crawlers from different search engines will behave slightly differently, although the end goal is the same: to download and index content from webpages.

Why are web crawlers called ‘spiders’?

The Internet, or at least the part that most users access, is also known as the World Wide Web – in fact that’s where the “www” part of most website URLs comes from. It was only natural to call search engine bots “spiders,” because they crawl all over the Web, just as real spiders crawl on spiderwebs.

Should web crawler bots always be allowed to access web properties?

That’s up to the web property, and it depends on a number of factors. Web crawlers require server resources in order to index content – they make requests that the server needs to respond to, just like a user visiting a website or other bots accessing a website. Depending on the amount of content on each page or the number of pages on the site, it could be in the website operator’s best interests not to allow search indexing too often, since too much indexing could overtax the server, drive up bandwidth costs, or both.
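One way a well-behaved crawler can limit the load it places on a server is to enforce a minimum delay between requests to the same host. The `HostThrottle` class below is a hypothetical sketch of that idea; the two-second delay is an arbitrary assumption, and the clock and sleep functions are injectable so the demo runs instantly.

```python
import time
from urllib.parse import urlsplit


class HostThrottle:
    """Enforces a minimum delay between requests to the same host."""

    def __init__(self, min_delay_seconds=1.0, clock=time.monotonic, sleep=time.sleep):
        self.min_delay = min_delay_seconds
        self.clock = clock
        self.sleep = sleep
        self.last_request = {}  # host -> time of the most recent request

    def wait(self, url):
        """Block until the host may be contacted again; return seconds waited."""
        host = urlsplit(url).netloc
        waited = 0.0
        last = self.last_request.get(host)
        if last is not None:
            remaining = self.min_delay - (self.clock() - last)
            if remaining > 0:
                self.sleep(remaining)
                waited = remaining
        self.last_request[host] = self.clock()
        return waited


# Demo with a fake clock so no real time passes.
fake_now = [0.0]


def fake_sleep(seconds):
    fake_now[0] += seconds


throttle = HostThrottle(2.0, clock=lambda: fake_now[0], sleep=fake_sleep)
waits = [
    throttle.wait(url)
    for url in (
        "https://example.com/a",
        "https://example.com/b",     # same host: must wait
        "https://other.example/",    # different host: no wait
    )
]
print(waits)
```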

Also, developers or companies may not want some webpages to be discoverable unless a user already has been given a link to the page (without putting the page behind a paywall or a login). One example of such a case for enterprises is when they create a dedicated landing page for a marketing campaign, but they don’t want anyone not targeted by the campaign to access the page. In this way they can tailor the messaging or precisely measure the page’s performance. In such cases the enterprise can add a “no index” tag to the landing page, and it won’t show up in search engine results. They can also add a “disallow” rule for the page in the robots.txt file, and compliant search engine spiders won’t crawl it at all.
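The “no index” tag is commonly written as a robots meta tag such as `<meta name="robots" content="noindex">`. The sketch below shows how an indexer might detect it using the standard `html.parser` module; the `RobotsMetaParser` class, the `is_indexable` helper, and the sample HTML are illustrative assumptions, not any search engine's actual logic.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() == "robots":
            for token in attr.get("content", "").split(","):
                token = token.strip().lower()
                if token:
                    self.directives.add(token)


def is_indexable(html):
    """Return False if the page carries a noindex robots directive."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" not in parser.directives


# Hypothetical campaign landing page that opts out of search indexing.
landing_page = """
<html><head>
  <title>Spring campaign</title>
  <meta name="robots" content="noindex, nofollow">
</head><body>Offer details</body></html>
"""
print(is_indexable(landing_page))
```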

Website owners may not want web crawler bots to crawl part or all of their sites for a variety of other reasons as well. For instance, a website that offers users the ability to search within the site may want to block the search results pages, as these are not useful for most users. Other auto-generated pages that are only helpful for one user or a few specific users should also be blocked.

What is the difference between web crawling and web scraping?

Web scraping, data scraping, or content scraping is when a bot downloads the content on a website without permission, often with the intention of using that content for a malicious purpose.

Web scraping is usually much more targeted than web crawling. Web scrapers may be after specific pages or specific websites only, while web crawlers will keep following links and crawling pages continuously.

Also, web scraper bots may disregard the strain they put on web servers, while web crawlers, especially those from major search engines, will obey the robots.txt file and limit their requests so as not to overtax the web server.

How do web crawlers affect SEO?

SEO stands for search engine optimization, and it is the discipline of readying content for search indexing so that a website shows up higher in search engine results.

If spider bots don’t crawl a website, then it can’t be indexed, and it won’t show up in search results. For this reason, if a website owner wants to get organic traffic from search results, it is very important that they don’t block web crawler bots.

What web crawler bots are active on the Internet?

The bots from the major search engines are called:

Google: Googlebot (actually two crawlers, Googlebot Desktop and Googlebot Mobile, for desktop and mobile searches)

Bing: Bingbot

Yandex (Russian search engine): Yandex Bot

Baidu (Chinese search engine): Baidu Spider

There are also many less common web crawler bots, some of which aren’t associated with any search engine.
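A site operator might recognize the crawlers listed above by the user agent string they send. The sketch below is a naive illustration: the substring tokens in `KNOWN_CRAWLER_TOKENS` are assumptions based on commonly published crawler user agents, and because a user agent string can be spoofed, this check alone does not prove a request comes from the named search engine.

```python
# Assumed user agent tokens, mapped to the bot names used in the list above.
KNOWN_CRAWLER_TOKENS = {
    "googlebot": "Googlebot",
    "bingbot": "Bingbot",
    "yandexbot": "Yandex Bot",
    "baiduspider": "Baidu Spider",
}


def identify_crawler(user_agent):
    """Return the crawler name if a known token appears in the user agent."""
    lowered = user_agent.lower()
    for token, name in KNOWN_CRAWLER_TOKENS.items():
        if token in lowered:
            return name
    return None


samples = [
    "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
    "Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)",
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) ExampleBrowser/1.0",
]
for ua in samples:
    print(identify_crawler(ua))
```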

Why is it important for bot management to take web crawling into account?

Bad bots can cause a lot of damage, from poor user experiences to server crashes to data theft. However, in blocking bad bots, it’s important to still allow good bots, such as web crawlers, to access web properties. Cloudflare Bot Management allows good bots to keep accessing websites while still mitigating malicious bot traffic. The product maintains an automatically updated allowlist of good bots, like web crawlers, to ensure they aren’t blocked. Smaller organizations can gain a similar level of visibility and control over their bot traffic with Super Bot Fight Mode, available on Cloudflare Pro and Business plans.

What is a web crawler and how does it work? – Ryte

A crawler is a computer program that automatically searches documents on the Web. Crawlers are primarily programmed for repetitive actions so that browsing is automated. Search engines use crawlers most frequently to browse the internet and build an index. Other crawlers search different types of information such as RSS feeds and email addresses. The term crawler comes from the first search engine on the Internet: the Web Crawler. Synonyms include “bot” and “spider.” The best-known web crawler is the Googlebot.

Contents

1 How does a crawler work?

2 Applications

3 Examples of a crawler

4 Crawler vs. Scraper

5 Blocking a crawler

6 Significance for search engine optimization

7 References

8 Web Links

How does a crawler work?

In principle, a crawler is like a librarian. It looks for information on the Web, which it assigns to certain categories, and then indexes and catalogues it so that the crawled information is retrievable and can be evaluated.

The operations of these computer programs need to be established before a crawl is initiated. Every order is thus defined in advance. The crawler then executes these instructions automatically. An index is created with the results of the crawler, which can be accessed through output software.

The information a crawler will gather from the Web depends on the particular instructions.

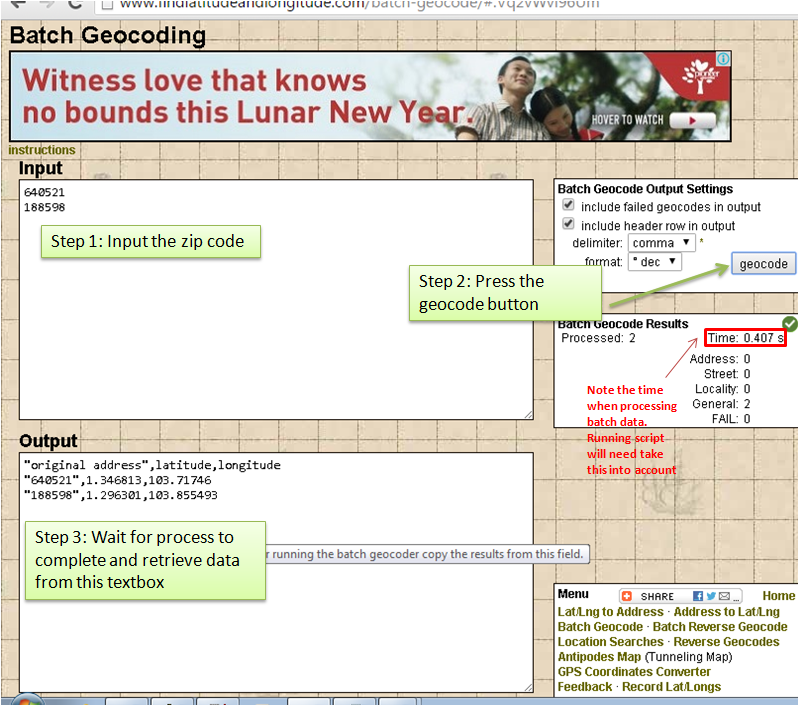

This graphic visualizes the link relationships that are uncovered by a crawler.

Applications

The classic goal of a crawler is to create an index. Thus crawlers are the basis for the work of search engines. They first scour the Web for content and then make the results available to users. Focused crawlers, for example, focus on current, content-relevant websites when indexing.

Web crawlers are also used for other purposes:

Price comparison portals search for information on specific products on the Web, so that prices or data can be compared accurately.

In the area of data mining, a crawler may collect publicly available e-mail or postal addresses of companies.

Web analysis tools use crawlers or spiders to collect data for page views, or incoming or outbound links.

Crawlers serve to provide information hubs with data, for example, news sites.

Examples of a crawler

The best-known crawler is the Googlebot, and there are many additional examples, as search engines generally use their own web crawlers. For example:

Bingbot

Slurp Bot

DuckDuckBot

Baiduspider

Yandex Bot

Sogou Spider

Exabot

Alexa Crawler[1]

Crawler vs. Scraper

Unlike a scraper, a crawler only collects and prepares data. Scraping, however, is a black hat technique that aims to copy content from other sites and publish it, unchanged or slightly modified, on one’s own website. While a crawler mostly deals with metadata that is not visible to the user at first glance, a scraper extracts tangible content.

Blocking a crawler

If you don’t want certain crawlers to browse your website, you can exclude their user agent using robots.txt. However, that cannot prevent content from being indexed by search engines. The noindex meta tag or the canonical tag serves better for this purpose.
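Excluding one user agent while leaving others unaffected might look like the following sketch, checked here with Python's standard `urllib.robotparser`. The `BadBot` and `FriendlyBot` names and the rules in `BLOCKING_RULES` are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that shuts out one crawler and allows the rest.
BLOCKING_RULES = """\
User-agent: BadBot
Disallow: /

User-agent: *
Disallow:
"""

blocker = RobotFileParser()
blocker.parse(BLOCKING_RULES.splitlines())

page = "https://example.com/articles/crawlers.html"
for agent in ("BadBot", "FriendlyBot"):
    print(agent, blocker.can_fetch(agent, page))
```

As the text notes, this only stops compliant crawlers from fetching the page; it does not by itself keep the URL out of a search index.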

Significance for search engine optimization

Web crawlers like the Googlebot achieve their purpose of ranking websites in the SERP through crawling and indexing. They follow permanent links in the WWW and on websites. Per website, every crawler has a limited timeframe and budget available. Website owners can utilize the crawl budget of the Googlebot more effectively by optimizing the website structure, such as the navigation. URLs deemed more important due to a high number of sessions and trustworthy incoming links are usually crawled more often. There are certain measures for controlling crawlers like the Googlebot, such as the robots.txt file, which can provide concrete instructions not to crawl certain areas of a website, and the XML sitemap. The sitemap is stored in the Google Search Console and provides a clear overview of the structure of a website, making it clear which areas should be crawled and indexed.
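To make the XML sitemap concrete, the sketch below parses a small sitemap with the standard `xml.etree.ElementTree` module. The namespace is the one defined by the sitemaps.org protocol; the URLs, dates, and the `sitemap_entries` helper are made up for illustration.

```python
import xml.etree.ElementTree as ET

# Namespace used by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical sitemap for an imaginary site.
SITEMAP_XML = """\
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url>
    <loc>https://example.com/</loc>
    <lastmod>2024-01-15</lastmod>
  </url>
  <url>
    <loc>https://example.com/products</loc>
  </url>
</urlset>
"""


def sitemap_entries(xml_text):
    """Return (location, last-modified-or-None) pairs from a sitemap."""
    root = ET.fromstring(xml_text)
    entries = []
    for url in root.findall("sm:url", SITEMAP_NS):
        loc = url.findtext("sm:loc", namespaces=SITEMAP_NS)
        lastmod = url.findtext("sm:lastmod", namespaces=SITEMAP_NS)
        entries.append((loc, lastmod))
    return entries


for loc, lastmod in sitemap_entries(SITEMAP_XML):
    print(loc, lastmod)
```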

References

[1] Web Crawlers. Accessed on May 28, 2019

Web Links

Google Support – Googlebot

JavaScript Crawling with Ryte

A Study on Different Types of Web Crawlers | SpringerLink

Conference paper. First online: 28 August 2019

Part of the Advances in Intelligent Systems and Computing book series (AISC, volume 989)

Abstract

The World Wide Web is a global information medium through which as many people as possible explore information from around the world. A search engine is where Internet users search for the content they need, and the results are returned to them as websites, images, or videos. Web crawlers emerged to browse the web, gather and download pages relevant to user topics, and store them in a large repository that makes the search engine more efficient. These web crawlers are becoming more important and growing daily. This paper presents the various web crawler types and their architectures. Comparisons are analyzed between these crawlers.

Keywords

Web crawler, Focused crawler, Incremental crawler, Distributed crawler, Parallel crawler, Hidden web crawler

Acknowledgements

The authors express gratitude for the assistance provided by Accendere Knowledge Management Services Pvt. Ltd. in preparing the manuscripts. We also thank our mentors and faculty members who guided us throughout the research and helped us in achieving desired …

Copyright information

© Springer Nature Singapore Pte Ltd. 2020

Authors and Affiliations

P. G. Chaitra, V. Deepthi, K. P. Vidyashree, S. …

Department of Information Science and Engineering, Vidyavardhaka College of Engineering, Mysuru, India

Frequently Asked Questions about what are crawlers

What is a crawler?

A crawler is a computer program that automatically searches documents on the Web. Crawlers are primarily programmed for repetitive actions so that browsing is automated. Search engines use crawlers most frequently to browse the internet and build an index.

What are different types of crawlers?

Types of web crawler:

Focused web crawler: selectively searches for web pages relevant to specific user fields or topics.

Incremental web crawler

Distributed web crawler

Parallel web crawler

Hidden web crawler

What does a crawler look for?

Crawlers look at webpages and follow links on those pages, much like you would if you were browsing content on the web. They go from link to link and bring data about those webpages back to Google’s servers.