Webscraping Python

Is Web Scraping Illegal? Depends on What the Meaning of the Word Is

Depending on who you ask, web scraping can be loved or hated.

Web scraping has existed for a long time and, in its good form, it’s a key underpinning of the internet. “Good bots” enable, for example, search engines to index web content, price comparison services to save consumers money, and market researchers to gauge sentiment on social media.

“Bad bots,” however, fetch content from a website with the intent of using it for purposes outside the site owner’s control. Bad bots make up 20 percent of all web traffic and are used to conduct a variety of harmful activities, such as denial of service attacks, competitive data mining, online fraud, account hijacking, data theft, stealing of intellectual property, unauthorized vulnerability scans, spam and digital ad fraud.

So, is it Illegal to Scrape a Website?

So is it legal or illegal? Web scraping and crawling aren’t illegal by themselves. After all, you could scrape or crawl your own website, without a hitch.

Startups love it because it’s a cheap and powerful way to gather data without the need for partnerships. Big companies use web scrapers for their own gain but also don’t want others to use bots against them.

General opinion on the question matters less and less, because over the past 12 months it has become clear that the federal court system is cracking down harder than ever.

Let’s take a look back. Web scraping started in a legal grey area where the use of bots to scrape a website was simply a nuisance. Not much could be done about the practice until 2000, when eBay sought a preliminary injunction against Bidder’s Edge. eBay claimed that the use of bots on its site, against the will of the company, violated trespass to chattels law.

The court granted the injunction because users had to opt in and agree to the terms of service on the site, and because a large number of bots could be disruptive to eBay’s computer systems. The lawsuit was settled out of court, so it never came to a head, but the legal precedent was set.

In 2001 however, a travel agency sued a competitor who had “scraped” its prices from its Web site to help the rival set its own prices. The judge ruled that the fact that this scraping was not welcomed by the site’s owner was not sufficient to make it “unauthorized access” for the purpose of federal hacking laws.

Two years later the legal standing of eBay v. Bidder’s Edge was implicitly overruled in Intel v. Hamidi, a case interpreting California’s common law trespass to chattels. It was the wild west once again. Over the next several years the courts ruled time and time again that simply putting “do not scrape us” in your website terms of service was not enough to create a legally binding agreement. For you to enforce that term, a user must explicitly agree or consent to the terms. This left the field wide open for scrapers to do as they wished.

Fast forward a few years and you start seeing a shift in opinion. In 2009 Facebook won one of the first copyright suits against a web scraper. This laid the groundwork for numerous lawsuits that tie any web scraping to a direct copyright violation and very clear monetary damages. The most recent case is AP v. Meltwater, in which the court stripped away what is referred to as fair use on the internet.

Previously, for academic, personal, or information-aggregation purposes, people could rely on fair use and use web scrapers. The court gutted the fair use defense that companies had used to defend web scraping. The court determined that even small percentages, sometimes as little as 4.5% of the content, are significant enough to not fall under fair use. The only caveat the court made was based on the simple fact that this data was available for purchase. Had it not been, it is unclear how they would have ruled. Then a few months back the gauntlet was dropped.

Andrew Auernheimer was convicted of hacking based on the act of web scraping. Although the data was unprotected and publicly available via AT&T’s website, the fact that he wrote web scrapers to harvest that data en masse amounted to a “brute force attack”. He did not have to consent to terms of service to deploy his bots and conduct the web scraping. The data was not available for purchase. It wasn’t behind a login. He did not even financially gain from the aggregation of the data. Most importantly, it was buggy programming by AT&T that exposed this information in the first place. Yet Andrew was at fault. This isn’t just a civil suit anymore. This charge is a felony violation that is on par with hacking or denial of service attacks and carries up to a 15-year sentence for each charge.

In 2016, Congress passed its first legislation specifically to target bad bots — the Better Online Ticket Sales (BOTS) Act, which bans the use of software that circumvents security measures on ticket seller websites. Automated ticket scalping bots use several techniques to do their dirty work including web scraping that incorporates advanced business logic to identify scalping opportunities, input purchase details into shopping carts, and even resell inventory on secondary markets.

To counteract this type of activity, the BOTS Act:

Prohibits the circumvention of a security measure used to enforce ticket purchasing limits for an event with an attendance capacity of greater than 200 persons.

Prohibits the sale of an event ticket obtained through such a circumvention violation if the seller participated in, had the ability to control, or should have known about it.

Treats violations as unfair or deceptive acts under the Federal Trade Commission Act. The bill provides authority to the FTC and states to enforce against such violations.

In other words, if you’re a venue, organization or ticketing software platform, it is still on you to defend against this fraudulent activity during your major onsales.

The UK seems to have followed the US with its Digital Economy Act 2017, which achieved Royal Assent in April. The Act seeks to protect consumers in a number of ways in an increasingly digital society, including by “cracking down on ticket touts by making it a criminal offence for those that misuse bot technology to sweep up tickets and sell them at inflated prices in the secondary market.”

In the summer of 2017, LinkedIn sued hiQ Labs, a San Francisco-based startup. hiQ was scraping publicly available LinkedIn profiles to offer clients, according to its website, “a crystal ball that helps you determine skills gaps or turnover risks months ahead of time.”

You might find it unsettling to think that your public LinkedIn profile could be used against you by your employer.

Yet a judge on Aug. 14, 2017 decided this is okay. Judge Edward Chen of the U.S. District Court in San Francisco agreed with hiQ’s claim in a lawsuit that Microsoft-owned LinkedIn violated antitrust laws when it blocked the startup from accessing such data. He ordered LinkedIn to remove the barriers within 24 hours. LinkedIn has filed to appeal.

The ruling contradicts previous decisions clamping down on web scraping. And it opens a Pandora’s box of questions about social media user privacy and the right of businesses to protect themselves from data hijacking.

There’s also the matter of fairness. LinkedIn spent years creating something of real value. Why should it have to hand it over to the likes of hiQ — paying for the servers and bandwidth to host all that bot traffic on top of their own human users, just so hiQ can ride LinkedIn’s coattails?

I am in the business of blocking bots. Chen’s ruling has sent a chill through those of us in the cybersecurity industry devoted to fighting web-scraping bots.

I think there is a legitimate need for some companies to be able to prevent unwanted web scrapers from accessing their site.

In October of 2017, and as reported by Bloomberg, Ticketmaster sued Prestige Entertainment, claiming it used computer programs to illegally buy as many as 40 percent of the available seats for performances of “Hamilton” in New York and the majority of the tickets Ticketmaster had available for the Mayweather v. Pacquiao fight in Las Vegas two years ago.

Prestige continued to use the illegal bots even after it paid $3.35 million to settle New York Attorney General Eric Schneiderman’s probe into the ticket resale industry.

Under that deal, Prestige promised to abstain from using bots, Ticketmaster said in the complaint. Ticketmaster asked for unspecified compensatory and punitive damages and a court order to stop Prestige from using bots.

Are the existing laws too antiquated to deal with the problem? Should new legislation be introduced to provide more clarity? Most sites don’t have any web scraping protections in place. Do the companies have some burden to prevent web scraping?

As the courts try to further decide the legality of scraping, companies are still having their data stolen and the business logic of their websites abused. Instead of looking to the law to eventually solve this technology problem, it’s time to start solving it with anti-bot and anti-scraping technology today.


Web Scraping using Python – DataCamp

Web scraping is a term used to describe the use of a program or algorithm to extract and process large amounts of data from the web. Whether you are a data scientist, engineer, or anybody who analyzes large amounts of datasets, the ability to scrape data from the web is a useful skill to have. Let’s say you find data from the web, and there is no direct way to download it, web scraping using Python is a skill you can use to extract the data into a useful form that can be imported.

In this tutorial, you will learn about the following:

• Data extraction from the web using Python’s Beautiful Soup module

• Data manipulation and cleaning using Python’s Pandas library

• Data visualization using Python’s Matplotlib library

The dataset used in this tutorial was taken from a 10K race that took place in Hillsboro, OR in June 2017. Specifically, you will analyze the performance of the 10K runners and answer questions such as:

• What was the average finish time for the runners?

• Did the runners’ finish times follow a normal distribution?

• Were there any performance differences between males and females of various age groups?

Using Jupyter Notebook, you should start by importing the necessary modules (pandas, numpy, matplotlib.pyplot, seaborn). If you don’t have Jupyter Notebook installed, I recommend installing it using the Anaconda Python distribution, which is available on the internet. To easily display the plots, make sure to include the line %matplotlib inline as shown below.

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

%matplotlib inline

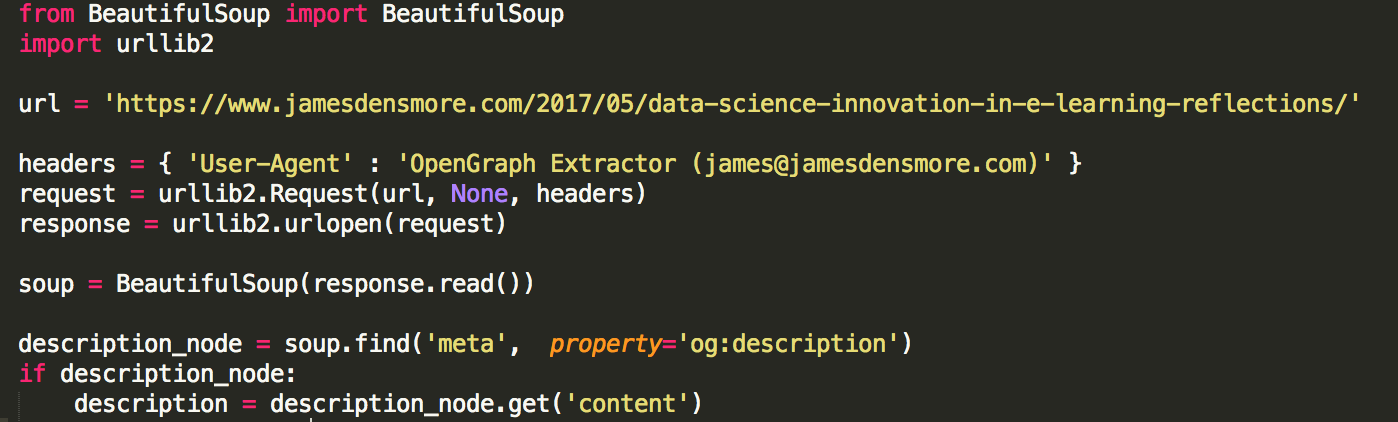

To perform web scraping, you should also import the libraries shown below. The urllib.request module is used to open URLs. The Beautiful Soup package is used to extract data from html files. The Beautiful Soup library’s name is bs4, which stands for Beautiful Soup, version 4.

from urllib.request import urlopen

from bs4 import BeautifulSoup

After importing necessary modules, you should specify the URL containing the dataset and pass it to urlopen() to get the html of the page.

url = ”

html = urlopen(url)

Getting the html of the page is just the first step. Next step is to create a Beautiful Soup object from the html. This is done by passing the html to the BeautifulSoup() function. The Beautiful Soup package is used to parse the html, that is, take the raw html text and break it into Python objects. The second argument ‘lxml’ is the html parser whose details you do not need to worry about at this point.

soup = BeautifulSoup(html, 'lxml')

type(soup)

bs4.BeautifulSoup

The soup object allows you to extract interesting information about the website you’re scraping such as getting the title of the page as shown below.

# Get the title

title = soup.title

print(title)

You can also get the text of the webpage and quickly print it out to check if it is what you expect.

# Print out the text

text = soup.get_text()

#print(soup.text)
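Since the page URL is not shown here, the same steps can be tried on an inline HTML string. The following is a minimal sketch under that assumption: SAMPLE_HTML, page_title and page_text are made-up names, the markup is invented, and Python's built-in "html.parser" is used in place of 'lxml' so that no extra parser is required.

```python
from bs4 import BeautifulSoup

# Hypothetical stand-in for the html returned by urlopen(url).
SAMPLE_HTML = """
<html>
  <head><title>Race Results</title></head>
  <body>
    <h1>10K Results</h1>
    <p>Finishers: 3</p>
  </body>
</html>
"""

# "html.parser" ships with Python; the tutorial itself uses 'lxml'.
soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

# Text inside the <title> tag, without the surrounding tag.
page_title = soup.title.string

# All text on the page with tags removed, pieces joined by single spaces.
page_text = soup.get_text(" ", strip=True)

print(page_title)
print(page_text)
```

Note that soup.title returns the whole tag, while soup.title.string returns only the text inside it.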

You can view the html of the webpage by right-clicking anywhere on the webpage and selecting “Inspect.” This is what the result looks like.

You can use the find_all() method of soup to extract useful html tags within a webpage. Examples of useful tags include <a> for hyperlinks, <table> for tables, <tr> for table rows, <th> for table headers, and <td> for table cells. The code below shows how to extract all the hyperlinks within the webpage.

soup.find_all('a')

[5K,

Individual Results,

Team Results,

[email protected],

Results,

,

,

Huber Timing,

]

As you can see from the output above, html tags sometimes come with attributes such as class, src, etc. These attributes provide additional information about html elements. You can use a for loop and the get("href") method to extract and print out only hyperlinks.

all_links = soup.find_all("a")

for link in all_links:

    print(link.get("href"))

/results/2017GPTR

#individual

#team

mailto:[email protected]

#tabs-1

None
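The href values printed above are a mix of site-relative paths, in-page fragments, and None for anchors without an href. One possible follow-up, sketched here with the standard library only, is to skip the None entries and resolve the rest against the page address. BASE_URL is a hypothetical address, since the real URL is not shown, and raw_hrefs simply repeats a few values of the kind printed above.

```python
from urllib.parse import urljoin

# Hypothetical address of the scraped page (an assumption for illustration).
BASE_URL = "https://example.com/results/2017GPTR"

# Values of the kind link.get("href") returns, including None for <a> tags
# that have no href attribute.
raw_hrefs = ["/results/2017GPTR", "#individual", "#team", "#tabs-1", None]

absolute_links = [
    urljoin(BASE_URL, href)   # resolve relative paths and fragments
    for href in raw_hrefs
    if href is not None       # skip anchors without an href
]

for link in absolute_links:
    print(link)
```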

To print out table rows only, pass the 'tr' argument in soup.find_all().

# Print the first 10 rows for sanity check

rows = soup.find_all('tr')

print(rows[:10])

[

,

,

,

,

]

The goal of this tutorial is to take a table from a webpage and convert it into a dataframe for easier manipulation using Python. To get there, you should get all table rows in list form first and then convert that list into a dataframe. Below is a for loop that iterates through table rows and prints out the cells of the rows.

for row in rows:

    row_td = row.find_all('td')

print(row_td)

type(row_td)

[

,

,

,

,

,

,

]

bs4.element.ResultSet

The output above shows that each row is printed with html tags embedded in each row. This is not what you want. You can remove the html tags using Beautiful Soup or regular expressions.

The easiest way to remove html tags is to use Beautiful Soup, and it takes just one line of code to do this. Pass the string of interest into BeautifulSoup() and use the get_text() method to extract the text without html tags.

str_cells = str(row_td)

cleantext = BeautifulSoup(str_cells, "lxml").get_text()

print(cleantext)

[14TH, INTEL TEAM M, 04:43:23, 00:58:59 – DANIELLE CASILLAS, 01:02:06 – RAMYA MERUVA, 01:17:06 – PALLAVI J SHINDE, 01:25:11 – NALINI MURARI]
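As a variation on the approach above (not the tutorial's own method), get_text() can be called on each cell instead of on the stringified list. This keeps one clean string per column and avoids the square brackets and comma splitting dealt with later. The sketch below assumes a small made-up table (SAMPLE_TABLE) and uses the built-in "html.parser".

```python
from bs4 import BeautifulSoup

# Hypothetical two-row table standing in for the race results page.
SAMPLE_TABLE = """
<table>
  <tr><th>Place</th><th>Name</th><th>Chip Time</th></tr>
  <tr><td>1</td><td>RUNNER ONE</td><td>00:36:21</td></tr>
  <tr><td>2</td><td>RUNNER TWO</td><td>00:37:05</td></tr>
</table>
"""

soup = BeautifulSoup(SAMPLE_TABLE, "html.parser")

table_rows = []
for row in soup.find_all("tr"):
    cells = row.find_all("td")
    if not cells:
        # Header rows contain <th> cells only, so there is nothing to collect.
        continue
    # One stripped string per cell, in column order.
    table_rows.append([cell.get_text(strip=True) for cell in cells])

print(table_rows)
```

A list of lists like table_rows can be passed straight to pd.DataFrame(), with the header texts supplied as column names.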

Using regular expressions is highly discouraged since it requires several lines of code and one can easily make mistakes. It requires importing the re (for regular expressions) module. The code below shows how to build a regular expression that finds all the characters inside the <td> html tags and replaces them with an empty string for each table row.

First, you compile a regular expression by passing a string to match to re.compile(). In the pattern '<.*?>', the dot, star, and question mark (.*?) sit between an opening and a closing angle bracket, so the pattern matches an opening angle bracket followed by anything and then a closing angle bracket. It matches text in a non-greedy fashion, that is, it matches the shortest possible string. If you omit the question mark, it will match all the text between the first opening angle bracket and the last closing angle bracket. After compiling a regular expression, you can use the re.sub() method to find all the substrings where the regular expression matches and replace them with an empty string. The full code below generates an empty list, extracts the text in between html tags for each row, and appends it to the assigned list.

import re

list_rows = []

for row in rows:

    cells = row.find_all('td')

    str_cells = str(cells)

    clean = re.compile('<.*?>')

    clean2 = re.sub(clean, '', str_cells)

    list_rows.append(clean2)

print(clean2)

type(clean2)

str
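The difference between the non-greedy and greedy forms of the pattern is easiest to see on a short string. The sketch below uses only the re module. The sample string row_html is made up to resemble two table cells.

```python
import re

# Made-up fragment resembling two table cells.
row_html = "<td>14TH</td><td>INTEL TEAM M</td>"

non_greedy = re.compile(r"<.*?>")   # shortest match: one tag at a time
greedy = re.compile(r"<.*>")        # longest match: first "<" to last ">"

cleaned_non_greedy = non_greedy.sub("", row_html)
cleaned_greedy = greedy.sub("", row_html)

print(repr(cleaned_non_greedy))
print(repr(cleaned_greedy))
```

The non-greedy pattern removes each tag and keeps the cell text, whereas the greedy pattern swallows everything from the first opening bracket to the last closing bracket and leaves an empty string.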

The next step is to convert the list into a dataframe and get a quick view of the first 10 rows using Pandas.

df = pd.DataFrame(list_rows)

df.head(10)

0    [Finishers:, 577]
1    [Male:, 414]
2    [Female:, 163]
3    []
4    [1, 814, JARED WILSON, M, TIGARD, OR, 00:36:21…
5    [2, 573, NATHAN A SUSTERSIC, M, PORTLAND, OR,…
6    [3, 687, FRANCISCO MAYA, M, PORTLAND, OR, 00:3…
7    [4, 623, PAUL MORROW, M, BEAVERTON, OR, 00:38:…
8    [5, 569, DEREK G OSBORNE, M, HILLSBORO, OR, 00…
9    [6, 642, JONATHON TRAN, M, PORTLAND, OR, 00:39…

The dataframe is not in the format we want. To clean it up, you should split the “0” column into multiple columns at the comma position. This is accomplished by using the str.split() method.

df1 = df[0].str.split(',', expand=True)

This looks much better, but there is still work to do. The dataframe has unwanted square brackets surrounding each row. You can use the strip() method to remove the opening square bracket on column “0.”

df1[0] = df1[0].str.strip('[')
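To see what the split and strip steps do, here is a small sketch on two shortened strings in the same shape as the scraped rows. The names raw and parts are illustrative, and the rows are cut down to four fields for brevity.

```python
import pandas as pd

# Shortened, illustrative rows in the same shape as the scraped strings.
raw = pd.Series(["[1, 814, JARED WILSON, M]", "[2, 573, NATHAN A SUSTERSIC, M]"])

# One column per comma-separated field; columns are labelled 0, 1, 2, ...
parts = raw.str.split(",", expand=True)

parts[0] = parts[0].str.strip("[")   # drop the opening bracket
parts[3] = parts[3].str.strip("]")   # drop the closing bracket

print(parts)
print(repr(parts.loc[0, 2]))         # the split leaves a leading space
```

Because the split is on the comma alone, every field after the first keeps its leading space. That is why values such as ' M' and column names such as ' Chip Time' carry a leading space further on.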

The table is missing table headers. You can use the find_all() method to get the table headers.

col_labels = soup.find_all('th')

Similar to table rows, you can use Beautiful Soup to extract text in between html tags for table headers.

all_header = []

col_str = str(col_labels)

cleantext2 = BeautifulSoup(col_str, "lxml").get_text()

all_header.append(cleantext2)

print(all_header)

['[Place, Bib, Name, Gender, City, State, Chip Time, Chip Pace, Gender Place, Age Group, Age Group Place, Time to Start, Gun Time, Team]']

You can then convert the list of headers into a pandas dataframe.

df2 = pd.DataFrame(all_header)

df2.head()

[Place, Bib, Name, Gender, City, State, Chip T…

Similarly, you can split column “0” into multiple columns at the comma position for all rows.

df3 = df2[0].str.split(',', expand=True)

The two dataframes can be concatenated into one using the concat() method as illustrated below.

frames = [df3, df1]

df4 = pd.concat(frames)

The code below shows how to assign the first row to be the table header.

df5 = df4.rename(columns=df4.iloc[0])

At this point, the table is almost properly formatted. For analysis, you can start by getting an overview of the data with df5.info() and df5.shape, whose output is shown below.

Int64Index: 597 entries, 0 to 595

Data columns (total 14 columns):

[Place 597 non-null object

Bib 596 non-null object

Name 593 non-null object

Gender 593 non-null object

City 593 non-null object

State 593 non-null object

Chip Time 593 non-null object

Chip Pace 578 non-null object

Gender Place 578 non-null object

Age Group 578 non-null object

Age Group Place 578 non-null object

Time to Start 578 non-null object

Gun Time 578 non-null object

Team] 578 non-null object

dtypes: object(14)

memory usage: 70.0+ KB

(597, 14)

The table has 597 rows and 14 columns. You can drop all rows with any missing values.

df6 = df5.dropna(axis=0, how='any')

Also, notice how the table header is replicated as the first row in df5. It can be dropped using the following line of code.

df7 = df6.drop(df6.index[0])

You can perform more data cleaning by renaming the '[Place' and ' Team]' columns. Python is very picky about spaces. Make sure you include the space after the opening quotation mark in ' Team]'.

df7.rename(columns={'[Place': 'Place'}, inplace=True)

df7.rename(columns={' Team]': 'Team'}, inplace=True)

The final data cleaning step involves removing the closing bracket for cells in the “Team” column.

df7['Team'] = df7['Team'].str.strip(']')

It took a while to get here, but at this point, the dataframe is in the desired format. Now you can move on to the exciting part and start plotting the data and computing interesting statistics.

The first question to answer is, what was the average finish time (in minutes) for the runners? You need to convert the column “Chip Time” into just minutes. One way to do this is to convert the column to a list first for manipulation.

time_list = df7[' Chip Time'].tolist()

# You can use a for loop to convert ‘Chip Time’ to minutes

time_mins = []

for i in time_list:

    h, m, s = i.split(':')

    math = (int(h) * 3600 + int(m) * 60 + int(s))/60

    time_mins.append(math)

#print(time_mins)
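The conversion in the loop can also be wrapped in a small helper, which makes it easy to check against known values. This is a sketch rather than the tutorial's code: the function name chip_time_to_minutes is made up, and the handling of a two-part 'MM:SS' value is an added assumption that the original loop does not make.

```python
def chip_time_to_minutes(chip_time: str) -> float:
    """Convert an 'HH:MM:SS' (or, as an assumption, 'MM:SS') string to minutes."""
    parts = [int(p) for p in chip_time.strip().split(":")]
    if len(parts) == 2:
        # Treat a two-part value as minutes and seconds with zero hours.
        parts = [0] + parts
    if len(parts) != 3:
        raise ValueError(f"unexpected time format: {chip_time!r}")
    hours, minutes, seconds = parts
    return (hours * 3600 + minutes * 60 + seconds) / 60


# ' 00:36:21' mimics a scraped value that still has its leading space.
for value in [" 00:36:21", "01:41:18", "36:21"]:
    print(value, "->", round(chip_time_to_minutes(value), 2))
```

The strip() call matters here because values split from the scraped rows keep a leading space.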

The next step is to convert the list back into a dataframe and make a new column (“Runner_mins”) for runner chip times expressed in just minutes.

df7[‘Runner_mins’] = time_mins

The code below shows how to calculate statistics for numeric columns only in the dataframe.

df7.describe(include=[np.number])

       Runner_mins
count   577.000000
mean     60.035933
std      11.970623
min      36.350000
25%      51.000000
50%      59.016667
75%      67.266667
max     101.300000

Interestingly, the average chip time for all runners was ~60 mins. The fastest 10K runner finished in 36.35 mins, and the slowest runner finished in 101.30 mins.

A boxplot is another useful tool to visualize summary statistics (maximum, minimum, median, first quartile, third quartile, including outliers). Below are data summary statistics for the runners shown in a boxplot. For data visualization, it is convenient to first import parameters from the pylab module that comes with matplotlib and set the same size for all figures to avoid doing it for each figure.

from pylab import rcParams

rcParams['figure.figsize'] = 15, 5

df7.boxplot(column='Runner_mins')

plt.grid(True, axis='y')

plt.ylabel('Chip Time')

plt.xticks([1], ['Runners'])


The second question to answer is: Did the runners’ finish times follow a normal distribution?

Below is a distribution plot of runners’ chip times plotted using the seaborn library. The distribution looks almost normal.

x = df7[‘Runner_mins’]

ax = sns.distplot(x, hist=True, kde=True, rug=False, color='m', bins=25, hist_kws={'edgecolor':'black'})

The third question deals with whether there were any performance differences between males and females of various age groups. Below is a distribution plot of chip times for males and females.

f_fuko = df7.loc[df7[' Gender']==' F']['Runner_mins']

m_fuko = df7.loc[df7[' Gender']==' M']['Runner_mins']

sns.distplot(f_fuko, hist=True, kde=True, rug=False, hist_kws={'edgecolor':'black'}, label='Female')

sns.distplot(m_fuko, hist=False, kde=True, rug=False, hist_kws={'edgecolor':'black'}, label='Male')


The distribution indicates that females were slower than males on average. You can use the groupby() method to compute summary statistics for males and females separately as shown below.

g_stats = df7.groupby(" Gender", as_index=True).describe()

print(g_stats)

        Runner_mins
Gender  count  mean       std        min        25%        50%        75%        max
F       163.0  66.119223  12.184440  43.766667  58.758333  64.616667  72.058333  101.300000
M       414.0  57.640821  11.011857  36.350000  49.395833  55.791667  64.804167   98.516667
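If only a few of these statistics are needed, groupby() can be combined with agg() instead of describe(). The sketch below runs on a tiny made-up dataframe (toy), not on the race data, so the numbers are illustrative only. The column name ' Gender' keeps the leading space used in this tutorial.

```python
import pandas as pd

# Made-up finish times, for illustration only; this is not the race data.
toy = pd.DataFrame({
    " Gender": [" F", " F", " M", " M"],
    "Runner_mins": [60.0, 70.0, 50.0, 58.0],
})

# Mean finish time per gender.
mean_by_gender = toy.groupby(" Gender")["Runner_mins"].mean()

# Several named statistics at once, one row per gender.
stats_by_gender = toy.groupby(" Gender")["Runner_mins"].agg(["count", "mean", "min", "max"])

print(mean_by_gender)
print(stats_by_gender)
```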

The average chip time for all females and males was ~66 mins and ~58 mins, respectively. Below is a side-by-side boxplot comparison of male and female finish times.

df7.boxplot(column='Runner_mins', by=' Gender')

plt.suptitle("")


In this tutorial, you performed web scraping using Python. You used the Beautiful Soup library to parse html data and convert it into a form that can be used for analysis. You performed cleaning of the data in Python and created useful plots (box plots, bar plots, and distribution plots) to reveal interesting trends using Python’s matplotlib and seaborn libraries. After this tutorial, you should be able to use Python to easily scrape data from the web, apply cleaning techniques and extract useful insights from the data.

If you would like to learn more about Python, take DataCamp’s free Intro to Python for Data Science course.

Python Library For Web Scraping – Analytics Vidhya

Take the Power of Web Scraping in your Hands

The phrase “we have enough data” does not exist in data science parlance. I have never encountered anyone who willingly said no to collecting more data for their machine learning or deep learning project. And there are often situations when the data you have simply isn’t enough.

That’s when the power of web scraping comes to the fore. It is a powerful technique that any analyst or data scientist should possess and will hold you in good stead in the industry (and when you’re sitting for interviews!).

There are a whole host of Python libraries available to perform web scraping. But how do you decide which one to choose for your particular project? Which Python library holds the most flexibility? I will aim to answer these questions here, through the lens of five popular Python libraries for web scraping that I feel every enthusiast should know about.

Python Libraries for Web Scraping

Web scraping is the process of extracting structured and unstructured data from the web with the help of programs and exporting into a useful format. If you want to learn more about web scraping, here are a couple of resources to get you started:

Hands-On Introduction to Web Scraping in Python: A Powerful Way to Extract Data for your Data Science Project

FREE Course – Introduction to Web Scraping using Python

Alright – let’s see the web scraping libraries in Python!

1. Requests (HTTP for Humans) Library for Web Scraping

Let’s start with the most basic Python library for web scraping. ‘Requests’ lets us make HTTP requests to the website’s server for retrieving the data on its page. Getting the HTML content of a web page is the first and foremost step of web scraping.

Requests is a Python library used for making various types of HTTP requests like GET, POST, etc. Because of its simplicity and ease of use, it comes with the motto of HTTP for Humans.

I would say this is the most basic yet essential library for web scraping. However, the Requests library does not parse the HTML data retrieved. If we want to do that, we require libraries like lxml and Beautiful Soup (we’ll cover them further down in this article).
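Here is a minimal sketch of what working with Requests can look like. The endpoint, query string, User-Agent value and the fetch_html helper are all hypothetical. The first part only builds a GET request without sending it, so it runs offline. The helper would perform a real network call and is left uncalled here.

```python
import requests

# Hypothetical endpoint and query, used only to show how a request is built.
request = requests.Request(
    "GET",
    "https://example.com/search",
    params={"q": "web scraping"},
    headers={"User-Agent": "example-scraper/0.1"},
)
prepared = request.prepare()   # builds the final URL and headers; nothing is sent

print(prepared.method)
print(prepared.url)


def fetch_html(url: str) -> str:
    """Download a page and return its HTML text (performs a network call)."""
    response = requests.get(url, timeout=10)
    response.raise_for_status()   # raise an error for 4xx/5xx responses
    return response.text
```

The string returned by a helper like fetch_html is raw HTML. Parsing it is left to a library such as lxml or Beautiful Soup.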

Let’s take a look at the advantages and disadvantages of the Requests Python library.

Advantages:

Simple

Basic/Digest Authentication

International Domains and URLs

Chunked Requests

HTTP(S) Proxy Support

Disadvantages:

Retrieves only static content of a page

Can’t be used for parsing HTML

Can’t handle websites made purely with JavaScript

2. lxml Library for Web Scraping

We know the requests library cannot parse the HTML retrieved from a web page. Therefore, we require lxml, a high-performance, blazingly fast, production-quality HTML and XML parsing Python library.

It combines the speed and power of Element trees with the simplicity of Python. It works well when we’re aiming to scrape large datasets. The combination of requests and lxml is very common in web scraping. It also allows you to extract data from HTML using XPath and CSS selectors.
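As a rough illustration of XPath extraction with lxml, the sketch below parses a small made-up table (SAMPLE_TABLE) from a string. The markup and variable names are assumptions for the example. In practice the string would typically be the HTML returned by Requests.

```python
from lxml import html

# Hypothetical markup standing in for a downloaded page.
SAMPLE_TABLE = (
    "<table>"
    "<tr><th>Place</th><th>Name</th></tr>"
    "<tr><td>1</td><td>RUNNER ONE</td></tr>"
    "<tr><td>2</td><td>RUNNER TWO</td></tr>"
    "</table>"
)

tree = html.fromstring(SAMPLE_TABLE)

# XPath: the text of the second <td> in every row.
names = tree.xpath("//tr/td[2]/text()")

# XPath: every row that has <td> cells, turned into a list of cell texts.
rows = [
    [cell.text_content().strip() for cell in row.xpath("./td")]
    for row in tree.xpath("//tr[td]")
]

print(names)
print(rows)
```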

Let’s take a look at the advantages and disadvantages of the lxml Python library.

Advantages:

Faster than most of the parsers out there

Light-weight

Uses element trees

Pythonic API

Disadvantages:

Does not work well with poorly designed HTML

The official documentation is not very beginner-friendly

3. Beautiful Soup Library for Web Scraping

BeautifulSoup is perhaps the most widely used Python library for web scraping. It creates a parse tree for parsing HTML and XML documents. Beautiful Soup automatically converts incoming documents to Unicode and outgoing documents to UTF-8.

One of the primary reasons the Beautiful Soup library is so popular is that it is easier to work with and well suited for beginners. We can also combine Beautiful Soup with other parsers like lxml. But all this ease of use comes with a cost – it is slower than lxml. Even while using lxml as a parser, it is slower than pure lxml.

One major advantage of the Beautiful Soup library is that it works very well with poorly designed HTML and has a lot of functions. The combination of Beautiful Soup and Requests is quite common in the industry.

Advantages:

Requires a few lines of code

Great documentation

Easy to learn for beginners

Robust

Automatic encoding detection

Disadvantages:

Slower than lxml

If you want to learn how to scrape web pages using Beautiful Soup, this tutorial is for you:

Beginner’s guide to Web Scraping in Python using Beautiful Soup

4. Selenium Library for Web Scraping

There is a limitation to all the Python libraries we have discussed so far – we cannot easily scrape data from dynamically populated websites. It happens because sometimes the data present on the page is loaded through JavaScript. In simple words, if the page is not static, then the Python libraries mentioned earlier struggle to scrape the data from it.

That’s where Selenium comes into play.

Selenium is a Python library originally made for automated testing of web applications. Although it wasn’t made for web scraping originally, the data science community turned that around pretty quickly!

It is a web driver made for rendering web pages, but this functionality makes it very special. Where other libraries are not capable of running JavaScript, Selenium excels. It can make clicks on a page, fill forms, scroll the page and do many more things.

This ability to run JavaScript in a web page gives Selenium the power to scrape dynamically populated web pages. But there is a trade-off here. It loads and runs JavaScript for every page, which makes it slower and not suitable for large scale projects.

If time and speed are not a concern for you, then you can definitely use Selenium.

Advantages:

Beginner-friendly

Automated web scraping

Can scrape dynamically populated web pages

Automates web browsers

Can do anything on a web page similar to a person

Disadvantages:

Very slow

Difficult to set up

High CPU and memory usage

Not ideal for large projects

Here is a wonderful article to learn how Selenium works (including Python code):

Data Science Project: Scraping YouTube Data using Python and Selenium to Classify Videos

5. Scrapy

Now it’s time to introduce you to the BOSS of Python web scraping libraries – Scrapy!

Scrapy is not just a library; it is an entire web scraping framework created by the co-founders of Scrapinghub – Pablo Hoffman and Shane Evans. It is a full-fledged web scraping solution that does all the heavy lifting for you.

Scrapy provides spider bots that can crawl multiple websites and extract the data. With Scrapy, you can create your spider bots, host them on Scrapy Hub, or as an API. It allows you to create fully-functional spiders in a matter of a few minutes. You can also create pipelines using Scrapy.

The best thing about Scrapy is that it’s asynchronous. It can make multiple HTTP requests simultaneously. This saves us a lot of time and increases our efficiency (and don’t we all strive for that?).
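To get a feel for why asynchronous requests save time, here is a small sketch that uses Python's asyncio rather than Scrapy itself (Scrapy is built on Twisted and has its own API, so this is a swapped-in illustration of the idea, not Scrapy code). The fake_fetch coroutine, the URLs and the 0.1-second delay are all made up. No real network traffic is involved.

```python
import asyncio
import time


async def fake_fetch(url: str, delay: float = 0.1) -> str:
    """Stand-in for a network request: waits, then returns a fake body."""
    await asyncio.sleep(delay)
    return f"<html>content of {url}</html>"


async def crawl(urls):
    # gather() runs the coroutines concurrently and keeps the input order.
    return await asyncio.gather(*(fake_fetch(url) for url in urls))


urls = [f"https://example.com/page/{i}" for i in range(3)]

start = time.perf_counter()
pages = asyncio.run(crawl(urls))
elapsed = time.perf_counter() - start

print(len(pages), "pages fetched")
print(f"elapsed: {elapsed:.2f}s")   # close to one delay, not three
```

Because the three simulated requests wait at the same time, the total time is roughly one delay instead of the sum of all three.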

You can also add plugins to Scrapy to enhance its functionality. Although Scrapy is not able to handle JavaScript like selenium, you can pair it with a library called Splash, a light-weight web browser. With Splash, Scrapy can even extract data from dynamic websites.

Advantages:

Asynchronous

Excellent documentation

Various plugins

Create custom pipelines and middlewares

Low CPU and memory usage

Well designed architecture

A plethora of available online resources

Disadvantages:

Steep learning curve

Overkill for easy jobs

Not beginner-friendly

If you want to learn Scrapy, which I highly recommend you do, you should read this tutorial:

Web Scraping in Python using Scrapy (with multiple examples)

What’s Next?

I personally find these Python libraries extremely useful for my requirements. I would love to hear your thoughts on these libraries or if you use any other Python library – let me know in the comment section below.

If you liked the article, do share it along in your network and keep practicing these techniques!
