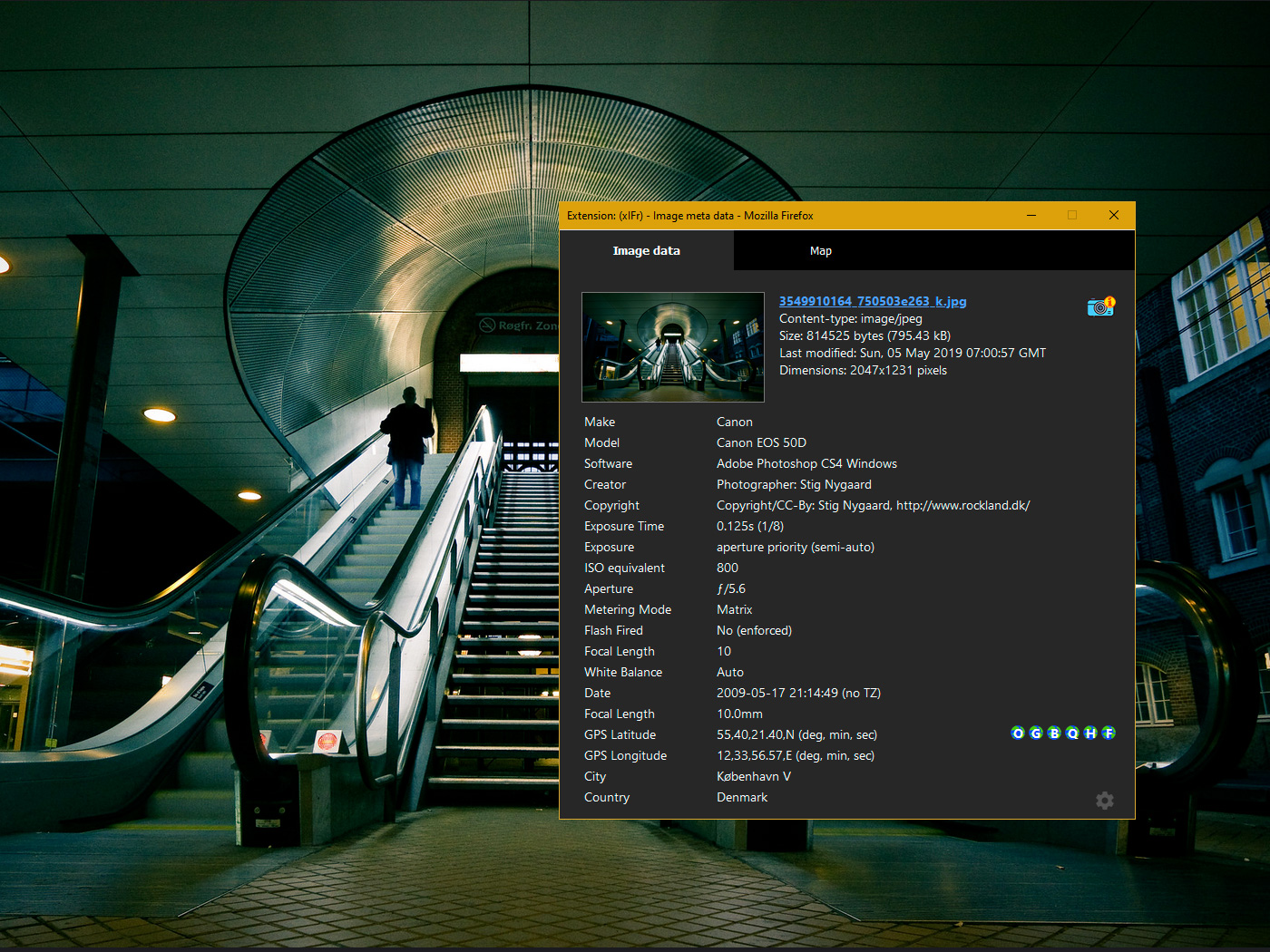

Web Scraper Extension Firefox

Web Scraper – Get this Extension for Firefox (en-US)

Web Scraper is built on a modular selector engine. When setting up a scraper (Sitemap) you can use different types of selectors to tailor the scraper for a specific site. Here are some of the things that you can do with selectors:

Website navigation with Link Selectors

Multiple record extraction from a single page with Element Selectors

Data extraction with Text, Element Attribute, Image, Table Selectors

Additional data loading with Element click, Element scroll down Selectors

This add-on needs to:

Extend developer tools to access your data in open tabs

Display notifications to you

Access browser tabs

Store unlimited amount of client-side data

Access browser activity during navigation

Access your data for all websites

Fixed element selection in websites that were blocking it with CSP.

Top 30 Free Web Scraping Software in 2021 | Octoparse

Web Scraping & Web Scraping Software

If you are a total newbie in this area, you may find more sources about web scraping at the end of this blog. Simply put, web scraping (also termed web data extraction, screen scraping, or web harvesting) is a technique for extracting data from websites. It turns web data scattered across pages into structured data that can be stored on your local computer in a spreadsheet or transmitted to a database.
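To make "scattered web data into structured data" concrete, here is a minimal sketch that uses only the Python standard library. The HTML fragment, the class names (`product`, `name`, `price`) and the helper `html_to_csv` are all made up for illustration; a real scraper would first download the page and would have to match that page's actual markup.

```python
import csv
import io
from html.parser import HTMLParser

# Hypothetical page fragment; a real scraper would download this HTML first.
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name">Kettle</span><span class="price">24.99</span></li>
  <li class="product"><span class="name">Toaster</span><span class="price">31.50</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Collects one dict per <li class="product"> element."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._field = None  # name of the <span> currently being read, if any

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "li" and css_class == "product":
            self.rows.append({})  # start a new record
        elif tag == "span" and css_class in ("name", "price") and self.rows:
            self._field = css_class

    def handle_data(self, data):
        if self._field is not None:
            self.rows[-1][self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None


def html_to_csv(html):
    """Parse the HTML and return the extracted records as CSV text."""
    parser = ProductParser()
    parser.feed(html)
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=["name", "price"])
    writer.writeheader()
    writer.writerows(parser.rows)
    return out.getvalue()


print(html_to_csv(SAMPLE_HTML))
```

The CSV text can be written to a file and opened in a spreadsheet, or the same rows could be inserted into a database instead.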

Building a web scraper can be difficult for people who don’t know anything about coding. Luckily, there is web scraping software available for people with or without programming skills. And if you’re a data scientist or a researcher, a web scraper can make your data collection considerably more efficient.

Here is a list of 30 of the most popular web scraping tools. I have grouped them under the umbrella of software, although they range from open-source libraries and browser extensions to desktop software and more.

Top 30 Web Scraping Software

Beautiful Soup

Octoparse

Mozenda

Parsehub

Crawlmonster

Connotate

Common Crawl

Crawly

Content Grabber

Diffbot

Easy Web Extract

FMiner

Scrapy

Helium Scraper

Scrapinghub

Screen-Scraper

ScrapeHero

UiPath

Web Content Extractor

WebHarvy

Web

Web Sundew

Winautomation

Web Robots

1. Beautiful Soup

Who is this for: Developers with solid programming skills who want to build their own web scraper or web crawler.

Why you should use it: Beautiful Soup is an open-source Python library designed for scraping HTML and XML files. It is one of the most widely used Python parsers, and it works best in the hands of users who have programming skills.

2. Octoparse

Who is this for: Professionals without coding skills who need to scrape web data at scale. The web scraping software is widely used among online sellers, marketers, researchers and data analysts.

Why you should use it: Octoparse is a free-for-life SaaS web data platform. With its intuitive interface, you can scrape web data with a few points and clicks. It also provides ready-to-use web scraping templates to extract data from Amazon, eBay, Twitter, BestBuy, etc. If you are looking for a one-stop data solution, Octoparse also provides a web data service.

3.

Who is this for: Enterprises with a budget that are looking for an integration solution for web data.

Why you should use it: This is a SaaS web data platform. It provides a web scraping solution that allows you to scrape data from websites and organize it into data sets. You can integrate the web data into analytics tools for sales and marketing to gain insight.

4. Mozenda

Who is this for: Enterprises and businesses with scalable data needs.

Why you should use it: Mozenda provides a data extraction tool that makes it easy to capture content from the web. It also provides data visualization services, which can reduce the need to hire a data analyst, and the Mozenda team offers services to customize integration options.

5. Parsehub

Who is this for: Data analysts, marketers, and researchers who lack programming skills.

Why you should use it: ParseHub is a visual web scraping tool to get data from the web. You can extract the data by clicking any fields on the website. It also has an IP rotation function that helps change your IP address when you encounter aggressive websites with anti-scraping techniques.
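As a rough illustration of what IP rotation means, the sketch below cycles through a pool of proxy addresses so that consecutive requests would leave from different IPs. This is not ParseHub's implementation: the proxy addresses (taken from a documentation-reserved range), the `ProxyRotator` class and `plan_requests` are hypothetical, and no network request is actually made.

```python
from itertools import cycle

# Hypothetical proxy endpoints (addresses from a documentation-reserved range).
PROXIES = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]


class ProxyRotator:
    """Hands out proxies in round-robin order."""

    def __init__(self, proxies):
        if not proxies:
            raise ValueError("at least one proxy is required")
        self._pool = cycle(proxies)

    def next_proxy(self):
        return next(self._pool)


def plan_requests(urls, rotator):
    """Pair each URL with the proxy that would be used to fetch it."""
    return [(url, rotator.next_proxy()) for url in urls]


if __name__ == "__main__":
    pages = [f"https://example.com/page/{n}" for n in range(1, 5)]
    for url, proxy in plan_requests(pages, ProxyRotator(PROXIES)):
        print(url, "via", proxy)
```

Round-robin is the simplest policy; a real tool might also drop proxies that get blocked or pick them at random.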

6. Crawlmonster

Who is this for: SEO specialists and marketers.

Why you should use it: CrawlMonster is a free web scraping tool. It enables you to scan websites and analyze your website content, source code, page status, etc.

7. ProWebScraper

Who is this for: Enterprises looking for an integration solution for web data.

Why you should use it: Connotate has been working together with, which provides a solution for automating web data scraping. It provides web data service that helps you to scrape, collect and handle the data.

8. Common Crawl

Who is this for: Researchers, students, and professors.

Why you should use it: Common Crawl was founded on the idea of open source in the digital age. It provides open datasets of crawled websites, which contain raw web page data, extracted metadata, and text extractions.

9. Crawly

Who is this for: People with basic data requirements.

Why you should use it: Crawly provides an automatic web scraping service that scrapes a website and turns unstructured data into structured formats like JSON and CSV. It can extract a limited set of elements within seconds, including Title, Text, HTML, Comments, Date, Entity Tags, Author, Image URLs, Videos, Publisher and Country.

10. Content Grabber

Who is this for: Python developers who are proficient at programming.

Why you should use it: Content Grabber is a web scraping tool targeted at enterprises. You can create your own web scraping agents with its integrated 3rd party tools. It is very flexible in dealing with complex websites and data extraction.

11. Diffbot

Who is this for: Developers and businesses.

Why you should use it: Diffbot is a web scraping tool that uses machine learning algorithms and public APIs to extract data from web pages. You can use Diffbot for competitor analysis, price monitoring, consumer behavior analysis and more.

12.

Who is this for: People with programming and scraping skills.

Why you should use it: This is a browser-based web crawler. It provides three types of robots: Extractor, Crawler, and Pipes. Pipes has a Master robot feature where one robot can control multiple tasks. It supports many third-party services (captcha solvers, cloud storage, etc.) which you can easily integrate into your robots.

13.

Who is this for: Data analysts, marketers, and researchers who lack programming skills.

Why you should use it: Data Scraping Studio is a free web scraping tool to harvest data from web pages, HTML, XML, and PDF. The desktop client is currently available for Windows only.

14. Easy Web Extract

Who is this for: Businesses with limited data needs, marketers, and researchers who lack programming skills.

Why you should use it: Easy Web Extract is a visual web scraping tool for business purposes. It can extract the content (text, URL, image, files) from web pages and transform results into multiple formats.

15. FMiner

Who is this for: Data analysts, marketers, and researchers who lack programming skills.

Why you should use it: FMiner is web scraping software with a visual diagram designer that lets you build a project with a macro recorder, without coding. Its advanced features let you scrape dynamic websites that use Ajax and JavaScript.

16. Scrapy

Who is this for: Python developers with programming and scraping skills

Why you should use it: Scrapy is a framework for building web scrapers. What is great about it is that it is built on an asynchronous networking library, which lets it move on to the next task before the current one finishes.
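The benefit of asynchronous networking can be sketched without Scrapy itself. Scrapy is built on Twisted; the example below swaps in Python's standard `asyncio` module and simulates each download with a timed sleep, so `fake_fetch`, `crawl` and `timed_crawl` are illustrative names rather than Scrapy API. The point is only that several slow requests overlap instead of queueing one after another.

```python
import asyncio
import time


async def fake_fetch(url, delay):
    """Stand-in for a network request: waits `delay` seconds, then returns."""
    await asyncio.sleep(delay)
    return f"fetched {url}"


async def crawl(urls, delay):
    # gather() starts every fetch right away and waits for all of them,
    # so the event loop moves on to the next request while others are pending.
    return await asyncio.gather(*(fake_fetch(url, delay) for url in urls))


def timed_crawl(urls, delay=0.2):
    """Run the crawl and return (results, elapsed_seconds)."""
    start = time.perf_counter()
    results = asyncio.run(crawl(urls, delay))
    return results, time.perf_counter() - start


if __name__ == "__main__":
    pages = [f"https://example.com/page/{n}" for n in range(1, 6)]
    results, elapsed = timed_crawl(pages)
    print(results)
    print(f"{len(pages)} simulated requests took {elapsed:.2f}s")
```

Fetched one at a time, five requests of 0.2 seconds each would take about a second; run concurrently, the total stays close to the duration of a single request.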

17. Helium Scraper

Who is this for: Data analysts, Marketers, and researchers who lack programming skills.

Why you should use it: Helium Scraper is a visual web data scraping tool that works pretty well especially on small elements on the website. It has a user-friendly point-and-click interface which makes it easier to use.

18.

Who is this for: People who need scalable data without coding.

Why you should use it: It allows scraped data to be stored on a local drive that you authorize. You can build a scraper using their Web Scraping Language (WSL), which is easy to learn and requires no coding. It is worth a try if you are looking for a security-conscious web scraping tool.

19. ScraperWiki

Who is this for: A Python and R data analysis environment. Ideal for economists, statisticians and data managers who are new to coding.

Why you should use it: ScraperWiki consists of 2 parts. One is QuickCode which is designed for economists, statisticians and data managers with knowledge of Python and R language. The second part is The Sensible Code Company which provides web data service to turn messy information into structured data.

20. Scrapinghub (now Zyte)

Who is this for: Python/web scraping developers

Why you should use it: Scrapinghub is a cloud-based web platform. It has four different types of tools: Scrapy Cloud, Portia, Crawlera, and Splash. Scrapinghub also offers a collection of IP addresses covering more than 50 countries, which is a solution for IP banning problems.

21. Screen-Scraper

Who is this for: Businesses in the auto, medical, financial and e-commerce industries.

Why you should use it: Screen Scraper is more convenient and basic compared to other web scraping tools like Octoparse. However, it has a steep learning curve for people without web scraping experience.

22.

Who is this for: Marketers and salespeople.

Why you should use it: This is a web scraping tool that helps salespeople gather data from professional networking sites such as LinkedIn, AngelList, and Viadeo.

23. ScrapeHero

Who is this for: Investors, Hedge Funds, Market Analysts

Why you should use it: As an API provider, ScrapeHero enables you to turn websites into data. It provides customized web data services for businesses and enterprises.

24. UiPath

Who is this for: Businesses of all sizes.

Why you should use it: UiPath is robotic process automation software that can be used for free web scraping. It allows users to create, deploy and administer automation in business processes. It is a great option for business users since it helps you create rules for data management.

25. Web Content Extractor

Why you should use it: Web Content Extractor is an easy-to-use web scraping tool for individuals and enterprises. You can go to their website and try its 14-day free trial.

26. WebHarvy

Why you should use it: WebHarvy is a point-and-click web scraping tool. It’s designed for non-programmers. They provide helpful web scraping tutorials for beginners. However, the extractor doesn’t allow you to schedule your scraping projects.

27. Web

Why you should use it: Web Scraper is a Chrome browser extension built for scraping data from websites. It’s a free web scraping tool for scraping dynamic web pages.

28. Web Sundew

Who is this for: Enterprises, marketers, and researchers.

Why you should use it: WebSundew is a visual scraping tool that works for structured web data scraping. The Enterprise edition allows you to run the scraping projects at a remote server and publish collected data through FTP.

29. Winautomation

Who is this for: Developers, business operation leaders, IT professionals

Why you should use it: Winautomation is a Windows web scraping tool that enables you to automate desktop and web-based tasks.

30. Web Robots

Why you should use it: Web Robots is a cloud-based web scraping platform for scraping dynamic, JavaScript-heavy websites. It has a web browser extension as well as desktop software, making it easy to scrape data from websites.

Closing Thoughts

Extracting data from websites with web scraping tools saves time, especially for those who don’t have sufficient coding knowledge. There are many factors you should consider when choosing a tool for your web scraping, such as ease of use, API integration, cloud-based extraction, large-scale scraping, scheduling projects, etc. Web scraping software like Octoparse not only provides all the features I just mentioned but also provides data service for teams of all sizes – from start-ups to large enterprises. You can contact us for more information on web scraping.

Good or Evil? What Web Scraping Bots Mean for Your Site – Imperva

The internet is crawling with bots. A bot is a software program that runs automated tasks over the internet, typically performing simple, repetitive tasks at speeds that are unattainable by, or undesirable for, humans. They are responsible for many small jobs that we take for granted such as search engine crawling, website health monitoring, fetching web content, measuring site speed and powering APIs. They can also be used to automate security auditing by scanning your network and websites to find vulnerabilities and help remediate them.

According to our 2015 Bot Traffic Report, almost half of all web traffic is bots, and two thirds of bot traffic we’ve analyzed is malicious. One of the ways that bots can harm businesses is by engaging in web scraping. We work with customers often on this issue and wanted to share what we’ve learned. This post discusses what web scraping is, how it works, and why it’s a problem for website owners.

What is scraping?

Web scraping is the process of automatically collecting information from the web. The most common type of scraping is site scraping, which aims to copy or steal web content for use elsewhere. This repurposing of content may or may not be approved by the website owner.

Typically, bots do this by crawling a website, accessing the source code of the website and then parsing it to extract the key pieces of data they want. After obtaining content, they typically post it elsewhere on the internet.

A more advanced type of scraping is database scraping. Conceptually this is similar to site scraping except that hackers will create a bot which interacts with a target site’s application to retrieve data from its database. An example of database scraping is when a bot targets an insurance website to receive quotes on coverage. The bot will try all possible combinations in the web application to obtain quotes and pricing for all scenarios.

In this example, the bot tells the application it is a 25-year-old male looking for a quote for a Honda, then for a Toyota, then a Ferrari. Each time the bot gets a different result back from the application. Given enough tries, it is possible to obtain entire datasets. Clearly with the number of permutations available in this scenario, a bot would be preferable to a human.
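To see why the number of permutations quickly outgrows what a human could try by hand, here is a small counting sketch. The form fields and their value ranges are invented for illustration (a real quote form would have many more), and the code only enumerates combinations locally; it does not contact any website.

```python
from itertools import product

# Hypothetical quote-form fields and the values each one can take.
FORM_FIELDS = {
    "age": range(18, 81),
    "gender": ["male", "female"],
    "make": ["Honda", "Toyota", "Ferrari"],
}


def count_combinations(fields):
    """Number of distinct form submissions needed to cover every scenario."""
    total = 1
    for values in fields.values():
        total *= len(values)
    return total


def first_combinations(fields, limit):
    """Return the first `limit` combinations as dicts, in enumeration order."""
    names = list(fields)
    combos = product(*(fields[name] for name in names))
    return [dict(zip(names, combo)) for _, combo in zip(range(limit), combos)]


if __name__ == "__main__":
    print(count_combinations(FORM_FIELDS), "combinations in total")
    for combo in first_combinations(FORM_FIELDS, 3):
        print(combo)
```

Even with only three fields the count runs into the hundreds, and every extra field multiplies it again.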

Database scraping can be used to steal intellectual property, price lists, customer lists, insurance pricing and other datasets that would require an effort prohibitively tedious for humans, but perfectly within the range of what bots routinely do.

Consider the case of a rental car agency. If a company created a bot that regularly checked its competitor’s prices and slightly undercut them at every price point, it would have a competitive advantage. This lower price would appear in all aggregator sites that compare both companies, and would likely result in more car rental conversions and higher search engine rankings.

To deal with the threat that scraping poses to your business, it’s advisable to employ a solution that adequately detects, identifies and mitigates bots.

Not all web scraping is bad

Scraping isn’t always malicious. There are many cases where data owners want to propagate data to as many people as possible. For example, many government websites provide data for the general public. This data is frequently available over APIs, but because of the scale of work required to achieve this, scrapers must sometimes be employed to gather that data.

Another example of legitimate scraping – which is often powered by bots – includes aggregation websites such as travel sites, hotel booking portals and concert ticket websites. Bots that distribute content from these sites obtain data through an API or by scraping, and tend to drive traffic to the data owners’ websites. In this case bots may function as a critical part of their business model.

Are bots legal? According to Eric Goldman, a professor of law at Santa Clara University School of Law, who writes about internet law,

Although scraping is ubiquitous, it’s not clearly legal. A variety of laws may apply to unauthorized scraping, including contract, copyright and trespass to chattels laws. (“Trespass to chattels” protects against unauthorized use of someone’s personal property, such as computer servers). The fact that so many laws restrict scraping means it is legally dubious.

Since scraping bots may also harm your business as we mentioned, it’s important to create an ecosystem that is both bot-friendly and also able to block malicious automated clients. Website owners can significantly improve security of their website by blocking bad bots without excluding legitimate bots.

Four things you can do to detect and stop site scraping

Site scraping can be a powerful tool. In the right hands, it automates the gathering and dissemination of information. In the wrong hands, it can lead to theft of intellectual property or an unfair competitive edge.

Over the last two decades, bots have evolved from simple scripts with minimal capabilities to complex, intelligent programs that are sometimes able to convince websites and their security systems that they are humans.

We use the following process to classify automated clients and determine next steps.

You can use the following methods to classify and mitigate bots, including detecting scraping bots:

Use an analysis tool: You can identify and mitigate bots, including site scrapers, by using a static analysis tool that examines structural web requests and header information. By correlating that information with what a bot claims to be, you can determine its true identity and block as needed.

Employ a challenge-based approach: This approach is the next step in detecting a scraping bot. Use proactive web components to evaluate visitor behavior, such as whether the client supports cookies and JavaScript. You can also use scrambled imagery like CAPTCHA, which can block some attacks.

Take a behavioral approach: A behavioral approach to bot mitigation is the next step. Here you can look at the activity associated with a particular bot to determine if it is what it claims to be. Most bots link themselves to a parent program like JavaScript, Internet Explorer or Chrome. If the bot behaves differently from the parent program, you can use the anomaly to detect, block and mitigate the problems in the future.

Using robots.txt

You can use robots.txt to shield your site from scraping bots, but it may not be effective in the long run. robots.txt works by telling a bad bot that it’s not welcome. Since bad bots don’t adhere to rules, they will ignore any commands. In some situations, malicious bots will look inside robots.txt for hidden gems (private folders, admin pages) that the site owner is trying to hide from Google’s index, and exploit them.
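Assuming the file discussed above is robots.txt, the sketch below shows how a well-behaved crawler would consult it with Python's standard `urllib.robotparser` module. The rules, the bot name `ExampleBot` and the helper `is_allowed` are made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt contents for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /private/
"""


def is_allowed(robots_txt, user_agent, url):
    """Return True if the rules permit `user_agent` to fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for path in ("/products", "/admin/login"):
        url = "https://example.com" + path
        print(path, "allowed:", is_allowed(ROBOTS_TXT, "ExampleBot", url))
```

The check is purely voluntary: nothing forces a client to run it, and the file itself is publicly readable, which is why listing sensitive paths in it can backfire.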

So it’s more important than ever that your bot defense solution can fully assess the impact of a specific bot before deciding whether or not to allow it to access your website. To see if your current solution is adequate, ask these questions: Does this automated client add value to your business or subtract from it? Is it driving traffic toward your website, or away from it? Answering these questions will help you determine which course to take to build bot detection and mitigation into your security systems.
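The first three methods listed above (static analysis, a challenge, and behavioral checks) can be sketched in a few lines each. This is a toy illustration under stated assumptions, not how any commercial product works: the header rules, the cookie-based challenge, the secret key and the rate threshold are all hypothetical, and real bots can defeat each check on its own.

```python
import hashlib
import hmac
from collections import defaultdict, deque

SECRET_KEY = b"replace-with-a-random-secret"  # hypothetical server-side secret
BROWSER_HEADERS = ("accept", "accept-language", "accept-encoding")


def header_anomalies(headers):
    """Static check: ways the headers contradict a browser-like User-Agent."""
    lowered = {name.lower(): value for name, value in headers.items()}
    user_agent = lowered.get("user-agent", "")
    if not user_agent:
        return ["missing User-Agent"]
    if "mozilla/" not in user_agent.lower():
        return []  # client does not claim to be a browser
    return [f"browser User-Agent without {name}"
            for name in BROWSER_HEADERS if name not in lowered]


def challenge_token(client_id):
    """Challenge: value the server sets as a cookie on the first response."""
    return hmac.new(SECRET_KEY, client_id.encode(), hashlib.sha256).hexdigest()


def passed_challenge(client_id, cookie_value):
    """True only if the client sent back the cookie it was given."""
    if cookie_value is None:
        return False  # client ignored the cookie
    expected = challenge_token(client_id).encode()
    return hmac.compare_digest(expected, cookie_value.encode())


class RateMonitor:
    """Behavioral check: flags clients that exceed a request rate."""

    def __init__(self, max_requests, window_seconds):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits = defaultdict(deque)

    def record(self, client_id, timestamp):
        """Record one request; return True if the client is over the limit."""
        hits = self._hits[client_id]
        hits.append(timestamp)
        while hits and timestamp - hits[0] > self.window_seconds:
            hits.popleft()  # forget requests outside the sliding window
        return len(hits) > self.max_requests
```

Each function yields one signal. Weighing those signals against the value a client brings is the judgment call the questions above are meant to guide.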


Frequently Asked Questions about web scraper extension firefox

Is Web scraper extension free?

Web Scraper is a Chrome browser extension built for scraping data from websites. It’s a free web scraping tool for scraping dynamic web pages. (Aug 3, 2021)

How do I make a web scraper extension?

Site scraping can be a powerful tool. In the right hands, it automates the gathering and dissemination of information. In the wrong hands, it can lead to theft of intellectual property or an unfair competitive edge. (Apr 18, 2016)

Are web scrapers bad?

Top 8 Web Scraping Tools: ParseHub, Scrapy, OctoParse, Scraper API, Mozenda, Webhose.io, Content Grabber, Common Crawl. (Feb 6, 2021)