Web Scrape Images Python

Image Scraping with Python – Towards Data Science

WEB SCRAPING WITH PYTHON

A code-along guide to learn how to download images from Google with Python!

To train a model, you need images. You can most certainly download them by hand, possibly even in batches somewhere, but I think there is a much more enjoyable way. Let's use Python and some web scraping techniques to download images.

Update 2 (Feb 25, 2020): One of the problems with scraping webpages is that the target elements depend on a selector of some sort. We use CSS selectors to get the relevant elements from the page. Google seems to have changed its site layout at some point, which made it necessary to update the relevant selectors. The provided script should be working.

Since writing this article on image scraping, I have published an article on building an image-recognizing convolutional neural network. If you want to put the scraped images to good use, check out the following article!

Contents:

- Scraping static pages
- Scraping interactive pages
- Scraping images from Google
- Afterword on legality

You will need a Python environment (I suggest Jupyter Notebook). If you haven't set this up, don't worry: it is effortless and takes less than 10 minutes.

Scraping static pages (i.e., pages that don't use JavaScript to create a high degree of interaction on the page) is extremely simple. A static webpage is pretty much just a large file written in a markup language that defines how the content should be presented to the user. You can very quickly get the raw content without the markup being applied. Say that we want to get the following table from this Wikipedia page:

[Screenshot: Wikipedia table of country codes and their corresponding country names]

We could do this by using an essential Python library called requests to download the static page content. As you can see, this is not very useful.
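The article uses requests for this download step. As a dependency-free sketch of the same idea, the standard library's urllib.request can also fetch the raw, unparsed HTML. The function name fetch_html, the User-Agent string and the timeout are assumptions for illustration, not part of the original code.

```python
from urllib.request import Request, urlopen


def fetch_html(url: str, timeout: float = 10.0) -> str:
    """Download a page and return its raw, unparsed HTML as text."""
    # Some servers reject clients without a browser-like User-Agent header;
    # this value is an arbitrary placeholder.
    request = Request(url, headers={"User-Agent": "Mozilla/5.0 (image-scraper sketch)"})
    with urlopen(request, timeout=timeout) as response:
        # Use the charset advertised by the server, falling back to UTF-8.
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")
```

Calling fetch_html("https://example.com/") would return the whole page source as one string, markup and all, which is exactly the "noise" the next step has to filter out.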
We don't want all that noise; instead, we would like to extract only specific elements of the page (the table, to be precise). Cases like this are where Beautiful Soup comes in extremely handy.

Beautiful Soup allows us to navigate, search, or modify the parse tree easily. After running the raw content through the appropriate parser, we get a lovely clean parse tree. In this tree, we can search for an element of type "table" with the class "wikitable sortable". You can find the element type and class by right-clicking the table and choosing Inspect to see the source. We then loop through that table and extract the data row by row, ultimately getting this result:

[Figure: parsed table from the Wikipedia page]

Neat trick: pandas has a built-in read_html method that becomes available after installing lxml (a powerful XML and HTML parser) with pip install lxml. read_html allows you to do the following:

[Figure: the second result from read_html]

As you can see, we take res[2], because pd.read_html() dumps everything it finds that even loosely resembles a table into an individual DataFrame. You will have to check which of the resulting DataFrames contains the desired data. It is worth giving read_html a try for nicely structured tables.

However, most modern web pages are quite interactive. The concept of a "single-page application" means that the web page itself changes without the user having to reload it or being redirected from page to page all the time. Because this happens only after specific user interactions, there are few options when it comes to scraping the data (as those actions do have to take place). Sometimes the user action might trigger a call to an exposed backend API. In that case, it might be possible to access the API directly and fetch the resulting data without going through the unnecessary steps in between.
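Returning briefly to the static Wikipedia example: a minimal sketch of the row-by-row table extraction with Beautiful Soup. It assumes the table carries both the wikitable and sortable classes (select_one matches them with a CSS selector), uses the built-in html.parser so lxml is not required, and runs on a small inline HTML string instead of the real page. extract_table is a hypothetical helper name.

```python
from __future__ import annotations

from bs4 import BeautifulSoup


def extract_table(html: str, selector: str = "table.wikitable.sortable") -> list[list[str]]:
    """Return the first table matching `selector` as a list of rows of cell text."""
    soup = BeautifulSoup(html, "html.parser")  # built-in parser, no lxml needed
    table = soup.select_one(selector)
    if table is None:
        return []
    rows = []
    for tr in table.find_all("tr"):
        # Header (<th>) and data (<td>) cells are both kept, in document order.
        cells = [cell.get_text(strip=True) for cell in tr.find_all(["th", "td"])]
        if cells:
            rows.append(cells)
    return rows


sample = """
<table class="wikitable sortable">
  <tr><th>Code</th><th>Country</th></tr>
  <tr><td>AT</td><td>Austria</td></tr>
  <tr><td>DE</td><td>Germany</td></tr>
</table>
"""
print(extract_table(sample))
# [['Code', 'Country'], ['AT', 'Austria'], ['DE', 'Germany']]
```

The resulting list of rows can be handed straight to pandas.DataFrame if you prefer a table object over nested lists.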
Most of the time, however, you will have to go through the steps of clicking buttons, scrolling pages, waiting for loads and all of that … or at least you have to make the webpage think you are doing all of that. Selenium to the rescue!

Selenium

Selenium can be used to automate web browser interaction with Python (and other languages). In layman's terms, Selenium pretends to be a real user: it opens the browser, "moves" the cursor around and clicks buttons if you tell it to do so. The initial idea behind Selenium, as far as I know, is automated testing. However, Selenium is equally powerful when it comes to automating repetitive web-based tasks.

Let's look at an example to illustrate the usage of Selenium. Unfortunately, a little bit of preparation is required beforehand. I will outline the installation and usage of Selenium with Google Chrome. In case you want to use another browser (e.g., a headless one), you will have to download the respective WebDriver. You can find more information here.

1. Install Google Chrome (skip if it's already installed).
2. Identify your Chrome version, typically found by clicking About Google Chrome. I currently have version 77.0.3865.90 (my main version is thus 77, the number before the first dot).
3. Download the corresponding ChromeDriver for your main version from here and put the executable into an accessible location (I use Desktop/Scraping).
4. Install the Python Selenium package via pip install selenium.

Starting a WebDriver

Run the following snippet (for ease of demonstration, do it in a Jupyter Notebook) and see how a ghostly browser opens:

```python
from selenium import webdriver

# This is the path I use
# DRIVER_PATH = '.../Desktop/Scraping/chromedriver 2'
# Put the path for your ChromeDriver here
DRIVER_PATH = ...
```

Image Scraping with Python – GeeksforGeeks

Scraping is an essential skill for getting data from any website. In this article, we are going to see how to scrape images from websites using Python. For scraping images, we will try different approaches.

Method 1: Using BeautifulSoup and Requests

bs4: Beautiful Soup (bs4) is a Python library for pulling data out of HTML and XML files. This module does not come built-in with Python. To install it, type the following command in the terminal:

pip install beautifulsoup4

requests: Requests allows you to send HTTP/1.1 requests extremely easily. This module also does not come built-in with Python. To install it, type the following command in the terminal:

pip install requests

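Approach:

1. Import the modules.
2. Make a request to the URL.
3. Pass the response text into the BeautifulSoup() function.
4. Find all 'img' tags and print their 'src' attribute.

Implementation:

```python
import requests
from bs4 import BeautifulSoup


def getdata(url):
    r = requests.get(url)
    return r.text


# Placeholder URL: replace it with the page you want to scrape
htmldata = getdata("https://example.com/")
soup = BeautifulSoup(htmldata, 'html.parser')
for item in soup.find_all('img'):
    # .get() returns None instead of raising KeyError for <img> tags without src
    print(item.get('src'))
```

Method 2: Using urllib and BeautifulSoup

urllib: It is a Python module that allows you to access and interact with websites using their URL. It is part of the standard library, so it does not need to be installed.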

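Approach:

1. Import the modules.
2. Read the URL with urlopen().
3. Pass the response into the BeautifulSoup() function.
4. Find all 'img' tags and print their 'src' attribute.

Implementation:

```python
from urllib.request import urlopen
from bs4 import BeautifulSoup

# Placeholder URL: replace it with the page you want to scrape
htmldata = urlopen("https://example.com/")
soup = BeautifulSoup(htmldata, 'html.parser')
images = soup.find_all('img')
for item in images:
    print(item.get('src'))
```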
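Both methods print the raw src values, which are often relative paths (such as /logo.png) or inline data: URIs rather than downloadable links. A minimal sketch, assuming you want absolute URLs: urllib.parse.urljoin resolves each src against the page URL. The function name image_urls and the choice to skip data: URIs are assumptions for illustration.

```python
from __future__ import annotations

from urllib.parse import urljoin

from bs4 import BeautifulSoup


def image_urls(html: str, base_url: str) -> list[str]:
    """Collect absolute image URLs from every <img> tag in `html`."""
    soup = BeautifulSoup(html, "html.parser")
    urls = []
    for img in soup.find_all("img"):
        src = img.get("src")  # None for <img> tags without a src attribute
        if not src or src.startswith("data:"):
            continue  # skip missing sources and inline base64 images
        urls.append(urljoin(base_url, src))  # resolve relative paths against the page
    return urls
```

For a page at https://example.com/blog/, a src of /a.png becomes https://example.com/a.png and c.gif becomes https://example.com/blog/c.gif, while absolute URLs pass through unchanged.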

Scraping Images with Python – Rubik’s Code

The process of building machine learning, deep learning or AI applications has several steps. One of them is analyzing the data and finding which parts of it are usable and which are not. We also need to pick the machine learning algorithms or neural network architectures needed to solve the problem. We might even choose to use reinforcement learning or transfer learning. However, clients often don't have data that could solve their problem. More often than not, it is our job to get data from the web that is going to be used by a machine learning algorithm or neural network.


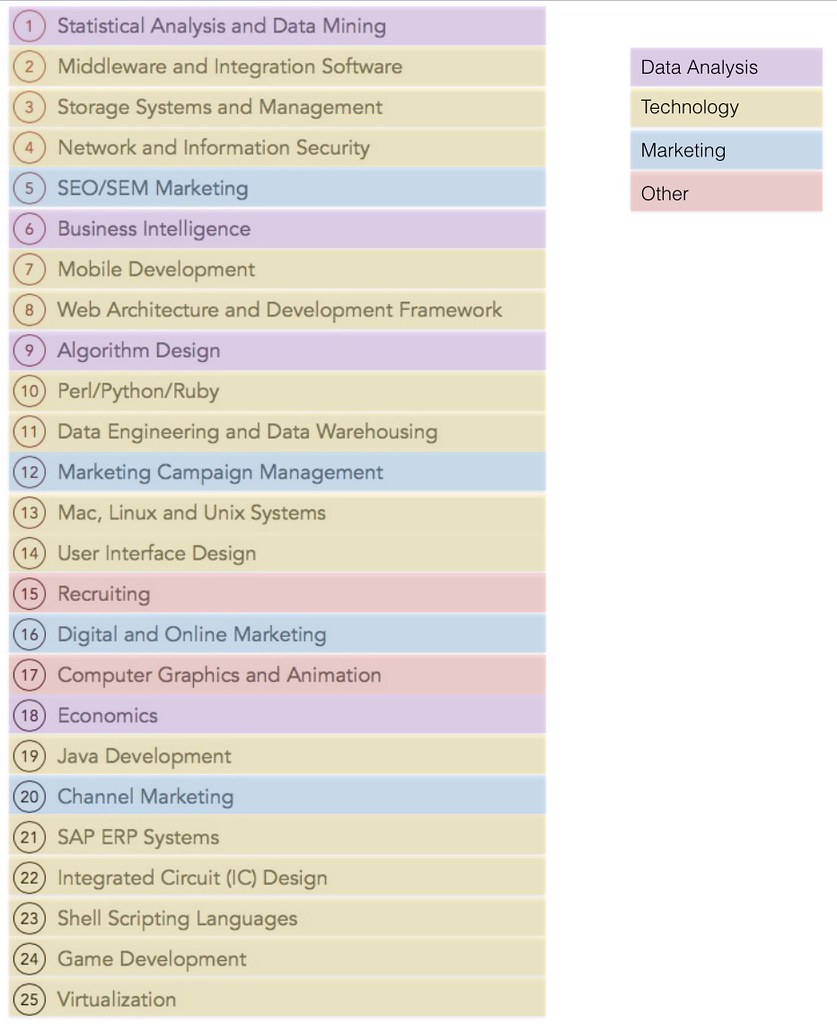

This is usually the rule when we work on computer vision tasks. Clients rely on your ability to gather the data that is going to feed your VGG, ResNet, or custom convolutional neural network. So, in this article we focus on the step that comes before data analysis and all the fancy algorithms: data scraping, or to be more precise, image scraping. We are going to show three ways to get images from a website using Python. In this article, we cover several topics:

1. Prerequisites
2. Scraping images with Beautiful Soup
3. Scraping images with Scrapy
4. Scraping images from Google with Selenium

1. Prerequisites

In general, there are multiple ways to download images from a web page. There are even multiple Python packages and tools that can help you with this task. In this article, we explore three of those packages: Beautiful Soup, Scrapy and Selenium. They are all good libraries for pulling data out of HTML.

The first thing we need to do is to install them. To install Beautiful Soup run this command:

pip install beautifulsoup4

To install Scrapy, run this command:

pip install scrapy

Also, make sure that you have installed Selenium:

pip install selenium

In order for Selenium to work, you need to install Google Chrome and the corresponding ChromeDriver. To do so, follow these steps:

1. Install Google Chrome.
2. Detect the version of the installed Chrome. You can do so by going to About Google Chrome.
3. Finally, download ChromeDriver for your version from here.

Scrapy's image pipeline and the Google scraper later in this article both rely on Pillow, so make sure that this library is installed as well:

pip install Pillow

Beautiful Soup and Scrapy are both great tools, so let's see what problem we need to solve. In this example, we want to download the featured image of every post on our blog page. If we inspect that page, we can see that the image URLs are stored in `<img>` HTML tags, which are nested within `<a>` elements with the class entry-featured-image-url.

Now that we know a little bit more about our task, let's implement a solution first with Beautiful Soup and then with Scrapy. Finally, we will see how we can download images from Google using Selenium.

2. Scraping images with Beautiful Soup

This library is pretty intuitive to use. However, we need to import other libraries in order to finish this task:

```python
from bs4 import BeautifulSoup
import requests
import urllib.request
import shutil
```

These libraries are used to send web requests (requests and urllib.request) and to store data in files (shutil). Now, we can send a request to the blog page, get the response and parse it with Beautiful Soup:

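```python
# Placeholder: URL of the blog page to scrape
url = 'https://example.com/blog/'

response = requests.get(url)
soup = BeautifulSoup(response.text, "html.parser")
aas = soup.find_all("a", class_='entry-featured-image-url')
```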

Using the find_all method, we extracted all `<a>` elements from the HTML DOM that have the class entry-featured-image-url. They are all stored in the aas variable; if you print it, you will see a list of those `<a>` elements, each wrapping the `<img>` tag of a featured image.

Now we need to use that information to get the data from each individual link, and we also need to store it in files. Let's first extract the URL and the image name for each image from the aas variable:

```python
image_info = []

for a in aas:
    image_tag = a.findChildren("img")
    # Store (image URL, image title) for every featured image
    image_info.append((image_tag[0]["src"], image_tag[0]["alt"]))
```

We call the findChildren method on each element in the aas list and append the src and alt attributes of its `<img>` child to the image_info list. Here is the result:

```
[('...', 'Create Deepfakes in 5 Minutes with First Order Model Method'),
 ('...', 'Test Driven Development (TDD) with Python'),
 ('...', 'Machine Learning with – Sentiment Analysis'),
 ('...', 'Machine Learning with – NLP with BERT'),
 ('...', 'Machine Learning With – Evaluation Metrics'),
 ('...', 'Machine Learning with – Object detection with YOLO'),
 ('...', 'The Rising Value of Big Data in Application Monitoring'),
 ('...', 'Transfer Learning and Image Classification with '),
 ('...', 'Machine Learning with – Recommendation Systems')]
```

Great, we have the links and image names; all we need to do is download the data. For that purpose, we build the download_image function:

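```python
def download_image(image):
    # image is a (url, title) tuple from image_info
    response = requests.get(image[0], stream=True)
    # Keep only the alphanumeric characters of the title for the file name
    realname = ''.join(e for e in image[1] if e.isalnum())

    # The ./images_bs folder must already exist
    with open("./images_bs/{}.jpg".format(realname), 'wb') as file:
        # Decode gzip/deflate transfer encoding while copying the raw stream
        response.raw.decode_content = True
        shutil.copyfileobj(response.raw, file)

    del response
```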

It is a simple function. First, we send a request to the URL that we extracted from the HTML. Then, based on the title, we create the file name, removing all spaces and special characters in the process. Finally, we create a file with that name and copy all the data from the response into it using shutil. In the end, we call the function for each image in the list:

```python
for image in image_info:
    download_image(image)
```

The results are saved in the ./images_bs folder.

Of course, this solution can be further generalized and implemented in the form of a class. Something like this:

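```python
import os
import shutil

import requests
from bs4 import BeautifulSoup


class BeautifulScrapper():
    def __init__(self, url: str, classid: str, folder: str):
        self.url = url
        self.classid = classid
        self.folder = folder

    def _get_info(self):
        response = requests.get(self.url)
        soup = BeautifulSoup(response.text, "html.parser")
        aas = soup.find_all("a", class_=self.classid)

        image_info = []
        for a in aas:
            image_tag = a.findChildren("img")
            image_info.append((image_tag[0]["src"], image_tag[0]["alt"]))
        return image_info

    def _download_image(self, image_info):
        response = requests.get(image_info[0], stream=True)
        realname = ''.join(e for e in image_info[1] if e.isalnum())

        with open(os.path.join(self.folder, realname + ".jpg"), 'wb') as file:
            response.raw.decode_content = True
            shutil.copyfileobj(response.raw, file)

    def scrape_images(self):
        image_info = self._get_info()
        for image in image_info:
            self._download_image(image)
```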

It is pretty much the same thing, just now you can create multiple objects of this class with different URLs and configurations.
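The file-naming step keeps only the alphanumeric characters of the alt text, so two posts with the same title overwrite each other, and a title made only of symbols yields an empty name. A minimal sketch of a more defensive variant; safe_filename, the 8-character URL hash suffix and the .jpg default are assumptions, not part of the original code.

```python
import hashlib
from pathlib import Path


def safe_filename(title: str, url: str, folder: str = "./images_bs", ext: str = ".jpg") -> Path:
    """Build a path for an image, keeping only alphanumeric title characters."""
    stem = "".join(ch for ch in title if ch.isalnum())  # same filter as download_image
    # Short, stable suffix derived from the image URL
    digest = hashlib.sha1(url.encode("utf-8")).hexdigest()[:8]
    if not stem:
        stem = digest  # the title had no usable characters
    path = Path(folder) / f"{stem}{ext}"
    if path.exists():
        # Name already taken: disambiguate with the URL hash instead of overwriting
        path = Path(folder) / f"{stem}_{digest}{ext}"
    return path
```

Inside download_image(image), you would then open safe_filename(image[1], image[0]) instead of the hand-built path.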

3. Scraping images with Scrapy

The other tool that we can use for downloading images is Scrapy. While Beautiful Soup is intuitive and very simple to use, you still need other libraries, and things can get messy if we are working on a bigger project. Scrapy is great for those situations. Once this library is installed, you can create a new Scrapy project with this command:

scrapy startproject name_of_project

This creates a project structure similar to the Django project structure. The interesting files are settings.py, items.py and the spider file inside the spiders folder.

The first thing we need to do is add a file or image pipeline in settings.py. In this example, we add the image pipeline. The location where the images are stored also needs to be added. That is why we add these two lines to the settings:

```python
ITEM_PIPELINES = {'scrapy.pipelines.images.ImagesPipeline': 1}
IMAGES_STORE = 'C:/images/scrapy'
```

Then we move on to items.py. Here we define the structure of the downloaded items. In this case, we use Scrapy for downloading images; however, it is a powerful tool for downloading other types of data as well. Here is what that looks like:

```python
import scrapy


class ImageItem(scrapy.Item):
    images = scrapy.Field()
    image_urls = scrapy.Field()
```

Here we defined the ImageItem class, which inherits the Item class from Scrapy. When we work with the image pipeline, two fields are mandatory: images and image_urls, and we define them as scrapy.Field(). It is important to notice that these fields must have exactly these names. Apart from that, note that image_urls needs to be a list and must contain absolute URLs. For this reason, we have to convert relative URLs into absolute URLs. Finally, we implement the crawler in the spider file:

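```python
import scrapy

from ..items import ImageItem


class ImgSpider(scrapy.Spider):
    name = "img_spider"
    # Placeholder: the blog page to crawl
    start_urls = ["https://example.com/blog/"]

    def parse(self, response):
        image = ImageItem()
        img_urls = []
        for img in response.css("img::attr(src)").extract():
            # The image pipeline needs absolute URLs
            img_urls.append(response.urljoin(img))
        image["image_urls"] = img_urls
        return image
```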

In this file, we create the ImgSpider class, which inherits the Spider class from Scrapy. This class is essentially used for crawling and downloading data. You can see that each Spider has a name. This name is used for running the process later on. The start_urls field defines which web pages are crawled. When we start the Spider, it sends requests to the pages defined in the start_urls list.

The response is processed in the parse method, which we override in the ImgSpider class. Effectively, this means that when we run this example, it sends a request to the blog page and then processes the response in the parse method. In this method, we create an instance of ImageItem. Then we use a CSS selector to extract the image URLs and store them in the img_urls list. Finally, we put everything from the img_urls list into the ImageItem object. Note that we don't need to put anything in the images field of the class; that is done by Scrapy. Let's run this crawler with this command:

scrapy crawl img_spider

We use the name defined within the class. The other way to run this crawler is to pass the spider file itself to the runspider command, i.e. scrapy runspider followed by the path to the spider file.
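Once an item passes through the image pipeline, Scrapy downloads every URL in image_urls and records the results in the images field. As far as I know, the default ImagesPipeline.file_path in current Scrapy 2.x releases names each file after the SHA1 hash of the image URL, saves it as JPEG and places it in a full/ subfolder of IMAGES_STORE. A small standard-library sketch that predicts that location; expected_image_path is a hypothetical helper, and you should compare its output with the path reported in the item's images field for your Scrapy version.

```python
import hashlib
from pathlib import PurePosixPath


def expected_image_path(image_url: str, images_store: str = "C:/images/scrapy") -> PurePosixPath:
    """Guess where ImagesPipeline saved `image_url`, assuming the default naming."""
    guid = hashlib.sha1(image_url.encode("utf-8")).hexdigest()
    # Assumed default layout: <IMAGES_STORE>/full/<sha1-of-url>.jpg
    return PurePosixPath(images_store) / "full" / f"{guid}.jpg"
```

Because the name depends only on the URL, crawling the same page twice maps each image to the same file instead of creating copies.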

4. Scraping images from Google with Selenium

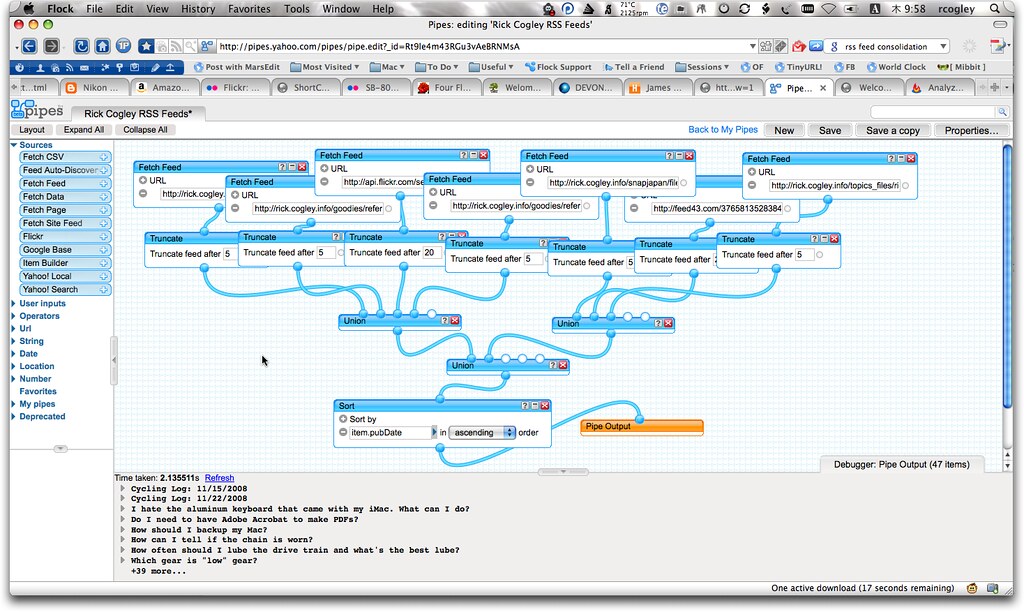

In both previous cases, we explored how to download images from one site. However, if we want to download a large number of images, performing a Google search is probably the best option. This process can be automated as well, using Selenium.

This tool is used for various types of automation; for example, it is good for test automation. In this tutorial, we use it to perform the necessary search in Google and download images. Note that for this to work, you have to install Google Chrome and use the corresponding ChromeDriver, as described in the first section of this article.

It is easy to use Selenium; however, first you need to import it:

```python
import selenium
from selenium import webdriver
```

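Once this is done, you need to define the path to ChromeDriver:

```python
from selenium.webdriver.chrome.service import Service

DRIVER_PATH = 'C:\\Users\\n.zivkovic\\Documents\\chromedriver_win32\\chromedriver'
# In Selenium 4, the driver location is passed through a Service object
wd = webdriver.Chrome(service=Service(DRIVER_PATH))
```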

Once you do this, Google Chrome opens automatically. From this moment on, we can control it through the wd variable. Let's go to google.com:

```python
wd.get('https://google.com')
```

Cool! Now, let's implement a class that performs a Google search and downloads images from the first page:

```python
import hashlib
import io
import os
import time
from urllib.parse import urlencode

import requests
from PIL import Image
from selenium.webdriver.common.by import By


class GoogleScraper():
    '''Downloads images from Google based on the query.
    webdriver - Selenium webdriver
    max_num_of_images - Maximum number of images that we want to download
    '''
    def __init__(self, webdriver, max_num_of_images: int):
        self.wd = webdriver
        self.max_num_of_images = max_num_of_images

    def _scroll_to_the_end(self):
        self.wd.execute_script("window.scrollTo(0, document.body.scrollHeight);")
        time.sleep(1)

    def _build_query(self, query: str):
        # Google Images search URL; tbm=isch selects image results
        params = {"tbm": "isch", "q": query, "oq": query, "gs_l": "img"}
        return "https://www.google.com/search?" + urlencode(params)

    def _get_info(self, query: str):
        image_urls = set()

        self.wd.get(self._build_query(query))
        self._scroll_to_the_end()

        # img.Q4LuWd is the Google thumbnail selector (Google changes these class names over time)
        thumbnails = self.wd.find_elements(By.CSS_SELECTOR, "img.Q4LuWd")

        print(f"Found {len(thumbnails)} images...")
        print("Getting the links...")

        for img in thumbnails[:self.max_num_of_images]:
            # We need to click every thumbnail so we can get the full image.
            try:
                img.click()
            except Exception:
                print('ERROR: Cannot click on the image.')
                continue

            time.sleep(0.3)  # give the preview panel time to load
            images = self.wd.find_elements(By.CSS_SELECTOR, 'img.n3VNCb')

            for image in images:
                src = image.get_attribute('src')
                # Keep only real http(s) links, not inline base64 previews
                if src and src.startswith('http'):
                    image_urls.add(src)

        return image_urls

    def _download_image(self, folder_path: str, url: str):
        try:
            image_content = requests.get(url).content
        except Exception as e:
            print(f"ERROR: Could not download {url} - {e}")
            return

        try:
            image_file = io.BytesIO(image_content)
            image = Image.open(image_file).convert('RGB')
            # Name the file after a hash of its content
            file = os.path.join(folder_path, hashlib.sha1(image_content).hexdigest()[:10] + '.jpg')
            with open(file, 'wb') as f:
                image.save(f, "JPEG", quality=85)
            print(f"SUCCESS: saved {url} - as {file}")
        except Exception as e:
            print(f"ERROR: Could not save {url} - {e}")

    def scrape_images(self, query: str, folder_path='./images'):
        folder = os.path.join(folder_path, '_'.join(query.lower().split(' ')))
        os.makedirs(folder, exist_ok=True)

        image_info = self._get_info(query)
        print("Downloading images...")

        for image in image_info:
            self._download_image(folder, image)
```

Ok, that is a lot of code. Let’s investigate it piece by piece. We start from the constructor of the class:

Through the constructor of the class, we inject the webdriver. We also define the number of images from the first page that we want to download. Next, let's look at the only public method of this class, scrape_images().

This method defines the flow and uses the other methods of this class. Note that one of the parameters is the query term, which is used in the Google search. The folder where the images are stored is also passed to this method. The flow goes like this:

1. We create a folder for the specific search.
2. We get all the image links using the _get_info method.
3. We download the images using the _download_image method.
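The first step of that flow turns the query into a folder name, and _get_info opens a Google Images URL built from the same query. A standalone sketch of both steps, assuming you want to check them without launching a browser: urlencode escapes spaces and characters such as & that would otherwise break the URL, and tbm=isch is the parameter that selects image results. Both function names are hypothetical, and Google may change how it treats these parameters at any time.

```python
import os
from urllib.parse import urlencode


def build_image_search_url(query: str) -> str:
    """Google Images search URL for `query` (tbm=isch requests image results)."""
    return "https://www.google.com/search?" + urlencode({"tbm": "isch", "q": query})


def folder_for_query(query: str, folder_path: str = "./images") -> str:
    """Lowercase the query and join its words with '_', as scrape_images() does."""
    # split() with no argument also collapses repeated spaces, unlike split(' ')
    return os.path.join(folder_path, "_".join(query.lower().split()))
```

For the query "red cars & trucks", the first function yields https://www.google.com/search?tbm=isch&q=red+cars+%26+trucks, and the second yields ./images/red_cars_&_trucks.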

Let’s explore these private methods a little bit more.

The purpose of the _get_info method is to collect the image links. Note that after we perform the search in Google, we look up the thumbnails using the appropriate CSS selector. This is very similar to what we did with Beautiful Soup and Scrapy. Another thing to pay attention to is that we need to click on each thumbnail to get the full-resolution image. Then we look up elements by CSS selector once again, and once that is done, the correct link is obtained. The second private method is _download_image.

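This method is pretty straightforward. If we want to use GoogleScraper, here is how we can do so:

```python
from selenium import webdriver
from selenium.webdriver.chrome.service import Service

DRIVER_PATH = 'path_to_driver'
wd = webdriver.Chrome(service=Service(DRIVER_PATH))

gs = GoogleScraper(wd, 10)
gs.scrape_images('music')
```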

We download images for the search term "music" from the first 10 thumbnails; they end up in the ./images/music folder.
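_download_image names each file after the first 10 hexadecimal digits of the SHA1 hash of the downloaded bytes, so a picture that appears under two different URLs maps to the same filename and is simply written over itself. A minimal sketch, assuming you would rather detect such duplicates before the Pillow re-encoding step; dedupe_by_content is a hypothetical helper that takes already-downloaded bytes keyed by URL.

```python
from __future__ import annotations

import hashlib


def dedupe_by_content(downloads: dict[str, bytes]) -> dict[str, str]:
    """Return URL -> filename for the first URL of each distinct image payload.

    Uses the same sha1(content)[:10] + '.jpg' naming as _download_image.
    """
    seen: set[str] = set()
    kept: dict[str, str] = {}
    for url, content in downloads.items():
        name = hashlib.sha1(content).hexdigest()[:10] + ".jpg"
        if name in seen:
            continue  # identical bytes were already assigned this filename
        seen.add(name)
        kept[url] = name
    return kept
```

Comparing len(kept) with the number of URLs tells you how many of the scraped links were duplicates.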

In this article, we explored three tools for downloading images from the web. We saw examples of how this task can be performed and how these tools can be used with Python.

Thank you for reading!

Frequently Asked Questions about web scrape images python

How do you scrape an image from a website in Python?

In this article, we are going to see how to scrape images from websites using Python. For scraping images, we will try different approaches. Approach: import the module; make a request to the URL; pass the response into the BeautifulSoup() function; find all 'img' tags and read their 'src' attribute. (Sep 8, 2021)

Is Web scraping images Legal?

Web scraping and crawling aren’t illegal by themselves. … Web scraping started in a legal grey area where the use of bots to scrape a website was simply a nuisance. Not much could be done about the practice until in 2000 eBay filed a preliminary injunction against Bidder’s Edge.

Can BeautifulSoup scrape images?

Being efficient with BeautifulSoup means having a little bit of experience and/or understanding of HTML tags. But if you don’t, using Google to find out which tags you need in order to scrape the data you want is pretty easy. Since we want image data, we’ll use the img tag with BeautifulSoup.