Octoparse Linux

Use Octoparse on a non-Windows machine (Mac/Linux)

Octoparse currently runs on Windows. If you are using a non-Windows machine such as a Mac or a Linux computer, here are some ways to run Octoparse for data collection.

1. Use a virtual machine

A virtual machine is useful when you need to run apps that only work on another operating system. It lets you run two or more operating systems on one physical machine. Note, however, that the virtual machine shares your computer's memory with the host system, so if your computer is short on memory, the VM may run slowly.

If a virtual machine sounds like a good solution, here are some options to consider:

· Oracle VM VirtualBox – Free, with a wide selection of pre-built virtual machines you can download and use at no cost.

· Parallels Desktop 13 – A paid tool designed for running Windows on a Mac. Among its conveniences, Parallels can make Windows alerts appear in the Mac notification center and share a unified clipboard between the two systems.

· VMware Fusion – A paid tool from VMware, which offers a very comprehensive range of virtualization products. Similar to Parallels Desktop 13.

2. Use remote desktop connection

Remote Desktop Connection lets you control a Windows machine remotely from another PC or a Mac. Remote desktops are based on Microsoft terminal servers: you connect to one or more Windows servers and run the applications directly on them. A remote desktop is simpler to set up than a virtual machine. You can download the Microsoft Remote Desktop client for Mac from the Mac App Store.

Free Web Scrapers to Start Web Scraping | Octoparse

Web Scrapers Free to Try

· (free version cancelled)

· Parsehub

· Mozenda

· Content Grabber

· Octoparse

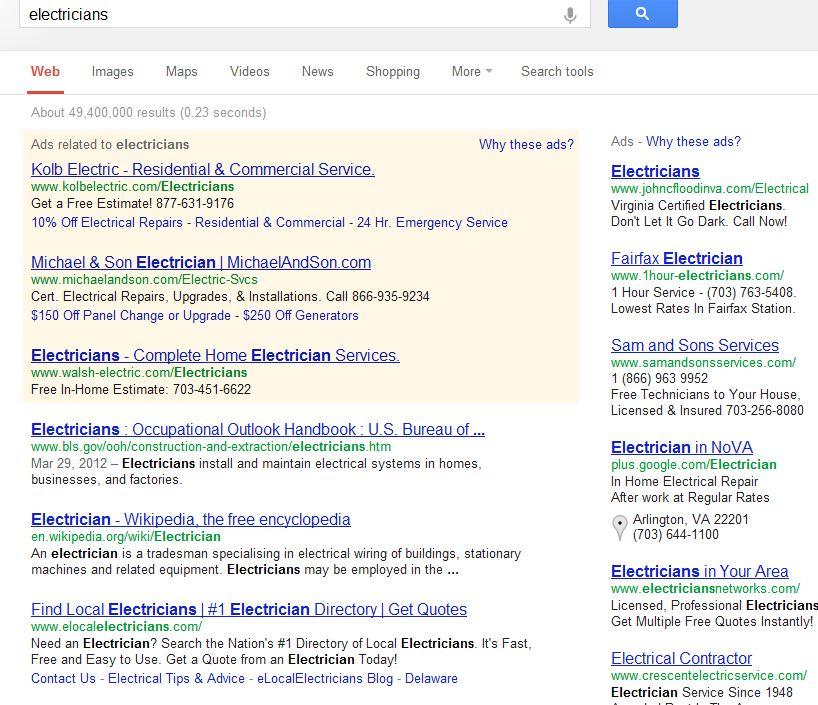

Imagine you want to search for something on Google and copy all the result links into an Excel file for later use. What would you do? Clicking, copying, and pasting every link by hand would drive you crazy. You may ask: “Is there a tool that can do all this work for me automatically?” Yes, there is: a web scraper!

A web scraper is a tool used for extracting data from websites. It can automatically gather or copy specific data from the web and put the data into a central local database or spreadsheet, for later retrieval or analysis.
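To make the idea concrete, here is a minimal sketch of what a scraper does under the hood, written in Python with only the standard library. It assumes the page's HTML has already been downloaded (for example with `urllib.request.urlopen`), collects every link on the page, and writes the results to a CSV file that Excel can open. The names `LinkCollector`, `extract_links`, and `write_csv` are hypothetical, and this is not how any of the tools below work internally; they hide these steps behind a point-and-click interface.

```python
from __future__ import annotations

import csv
from html.parser import HTMLParser
from typing import Iterable, TextIO
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collects (link text, absolute URL) pairs for every <a href="..."> on a page."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.links: list[tuple[str, str]] = []
        self._href: str | None = None  # URL of the <a> element currently open, if any
        self._text: list[str] = []     # text fragments seen inside that element

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                # Resolve relative links such as "/page" against the page URL.
                self._href = urljoin(self.base_url, href)
                self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            # Collapse runs of whitespace so the text fits neatly in one cell.
            text = " ".join("".join(self._text).split())
            self.links.append((text, self._href))
            self._href = None


def extract_links(html: str, base_url: str) -> list[tuple[str, str]]:
    """Returns the links found in `html`, in document order."""
    parser = LinkCollector(base_url)
    parser.feed(html)
    parser.close()
    return parser.links


def write_csv(rows: Iterable[tuple[str, str]], out: TextIO) -> None:
    """Writes a header row plus one row per link to any writable text stream."""
    writer = csv.writer(out)
    writer.writerow(["text", "url"])
    writer.writerows(rows)


# Usage, assuming `html` holds a page already downloaded from https://example.com/:
# with open("links.csv", "w", newline="", encoding="utf-8") as f:
#     write_csv(extract_links(html, "https://example.com/"), f)
```

For example, on a page containing `<a href="/a">First link</a>`, `extract_links(html, "https://example.com/")` returns `[("First link", "https://example.com/a")]`. Anchors without an `href` are skipped.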

Several free web scrapers let you build your own scraper without coding. This article introduces a few of them for you to choose from!

1.

is web-based software for web scraping. Using sophisticated machine learning algorithms, it extracts text, URLs, images, documents, and even screenshots from both list and detail pages, given just the URL you type in. Data can be accessed through APIs, XLSX/CSV files, Google Sheets, and more. You can schedule extractions using almost any combination of times, days, weeks, and months. Best of all, it can even give you a data report after extraction.

Despite all these powerful functions, has canceled its free version, and users can only get a 7-day free trial. It currently offers four paid versions with different limits on extractors, queries, and functions: Essential ($299/month), Professional ($1,999/year), Enterprise ($4,999/year), and Premium ($9,999/year).

2. Parsehub

Parsehub, a cloud-based desktop app for data mining, is another easy-to-use scraper with a graphical interface.

It works with interactive pages: it can search through forms, open dropdowns, log in to websites, click on maps, and handle sites with infinite scroll, tabs, and pop-ups. Its machine-learning relationship engine screens the page and works out the hierarchy of elements, so you see the data pulled in seconds. You can access the data via API, CSV/Excel, Google Sheets, or Tableau.

Parsehub is free to start, but the free plan limits extraction speed (200 pages in 40 minutes), pages per run (200 pages), and the number of projects (5 projects). If you need faster extraction or more pages, consider the Standard plan ($149/month) or the Professional plan ($499/month).

3. Mozenda

Mozenda, another web-based scraper, turns web data of any type into a structured format.

It automatically identifies lists and helps you build agents that collect precise data across many pages. Beyond web pages, Mozenda also lets you extract data from documents such as Excel, Word, and PDF files in the same way. It can publish results in CSV, TSV, XML, or JSON format to an existing database or directly to popular cloud platforms such as Amazon Web Services or Microsoft Azure® for rapid analytics and visualization.

Mozenda offers a 30-day free trial, after which you can choose from its flexible pricing plans: a Professional version ($100/month) and an Enterprise version ($450/month), each with different limits on processing credits, storage, and agents.

4. Content Grabber

Content Grabber, with a typical point-and-click user interface, can extract almost any content from almost any website and save it as structured data in a format of your choice, including Excel reports, XML, CSV, and most databases.

Designed with performance and scalability as top priorities, Content Grabber offers a range of browsers to maximize performance in every scenario, from a fully dynamic web browser to an ultra-fast, HTML5-parser-only browser. It tackles reliability head-on with strong support for debugging, error handling, and logging.

You can download a 15-day free trial for Windows with all the features of the Professional edition, limited to a maximum of 50 pages per agent. Monthly subscriptions cost $149 for the Professional edition and $299 for the Premium edition. Content Grabber also lets users buy a license outright to own the software perpetually.

5. Octoparse

Octoparse is a cloud-based web crawler that helps you extract web data without coding. Its user-friendly interface handles all sorts of websites, including those that rely on JavaScript, AJAX, or other dynamic content. Its advanced machine learning algorithm accurately locates the data the moment you click on it.

Octoparse offers a free plan, and free trials of its paid versions are also available. It supports XPath settings to locate web elements precisely and Regex settings to reformat extracted data. You can export the extracted data to Excel/CSV or to your own database, or access it via API. Octoparse's cloud platform provides important features such as scheduled extraction and automatic IP rotation.
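In Octoparse these XPath and Regex settings are configured through the interface, so no code is needed. For readers curious about what the two settings actually do, the sketch below performs the equivalent steps with Python's standard library: an XPath expression locates each product's name and price elements, and a regular expression reformats raw text such as "Price: $1,299.00" into the number 1299.0. The sample markup, the class names, and the function names are hypothetical. Python's `xml.etree.ElementTree` supports only a subset of XPath and needs well-formed markup, so treat this as an illustration of the concepts, not of how Octoparse works internally.

```python
from __future__ import annotations

import re
import xml.etree.ElementTree as ET

# Hypothetical, well-formed product listing. Real-world HTML is often not valid XML.
PAGE = """
<ul>
  <li class="product"><span class="name">Desk lamp</span><span class="price">Price: $1,299.00</span></li>
  <li class="product"><span class="name">Notebook</span><span class="price">Price: $4.50</span></li>
</ul>
"""

# Regex step: find a dollar amount such as "$1,299.00" and capture its digits.
PRICE_RE = re.compile(r"\$([\d,]+(?:\.\d+)?)")


def parse_price(raw: str) -> float | None:
    """Reformats text like 'Price: $1,299.00' into 1299.0, or None if no amount is found."""
    match = PRICE_RE.search(raw)
    if match is None:
        return None
    return float(match.group(1).replace(",", ""))


def extract_products(markup: str) -> list[dict]:
    root = ET.fromstring(markup.strip())
    products = []
    # XPath step: every <li class="product"> anywhere below the root element.
    for item in root.findall(".//li[@class='product']"):
        name = item.findtext("span[@class='name']", default="").strip()
        raw_price = item.findtext("span[@class='price']", default="")
        products.append({"name": name, "price": parse_price(raw_price)})
    return products
```

Running `extract_products(PAGE)` yields `[{"name": "Desk lamp", "price": 1299.0}, {"name": "Notebook", "price": 4.5}]`. Keeping the locating step (XPath) separate from the cleaning step (Regex) mirrors how the two settings are described above: one decides *where* the data is, the other decides *what shape* it ends up in.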

Conclusions

All of these web scrapers can meet a wide range of extraction needs, and some, like Octoparse, even run blogs that share news and data extraction case studies. When choosing one to stick with, though, weigh each tool's functions, limitations, and of course price against your own requirements. Fortunately, all of these products offer a free trial before you buy.

We hope these scrapers make web scraping no longer a problem for you!

Spanish-language article: Yes, There Is Such a Thing as a Free Web Scraper!

You can also read web scraping articles on the official website

Author: The Octoparse Team

Octoparse Alternatives and Similar Software | AlternativeTo

79 alternatives

Open-source task and test automation tool and Selenium IDE. The RPA software is a browser extension that can do desktop automation as well! Use it for web automation, form filling, screen scraping, and Robotic Process Automation (RPA).

Scrapy is a free and open-source web-crawling framework written in Python. Originally designed for web scraping, it can also be used to extract data using APIs or as a general-purpose web crawler.

ParseHub is a web scraping tool built to handle the modern web. You can extract data from anywhere. ParseHub works with single-page apps, multi-page apps, and just about any other modern web technology.

Portia is an open-source visual scraping tool that lets you scrape websites without any programming knowledge! Simply annotate the pages you’re interested in, and Portia will create a spider to extract data from similar pages.

is a web-based platform that puts the power of the machine-readable web in your hands. Using our tools you can create an API or crawl an entire website in a fraction of the time of traditional methods, no coding required.

A free, fully featured, and extensible tool for automating any web or desktop application. UiPath Studio Community is free for individual developers, small professional teams, education, and training purposes.

Apify is a web scraping and automation platform: it extracts data from websites, crawls lists of URLs, and automates workflows on the web. Turn any website into an API!

Diggernaut is a cloud-based service for web scraping, data extraction, and other ETL tasks. Schedule and run your scrapers in the cloud, or compile and run them on your PC.

A web-based crawler with real-time crawl feedback. Advanced, fast, and flexible SEO website crawler that can help identify technical or architectural issues with any site.

Want to build a SaaS? Or find new customers? Or supercharge your marketing? ScrapeHunt gives you the benefits of scraping without the headache of scraping. Get a scraped database in less than 60 seconds.

Frequently Asked Questions about Octoparse on Linux

Is Octoparse free?

Yes. Octoparse offers a free plan, and free trials of its paid versions are also available. It supports XPath settings to locate web elements precisely and Regex settings to reformat extracted data. (Jan 15, 2021)

Is Octoparse open source?

Vision RPA, which is both free and Open Source. Other great apps like Octoparse are Scrapy (Free, Open Source), ParseHub (Freemium), Portia (Free, Open Source) and import.io (Paid).

How do I install Octoparse?

Please follow these steps to install Octoparse:

1. Download the installer and unzip the downloaded file.

2. Double-click the setup.exe file.

3. Follow the installation instructions.

4. Log in with your Octoparse account (sign up first if you don’t have an account yet).