LinkedIn Web Scraping

Use Selenium & Python to scrape LinkedIn profiles

The legal battle of HiQ Labs v LinkedIn first made headlines last year, when LinkedIn attempted to block the data analytics company from using its data for commercial benefit.

HiQ Labs used software to extract LinkedIn data in order to build algorithms for products capable of predicting employee behaviours, such as when an employee might quit their job.

This technique, known as web scraping, is the automated process of extracting data from the HTML of a web page.
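To make that definition concrete, here is a minimal sketch that uses only Python's standard library. The HTML fragment, the class names in it and the `NameExtractor` class are made up for illustration; real pages are far larger and messier.

```python
from html.parser import HTMLParser

# Hypothetical page fragment, standing in for a downloaded web page.
SAMPLE_HTML = """
<html><body>
  <h1 class="profile-name">Ada Lovelace</h1>
  <h2 class="headline">Analyst</h2>
</body></html>
"""


class NameExtractor(HTMLParser):
    """Collects the text found inside every <h1> element."""

    def __init__(self):
        super().__init__()
        self._in_h1 = False
        self.names = []

    def handle_starttag(self, tag, attrs):
        if tag == "h1":
            self._in_h1 = True

    def handle_endtag(self, tag):
        if tag == "h1":
            self._in_h1 = False

    def handle_data(self, data):
        # Only keep non-empty text that appears while inside an <h1>.
        if self._in_h1 and data.strip():
            self.names.append(data.strip())


parser = NameExtractor()
parser.feed(SAMPLE_HTML)
print(parser.names)
```

The rest of this article does the same thing at a larger scale, with a real browser fetching the HTML.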

How hard can it be?

LinkedIn has since made its site more restrictive to web scraping tools. With this in mind, I decided to attempt extracting data from LinkedIn profiles just to see how difficult it would be, especially as I am still in my infancy of learning Python.

Tools Required

For this task I will be using Selenium, which is a tool for writing automated tests for web applications. The number of web pages you can scrape on LinkedIn is limited, which is why I will only be scraping key data points from 10 different user profiles.

Prerequisite Downloads & Installs

Download ChromeDriver, which is a separate executable that WebDriver uses to control Chrome. You will also need the Google Chrome browser installed for this to work.

Open your Terminal and enter the following install commands needed for this task. The time and csv modules ship with Python's standard library, so they do not need to be installed with pip.

pip3 install ipython

pip3 install selenium

pip3 install parsel
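A quick way to confirm the setup is to ask Python which of the modules used in this article it can find. This is a small sketch, and `missing_modules` is a hypothetical helper name. Note that time and csv are part of the standard library, so they should never show up as missing.

```python
import importlib.util


def missing_modules(names):
    """Return the module names that Python cannot locate for import."""
    return [name for name in names if importlib.util.find_spec(name) is None]


# time and csv ship with Python; selenium and parsel are installed with pip.
required = ["time", "csv", "selenium", "parsel"]
print("Missing:", missing_modules(required))
```

An empty list means everything needed for the scraper is importable.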

Automate LinkedIn Login

In order to guarantee access to user profiles, we will need to log in to a LinkedIn account, so we will also automate this process.

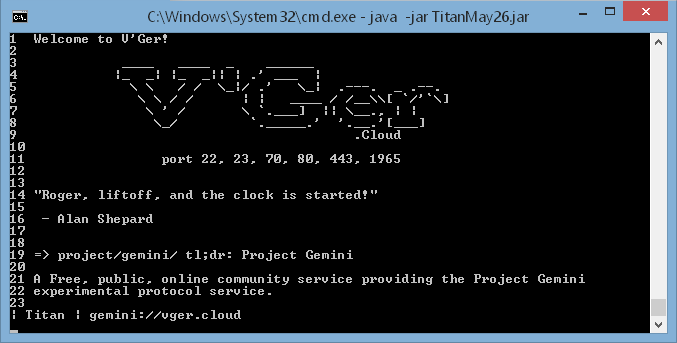

Open a new terminal window and type “ipython”, which is an interactive shell built with Python. It offers different features including proper indentation and syntax highlighting.

We will be using the ipython terminal to execute and test each command as we go, instead of having to execute a file. Within your ipython terminal, execute each line of code listed below, excluding the comments. We will create a variable “driver” which is an instance of Google Chrome, required to perform our commands.

from selenium import webdriver

driver = webdriver.Chrome('/Users/username/bin/chromedriver')

driver.get('')

The driver.get() method will navigate to the LinkedIn website and the WebDriver will wait until the page has fully loaded before another command can be executed. If you have installed everything listed and executed the above lines correctly, the Google Chrome application will open and navigate to the LinkedIn website.

The above notification banner should be displayed informing you that WebDriver is controlling the browser.

To populate the text fields on the LinkedIn homepage with an email address and password, right-click on the webpage and click Inspect; the Dev Tools window will appear.

Clicking on the circled Inspect Elements icon, you can hover over any element on the webpage and the HTML markup will appear highlighted as seen above. The class and id attributes have the value “login-email”, so we can choose either one to use.

WebDriver offers a number of ways to find an element starting with “find_element_by_” and by using tab we can display all methods available.

The below lines will find the email element on the page and the send_keys() method contains the email address to be entered, simulating key strokes.

username = driver.find_element_by_class_name('login-email')

username.send_keys('')

Finding the password element is the same process as for the email element, with the values for its class and id being “login-password”.

password = driver.find_element_by_class_name('login-password')

password.send_keys('xxxxxx')

Additionally we have to locate the submit button in order to successfully log in. Below are 3 different ways in which we can find this element, but we only require one. The click() method will mimic a button click which submits our login request.

log_in_button = driver.find_element_by_class_name('login-submit')

log_in_button = driver.find_element_by_id('login submit-button')

log_in_button = driver.find_element_by_xpath('//*[@type="submit"]')

log_in_button.click()
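The find_element_by_* helpers used in this article were removed in Selenium 4.3; newer releases use driver.find_element() with a locator strategy from the By class. The sketch below repeats the same login steps in that style. The `log_in` function name is hypothetical, and the class names are the ones from this article, which may no longer match LinkedIn's current markup.

```python
try:
    from selenium.webdriver.common.by import By
    CLASS_NAME, XPATH = By.CLASS_NAME, By.XPATH
except ImportError:
    # Fallback so the sketch can be dry-run without Selenium installed;
    # these strings are the values of By.CLASS_NAME and By.XPATH.
    CLASS_NAME, XPATH = "class name", "xpath"


def log_in(driver, email, password):
    """Fill in the login form and submit it (hypothetical helper)."""
    driver.find_element(CLASS_NAME, "login-email").send_keys(email)
    driver.find_element(CLASS_NAME, "login-password").send_keys(password)
    driver.find_element(XPATH, '//*[@type="submit"]').click()
```

With a real browser you would pass in the “driver” variable created earlier, after it has navigated to the login page.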

Once all command lines from the ipython terminal have been successfully tested, copy each line into a new python file (Desktop/). Within a new terminal (not ipython) navigate to the directory that the file is contained in and execute the file using a similar command.

cd Desktop

python

That was easy!

If your LinkedIn credentials were correct, a new Google Chrome window should have appeared, navigated to the LinkedIn webpage and logged into your account.

Code so far…

“”” filename: “””

writer = csv.writer(open(parameters.file_name, 'wb'))

writer.writerow(['Name', 'Job Title', 'Company', 'College', 'Location', 'URL'])

username.send_keys(parameters.linkedin_username)

sleep(0.5)

password.send_keys(parameters.linkedin_password)

sign_in_button = driver.find_element_by_xpath('//*[@type="submit"]')

Searching LinkedIn profiles on Google

After successfully logging into your LinkedIn account, we will navigate back to Google to perform a specific search query. Similarly to what we have previously done, we will select an attribute for the main search form on Google.

We will use the name='q' attribute to locate the search form and, continuing on from our previous code, we will add the following lines below.

search_query = driver.find_element_by_name('q')

search_query.send_keys(' AND "python developer" AND "London"')

search_query.send_keys(Keys.RETURN)

The search query ' AND "python developer" AND "London"' will return 10 LinkedIn profiles per page.
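As an alternative to typing into the search box, the same query can be turned into a search URL and opened directly. This is only a sketch: `build_search_url` is a hypothetical helper, the `https://www.google.com/search?q=` URL format is an assumption, and so is the `site:linkedin.com/in/` filter, since the site filter is not shown in the query above.

```python
from urllib.parse import quote_plus


def build_search_url(site_filter, *phrases):
    """Join a site filter and quoted phrases with AND, then URL-encode them."""
    terms = [site_filter] + ['"{}"'.format(phrase) for phrase in phrases]
    query = " AND ".join(terms)
    return "https://www.google.com/search?q=" + quote_plus(query)


# Assumed site filter; adjust it to the query you actually use.
url = build_search_url("site:linkedin.com/in/", "python developer", "London")
print(url)
```

The resulting string could then be passed to driver.get() instead of filling in the search form.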

Next we will be extracting the green URLs of each LinkedIn user's profile. After inspecting the elements on the page, these URLs are contained within a “cite” class. However, after testing within ipython to return the list length and contents, I saw that some advertisements were being extracted, which also include a URL within a “cite” class.

Using Inspect Element on the webpage I checked to see if there was any unique identifier separating LinkedIn URLs from the advertisement URLs.

As you can see above, the class value “iUh30” for LinkedIn URLs is different to that of the advertisement values of “UdQCqe”. To avoid extracting unwanted advertisements, we will only specify the “iUh30” class to ensure we only extract LinkedIn profile URLs.

linkedin_urls = driver.find_elements_by_class_name('iUh30')

We then reassign the “linkedin_urls” variable to a list comprehension, which loops over each element in the list and extracts its text.

linkedin_urls = [url.text for url in linkedin_urls]

Once you have assigned the variable “linkedin_urls” you can use it to return the full list contents or to return specific elements within our list as seen below.

linkedin_urls

linkedin_urls[0]

linkedin_urls[1]

In the ipython terminal below, all 10 account URLs are contained within the list.
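Class names such as “iUh30” are generated by Google and can change, so it may be worth filtering on the URL text as well. The sketch below shows the same list comprehension with an extra condition. The elements are simple stand-ins for Selenium's WebElement objects and the URLs are made up.

```python
from types import SimpleNamespace

# Stand-ins for the WebElement objects Selenium returns; only .text is used.
elements = [
    SimpleNamespace(text="https://uk.linkedin.com/in/example-one"),
    SimpleNamespace(text="https://www.example.com/advert"),
    SimpleNamespace(text="https://uk.linkedin.com/in/example-two"),
]

# Keep the text of each element, skipping anything that is not a profile URL.
linkedin_urls = [el.text for el in elements if "linkedin.com/in/" in el.text]

print(len(linkedin_urls))
print(linkedin_urls[0])
```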

Next we will create a new Python file called “parameters.py” to contain variables such as search query, file name, email and password, which will simplify our main script.

search_query = ' AND "python developer" AND "London"'

file_name = ''

linkedin_username = ''

linkedin_password = 'xxxxxx'

As we are storing these variables within a separate file, we need to import it in order to reference these variables from within the main script. Ensure both files are in the same folder or directory.

import parameters

As all the variables are now defined in the imported parameters module, we need to make changes within our main script to reference these values through the module.

search_query.send_keys(parameters.search_query)

password.send_keys(parameters.linkedin_password)

As we previously imported the sleep method from the time module, we will use this to add pauses between different actions to allow the commands to be fully executed without interruption.

sleep(2)

from time import sleep

from selenium.webdriver.common.keys import Keys

driver.get('')

sleep(3)

search_query.send_keys(parameters.search_query)
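Fixed sleep() pauses are simple, but they either waste time or turn out too short on a slow connection. One alternative is to poll for a condition until it becomes true or a timeout expires. The helper below is a generic sketch with a hypothetical name, `wait_until`; Selenium also ships its own WebDriverWait class for the same purpose.

```python
import time


def wait_until(condition, timeout=10.0, interval=0.5):
    """Call condition() until it returns a truthy value or the timeout expires.

    Returns the truthy value, or None if the timeout was reached.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            return None
        time.sleep(interval)
```

In the scraper, the condition could be a function that looks up the search box and returns it once it is present on the page.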

The fun part, scraping data

To scrape data points from a web page we will need to make use of Parsel, which is a library for extracting data points from websites. As we have already installed this at the start, we also need to import this module within our main script.

from parsel import Selector

After importing parsel within your ipython terminal, enter “driver.page_source” to load the full source code of the Google search webpage, which looks like something from the Matrix.

As we want to extract data from a LinkedIn account, we need to navigate to one of the profile URLs returned from our search within the ipython terminal, not via the browser.

driver.page_source

We will create a For Loop to incorporate these commands into our main script and iterate over each URL in the list. On each iteration, the “linkedin_url” variable holds the current LinkedIn profile URL, which driver.get() navigates to.

for linkedin_url in linkedin_urls:

    driver.get(linkedin_url)

    sleep(5)

    sel = Selector(text=driver.page_source)

Lastly we have defined a “sel” variable, assigning it the full source code of the LinkedIn user's account.

Finding Key Data Points

Using the below LinkedIn profile as an example, you can see that multiple key data points have been highlighted, which we can extract.

Like we have done previously, we will use the Inspect Element on the webpage to locate the HTML markup we need in order to correctly extract each data point. Below are two possible ways to extract the full name of the user.

name = sel.xpath('//h1/text()').extract_first()

name = sel.xpath('//*[starts-with(@class, "pv-top-card-section__name")]/text()').extract_first()

When running the commands in the ipython terminal I noticed that the text isn’t always formatted correctly, as seen below with the Job Title.

However, by using an IF statement for job_title we can use the strip() method, which will remove the new line symbol and white spaces.

if job_title:

    job_title = job_title.strip()

Continue to locate each attribute and its value for each data point you want to extract. I recommend using the class name to locate each data point instead of heading tags, e.g. h1, h2. By adding further IF statements for each data point we can handle any text that may not be formatted correctly.
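The two ideas above, matching on the start of a class name and stripping the extracted text only when something was found, can be tried out without a browser. The sketch below swaps Parsel for the standard library's ElementTree so it runs anywhere; it only works on well-formed markup, which real pages rarely are. The fragment is made up and `first_text` is a hypothetical helper.

```python
import xml.etree.ElementTree as ET

# Made-up, well-formed fragment using the class names from this article.
SAMPLE = """
<div>
  <h1 class="pv-top-card-section__name inline">Ada Lovelace</h1>
  <h2 class="pv-top-card-section__headline mt1">
      Python Developer
  </h2>
</div>
"""


def first_text(root, class_prefix):
    """Return the stripped text of the first element whose class attribute
    starts with class_prefix, or None if there is no such element."""
    for element in root.iter():
        if element.get("class", "").startswith(class_prefix):
            text = element.text
            return text.strip() if text else None
    return None


root = ET.fromstring(SAMPLE)
print(first_text(root, "pv-top-card-section__name"))
print(first_text(root, "pv-top-card-section__headline"))
print(first_text(root, "pv-top-card-section__location"))
```

The last call returns None because the fragment has no location element, which is the same situation a profile with a missing data point produces.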

Below is an example of extracting all 5 data points previously highlighted.

name = sel.xpath('//*[starts-with(@class, "pv-top-card-section__name")]/text()').extract_first()

if name:

    name = name.strip()

job_title = sel.xpath('//*[starts-with(@class, "pv-top-card-section__headline")]/text()').extract_first()

if job_title:

    job_title = job_title.strip()

company = sel.xpath('//*[starts-with(@class, "pv-top-card-v2-section__entity-name pv-top-card-v2-section__company-name")]/text()').extract_first()

if company:

    company = company.strip()

college = sel.xpath('//*[starts-with(@class, "pv-top-card-v2-section__entity-name pv-top-card-v2-section__school-name")]/text()').extract_first()

if college:

    college = college.strip()

location = sel.xpath('//*[starts-with(@class, "pv-top-card-section__location")]/text()').extract_first()

if location:

    location = location.strip()

linkedin_url = driver.current_url

Printing to console window

After extracting each data point we will output the results to the terminal window using the print() statement, adding a newline before and after each profile to make it easier to read.

print('\n')

print('Name: ' + name)

print('Job Title: ' + job_title)

print('Company: ' + company)

print('College: ' + college)

print('Location: ' + location)

print('URL: ' + linkedin_url)

At the beginning of our code, below our imports section we will define a new variable “writer”, which will create the csv file and insert the column headers listed below.

The previously defined “file_name” comes from the imported parameters module, and the second parameter 'wb' opens the file for writing in binary mode, which the csv module expects under Python 2; under Python 3, open the file in text mode with 'w' and newline='' instead. The writerow() method is used to write each column heading to the csv file, matching the order in which we will print them to the terminal console.

Printing to CSV

As we have printed the output to the console, we also need to write the output to the csv file we have created. Again we are using the writerow() method to pass in each variable to be written to the csv file.

writer.writerow([name.encode('utf-8'),

                 job_title.encode('utf-8'),

                 company.encode('utf-8'),

                 college.encode('utf-8'),

                 location.encode('utf-8'),

                 linkedin_url.encode('utf-8')])

We are encoding with utf-8 to ensure all characters extracted from each profile get loaded correctly.
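If you are running Python 3, which the pip3 commands suggest, the csv module works with text rather than bytes, so the encoding is set once when the file is opened instead of on every value. Here is a minimal sketch of that variant; `write_profiles` is a hypothetical helper and the profile row is made up. It writes to an in-memory buffer so it can be run without touching the disk.

```python
import csv
import io


def write_profiles(stream, rows):
    """Write the header row followed by one row per profile."""
    writer = csv.writer(stream)
    writer.writerow(["Name", "Job Title", "Company", "College", "Location", "URL"])
    writer.writerows(rows)


# Made-up profile used only to exercise the function.
rows = [["Zoë Müller", "Python Developer", "Example Ltd", "No results",
         "London", "https://www.linkedin.com/in/example"]]

# For a real file under Python 3, open it in text mode instead:
#   open(file_name, "w", newline="", encoding="utf-8")
buffer = io.StringIO(newline="")
write_profiles(buffer, rows)
print(buffer.getvalue())
```

Passing newline="" lets the csv module control line endings itself, which avoids blank rows appearing between records on Windows.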

Fixing things

If we were to execute our current code within a new terminal we will encounter an error similar to the one below. It is failing to concatenate a string to display the college value as there is no college displayed on this profile and so it contains no value.

To account for profiles with missing data points, we can write a function “validate_field” which takes “field” as a parameter. Ensure this function is placed at the start of this application, just under the imports section.

def validate_field(field):

    if not field:

        field = 'No results'

    return field

In order for this function to actually work, we have to add the below lines to our code, which validate whether each field exists. If the field doesn’t exist, the text “No results” will be assigned to the variable. Add these lines before printing the values to the console window.

name = validate_field(name)

job_title = validate_field(job_title)

company = validate_field(company)

college = validate_field(college)

location = validate_field(location)

linkedin_url = validate_field(linkedin_url)
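To see why this fixes the error, here is a small self-contained run of the same idea with made-up values. Parsel's extract_first() returns None when nothing matches, and concatenating None to a string is what raised the original error.

```python
def validate_field(field):
    """Return a placeholder when a scraped value is missing or empty."""
    if not field:
        field = 'No results'
    return field


# Hypothetical scraped values: the profile shows a location but no college.
college = None
location = 'London, United Kingdom'

college = validate_field(college)
location = validate_field(location)

# Concatenation now works because neither value is None.
print('College: ' + college)
print('Location: ' + location)
```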

Let’s run our code..

Finally we can run our code from the terminal, with the output printing to the console window and being written to the csv file named in “file_name”.

Things you could add..

You could easily amend my code to automate lots of cool things on any website to make your life much easier. As this application was written for demonstration and learning purposes, I have overlooked aspects of the code which could be improved, such as error handling.

Final code…

It was a long process to follow but I hope you found it interesting. Ultimately LinkedIn, like most other sites, is pretty straightforward to scrape data from, especially using the Selenium tool. The full code can be requested by directly contacting me via LinkedIn.

Questions to be answered…

Are LinkedIn right in trying to prevent third party companies from extracting our publicly shared data for commercial purposes, such as HR departments or recruitment agencies?

Is LinkedIn trying to protect our data or hoard it for themselves, holding a monopoly on our lucrative data?

Personally, I think that any software which can be used to help recruiters or companies match skilled candidates to better suited jobs is a good thing.

How to build a Web Scraper for Linkedin using Selenium and …

Photo by Alexander Shatov on Unsplash

Hey there! Building Machine Learning algorithms to fetch and analyze Linkedin data is a popular idea among ML enthusiasts. But one snag everyone comes across is the lack of data, considering how tedious data collection from Linkedin is. In this article, we will be going over how you can build and automate your very own web scraping tool to extract data from any Linkedin profile using just Selenium and Beautiful Soup. This is a step-by-step guide complete with code snippets at each step. I’ve added the GitHub repository link at the end of the article for those who would want the complete code.

Python (obviously; 3+ recommended)

Beautiful Soup (a library that makes it easy to scrape information from web pages)

Selenium (the selenium package is used to automate web browser interaction from Python)

A WebDriver; I’ve used the Chrome WebDriver. Additionally, you’ll also need the pandas, time, and regex libraries.

A code editor; I used Jupyter Notebook, you may use Vscode/Atom/Sublime or any of your choice.

Run pip install selenium to install the Selenium library.

Run pip install beautifulsoup4 to install the Beautiful Soup library.

You can download the Chrome WebDriver from here:

Here’s a complete list of all the topics covered in this article:

How to automate Linkedin using Selenium.

How to use BeautifulSoup to extract posts and their authors from the given Linkedin profiles.

Writing the data to a csv or xlsx file to be made available for later use.

How to automate the process to get posts of multiple authors in one go.

We’ll start by importing everything we’ll need.

from selenium import webdriver

from selenium.webdriver.common.keys import Keys

from selenium.webdriver.common.by import By

from selenium.webdriver.support.ui import WebDriverWait

from selenium.webdriver.support import expected_conditions as EC

from bs4 import BeautifulSoup as bs

import re as re

import time

import pandas as pd

In addition to selenium and beautiful soup, we’ll also be using the regex library to get the author of the posts, the time library to use time functionalities such as sleep, and pandas to handle large-scale data and for writing into spreadsheets.
More on that coming right up! Selenium needs a few things to start the automation process: the location of the web driver in your system, a username, and a password to log in with. So let’s start by getting those and storing them in variables PATH, USERNAME, and PASSWORD.

PATH = input("Enter the Webdriver path: ")

USERNAME = input("Enter the username: ")

PASSWORD = input("Enter the password: ")

print(PATH)

print(USERNAME)

print(PASSWORD)

Now we’ll initialize our web driver in a variable that Selenium will use to carry out all the operations. Let’s call it driver. We tell it the location of the web driver, i.e., PATH.

driver = webdriver.Chrome(PATH)

Next, we’ll tell our driver the link it should fetch. In our case, it’s Linkedin’s home page.

driver.get("")

time.sleep(3)

You’ll notice, I’ve used the sleep function in the above code snippet. You’ll find it used a lot in this article. The sleep function basically halts any process (in our case, the automation process) for the specified number of seconds. You can freely use it to pause the process anywhere you need to, like in cases where you have to bypass a captcha.

We’ll now tell the driver to log in with those credentials.

email = driver.find_element_by_id("username")

email.send_keys(USERNAME)

password = driver.find_element_by_id("password")

password.send_keys(PASSWORD)

time.sleep(3)

password.send_keys(Keys.RETURN)

Now let’s create a few lists to store data such as the profile links, the post content, and the author of each post. We’ll call them post_links, post_texts, and post_names.

Photo by inlytics | LinkedIn Analytics Tool on Unsplash

Once that’s done, we’ll start the actual web scraping process. Let’s declare a function so we can use our web scraping code to fetch posts from multiple accounts in recursion. We’ll call it, that’s quite a long function. Worry not! I’ll explain it step by step. Let’s first go over what our function takes in. It takes 3 arguments, i.e., post_links, post_texts, and post_names as a, b, and c. Now we’ll go into the internal working of the function.
It first takes the profile link and slices off the profile name. We then use the driver to fetch the “posts” section of the user’s profile. The driver scrolls through the posts collecting the posts’ data using beautiful soup and storing them in “containers”.

Line 17 of the above-mentioned code governs how long the driver gets to collect posts. In our case, it’s 20 seconds, but you may change it to suit your needs.

if round(end-start)>20: break

except: pass

We also get the number of posts the user wants from each account and store it in a variable ‘nos’. Finally, we iterate through each “container”, fetching the post data stored in it and appending it to our post_texts list along with post_names. We break the loop when the desired number of posts is reached.

You’ll notice, we’ve enclosed the container iteration loop in a try-except block. This is done as a safety measure against possible errors.

That’s all about our function! Now, time to put our function to use! We get a list of the profiles from the user and send it to the function in recursion to repeat the data collection process for all of them. The function returns two lists: post_texts, containing all the posts’ data, and post_names, containing all the corresponding authors of the posts.

Now we’ve reached the most important part of our automation: saving the data!

data = { "Name": post_names, "Content": post_texts, }

df = pd.DataFrame(data)

df.to_csv("", encoding='utf-8', index=False)

writer = pd.ExcelWriter("", engine='xlsxwriter')

df.to_excel(writer, index=False)

writer.save()

We create a dictionary with the lists returned by the function and save it to a variable ‘df’ using pandas. You could either choose to save the collected data as a csv file or an xlsx file.

For csv:

df.to_csv("", encoding='utf-8', index=False)

For xlsx:

writer = pd.ExcelWriter("", engine='xlsxwriter')

df.to_excel(writer, index=False)

writer.save()

In the above code snippets, I’ve given a sample file name, ‘text1’. You may give any file name of your choice! Phew!
That was long, but we’ve managed to successfully create a fully automated web scraper that’ll get you any Linkedin post’s data in a jiffy! Hope that was helpful! Do keep an eye out for more such articles in the future! Here’s the link to the complete code on GitHub:

Thanks for stopping by! Happy Learning!
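The time-limited collection step described in this article, where the driver keeps scrolling until roughly 20 seconds have passed, can be sketched on its own. This is not the article's function, whose code is not reproduced here; `collect_for` and `step` are hypothetical names, and in a real scraper `step` would scroll the page and gather the newly loaded posts.

```python
import time


def collect_for(seconds, step):
    """Call step() repeatedly until `seconds` have elapsed or step() returns False.

    Returns the number of times step() was called.
    """
    start = time.monotonic()
    calls = 0
    while True:
        calls += 1
        # A step can end the loop early by returning False, e.g. when
        # there is nothing left to scroll.
        if step() is False:
            break
        if time.monotonic() - start > seconds:
            break
    return calls
```

Keeping the time limit as a parameter makes it easy to raise or lower it depending on how many posts you need.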

Supreme Court revives LinkedIn case to protect user data from …

The Supreme Court has given LinkedIn another chance to stop a rival company from scraping personal information from users’ public profiles, a practice LinkedIn says should be illegal but one that could have broad ramifications for internet researchers and archivists.

LinkedIn lost its case against HiQ Labs in 2019 after the U.S. Ninth Circuit Court of Appeals ruled that the CFAA does not prohibit a company from scraping data that is publicly accessible on the internet.

The Microsoft-owned social network argued that the mass scraping of its users’ profiles was in violation of the Computer Fraud and Abuse Act, or CFAA, which prohibits accessing a computer without authorization.

HiQ Labs, which uses public data to analyze employee attrition, argued at the time that a ruling in LinkedIn’s favor “could profoundly impact open access to the Internet, a result that Congress could not have intended when it enacted the CFAA over three decades ago.”

The Supreme Court said it would not take on the case, but instead ordered the appeals court to hear the case again in light of its recent ruling, which found that a person cannot violate the CFAA if they improperly access data on a computer they have permission to use.

The CFAA was once dubbed the “worst law” in the technology law books by critics who have long argued that its outdated and vague language failed to keep up with the pace of the modern internet.

Journalists and archivists have long scraped public data as a way to save and archive copies of old or defunct websites before they shut down. But other cases of web scraping have sparked anger and concerns over privacy and civil liberties. In 2019, a security researcher scraped millions of Venmo transactions, which the company does not make private by default. Clearview AI, a controversial facial recognition startup, claimed it scraped over 3 billion profile photos from social networks without their permission.

An earlier version of this article misattributed a Facebook lawsuit. We regret the error.