Hackernoon Web Scraping

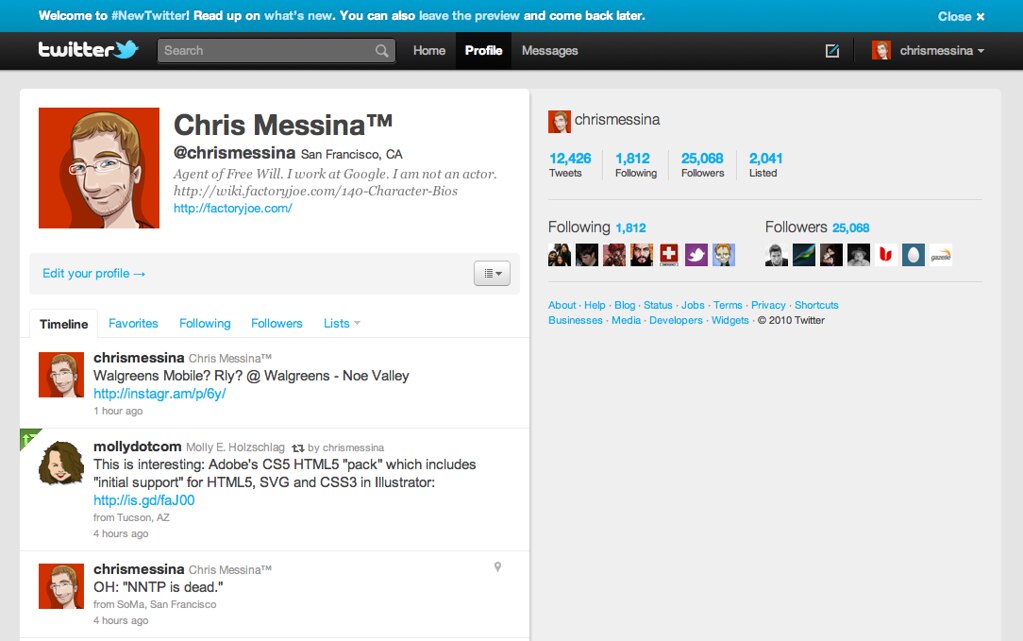

Building a Web Scraper from start to finish | Hacker Noon

What is a Web Scraper?

A web scraper is a program that, quite literally, scrapes or gathers data off of websites. Take the hypothetical example below, where we might build a web scraper that goes to Twitter and gathers the content of tweets.

In the above example, we might use a web scraper to gather data from Twitter. We might limit the gathered data to tweets about a specific topic, or by a specific author. As you might imagine, the data that we gather from a web scraper is largely decided by the parameters we give the program when we build it.

At the bare minimum, each web scraping project needs a URL to scrape from. In this case, the URL would be the address of the Twitter page we want to scrape. Secondly, a web scraper needs to know which tags to look in for the information we want to scrape. In the above example, we can see that there is a lot of information we wouldn't want to scrape, such as the header, the logo, navigation links, etc. Most of the actual tweets would probably be in a paragraph tag, or have a specific class or other identifying feature. Knowing how to identify where the information sits on the page takes a little research before we build the scraper.

At this point, we could build a scraper that would collect all the tweets on a page. This might be useful. Or, we could further filter the scrape by specifying that we only want to scrape a tweet if it contains certain content. Still looking at our first example, we might be interested in only collecting tweets that mention a certain word or topic, like “Governor.” It might be easier to collect a larger group of tweets and parse them later on the back end, or we could filter some of the results here.

Why are web scrapers useful?

We've partially answered this question in the first section. Web scraping could be as simple as identifying content from a large page, or from multiple pages of information. However, one of the great things about scraping the web is that it not only lets us identify useful and relevant information, but also lets us store that information for later use. In the above example, we might want to store the data we've collected from tweets so that we could see when tweets were the most frequent, what the most common topics were, or which individuals were mentioned the most.

What prerequisites do we need to build a web scraper?

Before we get into the nuts and bolts of how a web scraper works, let's take a step back and talk about where web scraping fits into the broader ecosystem of web technologies. Take a look at the simple workflow below:

The basic idea of web scraping is that we take existing HTML data, use a web scraper to identify the data we want, and convert it into a useful format. The end stage is to have this data stored as JSON, or in another useful format. As you can see from the diagram, we could use any technology we'd prefer to build the actual web scraper, such as Python, PHP, or even Node, just to name a few. For this example, we'll focus on using Python and its accompanying library, Beautiful Soup. It's also important to note that in order to build a successful web scraper, we'll need to be at least somewhat familiar with HTML structures and data formats like JSON.

To make sure that we're all on the same page, we'll cover each of these prerequisites in some detail, since it's important to understand how each technology fits into a web scraping project.
The prerequisites we'll talk about next are:

- HTML structures
- Python basics
- Python libraries
- Storing data as JSON (JavaScript Object Notation)

If you're already familiar with any of these, feel free to skip ahead.

1. HTML Structures

1. a. Identifying HTML Tags

If you're unfamiliar with the structure of HTML, a great place to start is by opening up Chrome Developer Tools. Other browsers like Firefox and Internet Explorer also have developer tools, but for this example, I'll be using Chrome. Click the three vertical dots in the upper right corner of the browser, then the ‘More Tools’ option, and then ‘Developer Tools’. A panel will pop up that looks like the following:

We can quickly see how the current HTML site is structured. All of the content is contained in specific ‘tags’. The current heading is in an “h1” tag, while most of the paragraphs are in “p” tags. Each of the tags also has other attributes like “class” or “name”.

We don't need to know how to build an HTML site from scratch. To build a web scraper, we only need to know the basic structure of the web and how to identify specific web elements. Chrome's developer tools, and those of other browsers, show us which tags contain the information we want to scrape. They also show other attributes, like “class”, that might help us select only specific elements.

Let's look at what a typical HTML structure might look like:

This is similar to what we just looked at in the Chrome dev tools. Here, we can see that all the elements in the HTML are contained within the opening and closing ‘body’ tags. Every element also has its own opening and closing tag. An element that is nested or indented inside another is a child element of its container, or parent element. Once we start making our Python web scraper, we can identify the elements we want to scrape based not only on the tag name, but also on whether the element is a child of another element.

For example, we can see here that there is a “ul” tag in this structure, indicating an unordered list. Each list element, “li”, is a child of the parent “ul” tag. If we wanted to select and scrape the entire list, we might tell Python to grab all of the child elements of the “ul” element.

Now, let's take a closer look at HTML elements. Building off of the previous example, here is our “h1”, or header, element:

Knowing how to specify which elements we want to scrape can be very important. For example, if we told Python we want the “h1” element, that would be fine, unless there are several “h1” elements on the page. If we only want the first “h1” or the last, we might need to be specific in telling Python exactly what we want. Most elements also give us “class” and “id” attributes. If we wanted to select only this “h1” element, we might be able to do so by telling Python, in essence, “Give me the h1 element that has the class ‘myClass’.” ID selectors are even more specific. If a class attribute returns more elements than we want, selecting with the ID attribute may do the trick.

2. Python Basics

2. a. Setting Up a New Project

One advantage of building a web scraper in Python is that Python's syntax is simple and easy to understand. We could be up and running with a Python web scraper in a matter of minutes. If you haven't already installed Python, go ahead and do that now:

We'll also need to decide on a text editor. I'm using ATOM, but there are plenty of other choices that all do much the same thing. Because web scrapers are fairly straightforward, which text editor to use is entirely up to us. If you'd like to give ATOM a try, feel free to download it here:

Now that we have Python installed and a text editor of our choice, let's create a new Python project folder. First, navigate to wherever we want to create this project. I prefer throwing everything on my already over-cluttered desktop. Then create a new folder, and inside the folder, create a file for the scraping script. We'll also want to make a second file, for the parsing script, in the same folder. At this point, we should have something similar to this:

One obvious difference is that we don't yet have any data. The data will be what we've retrieved from the web. If we think about what our workflow might be for this project, we might imagine it looking something like this:

First, there's the raw HTML data that's out there on the web. Next, we use a program we create in Python to scrape/collect the data we want. The data is then stored in a format we can use. Finally, we can parse the data to find relevant information. The scraping and the parsing will be handled by separate Python scripts. The first will collect the data.
The second will parse through the data we've collected.

If you're more comfortable setting up this project via the command line, feel free to do that instead.

2. b. Python Virtual Environments

We're not quite done setting up the project yet. In Python, we'll often use libraries as part of our project. Libraries are like packages that contain additional functionality for our project. In our case, we'll use two libraries: Beautiful Soup and Requests. The Requests library allows us to make requests to URLs and access the data on those HTML pages. Beautiful Soup gives us some easy ways to identify the tags we discussed earlier, straight from our Python script.

If we installed these packages globally on our machines, we could face problems if we continued to develop other applications. For example, one program might use version 1 of the Requests library, while a later application might use version 2. This could cause a conflict, making either or both applications difficult to maintain.

To solve this problem, it's a good idea to set up a virtual environment. These virtual environments are like capsules for the application. If we created a virtual environment for each application, we could run version 1 of a library in one application and version 2 in another, without conflict.

Now, let's bring up the terminal window, as the next few commands are easiest to do from the terminal. On OS X, we'll open the Applications folder, then open the Utilities folder. Open the Terminal application. We may want to add this to our dock as well.

On Windows, we can also find the terminal/command line by opening our Start Menu and searching. It's simply an app located at C:\Windows.

Now that we have the terminal open, we should navigate to our project folder and use the following command to build the virtual environment:

    python3 -m venv tutorial-env

This step creates the virtual environment, but right now it's just dormant. In order to use the virtual environment, we'll also need to activate it.
We can do this by running the following command in our terminal.

On Mac:

    source tutorial-env/bin/activate

Or on Windows:

    tutorial-env\Scripts\activate

3. Python Libraries

3. a. Installing Libraries

Now that we have our virtual environment set up and activated, we'll want to install the libraries we mentioned earlier. To do this, we'll use the terminal again, this time installing the libraries with the pip installer. Let's install Beautiful Soup (published on PyPI as beautifulsoup4) and then Requests.

And we're done. Well, at least we have our environment and libraries up and running.

3. b. Importing Installed Libraries

First, let's open up our scraper file. Here, we'll set up all of the logic that will actually request the data from the site we want to scrape.

The very first thing we'll need to do is let Python know that we're actually going to use the libraries we just installed. We can do this by importing them into our Python file. It's a good idea to put all of our imports at the top of the file and all of our logic after them. To import both of our libraries, we'll just include the following lines at the top of our file:

    from bs4 import BeautifulSoup
    import requests

If we wanted to install other libraries for this project, we could do so through the pip installer, and then import them at the top of our file. One thing to be aware of is that some libraries are quite large and can take up a lot of space. It may be difficult to deploy a site we've worked on if it is bloated with too many large packages.

3. c. Python's Requests Library

Requests with Python and Beautiful Soup will basically have three parts: the URL, the RESPONSE, and the CONTENT.

The URL is simply a string that contains the address of the HTML page we intend to scrape.

The RESPONSE is the result of a GET request, which we make using the URL variable. If we print the response, we'll see its HTTP status code. If the request was successful, we'll get a successful status code like 200.
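The three parts above can be sketched with Requests like this. It is not the author's code: to stay runnable offline, it serves a tiny hypothetical page from Python's built-in http.server on a local port instead of requesting a real site, and the fetch helper name is our own.

```python
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

import requests

# Hypothetical demo page; a real scraper would request a public URL instead.
PAGE = b"<html><body><p class='content'>Hello from a local page</p></body></html>"


class DemoHandler(BaseHTTPRequestHandler):
    """Serves PAGE at "/" and a 404 status code for every other path."""

    def do_GET(self):
        if self.path == "/":
            self.send_response(200)
            self.send_header("Content-Type", "text/html")
            self.end_headers()
            self.wfile.write(PAGE)
        else:
            self.send_error(404)

    def log_message(self, format, *args):
        pass  # silence the per-request log lines


def fetch(url):
    """Hypothetical helper: return the status code and raw content for url."""
    response = requests.get(url, timeout=10)  # RESPONSE: result of the GET request
    return response.status_code, response.content  # CONTENT: the page's bytes


# Start the demo server on a free local port (port 0 lets the OS choose one).
server = HTTPServer(("127.0.0.1", 0), DemoHandler)
threading.Thread(target=server.serve_forever, daemon=True).start()
base_url = f"http://127.0.0.1:{server.server_address[1]}"  # URL

status, content = fetch(base_url + "/")
print(status)   # a successful status code
print(content)  # the whole page, tags and all

missing_status, _ = fetch(base_url + "/missing")
print(missing_status)  # unsuccessful: look this code up when troubleshooting

server.shutdown()
server.server_close()
```

In a real scraper, base_url would be the page you want to scrape. The local server exists only so the sketch runs anywhere.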
If there was a problem with the request, or the server doesn't respond to the request we made, the status code could be unsuccessful. If we don't get what we want, we can look up the status code to troubleshoot what the error might be. Here's a helpful resource for finding out what the codes mean, in case we do need to troubleshoot them:

Finally, the CONTENT is the content of the response. If we print the entire response content, we'll get all the content on the entire page of the URL we've requested.

4. Storing Data as JSON

If you don't want to spend the time scraping and want to jump straight to manipulating data, here are several of the datasets I used for this exercise:

4. a. Viewing Scraped Data

Now that we know more or less how our scraper will be set up, it's time to find a site that we can actually scrape. Previously, we looked at some examples of what a Twitter scraper might look like, and some of the use cases of such a scraper. However, we probably won't actually scrape Twitter here, for a couple of reasons. First, Twitter's content is dynamically generated. That makes it harder to scrape, because the content isn't readily visible in the HTML the server returns. To scrape it, we would need something like Selenium, which we won't get into here. Secondly, Twitter provides several APIs, which would probably be more useful in these cases.

Instead, here's a “Fake Twitter” site that's been set up for just this exercise.

On the above “Fake Twitter” site, we can see a selection of actual tweets by Jimmy Fallon between 2013 and 2017. If we follow the above link, we should see something like this:

Here, if we wanted to scrape all of the Tweets, there are several things associated with each Tweet that we could also scrape:

- The Tweet
- The Author (JimmyFallon)
- The Date and Time
- The Number of Likes
- The Number of Shares

The first question to ask before we start scraping is what we want to accomplish.
For example, if all we wanted to know was when most of the tweets occurred, the only data we'd actually need to scrape would be the date. Just for ease, however, we'll go ahead and scrape the entire Tweet. Let's open up the Developer Tools again in Chrome to see how this is structured, and whether any selectors would be useful in gathering this data:

Under the hood, it looks like each element here has its own class. The author is in a tag with the class named “author”. The Tweet is in a “p” tag with a class named “content”. Likes and Shares are in tags with classes named “likes” and “shares”. Finally, our Date/Time is in a tag with the class “dateTime”.

If we use the same format we used above to scrape this site, and print the results, we will probably see something that looks similar to this:

What we've done here is simply follow the steps outlined earlier. We started by importing bs4 and requests, then set URL, RESPONSE and CONTENT as variables, and printed the CONTENT variable. Now, the data we've printed here isn't very useful. We've simply printed the entire, raw HTML structure. What we would prefer is to get the scraped data into a usable format.

4. b. Selectors in Beautiful Soup

In order to get a tweet, we'll need to use the selectors that Beautiful Soup provides. Let's try this:

    soup = BeautifulSoup(content, "html.parser")  # content: the page HTML from the request step
    tweets = soup.find_all("p", attrs={"class": "content"})
    for tweet in tweets:
        print(tweet.text)

Instead of printing the entire content, we'll try to get the tweets. find_all returns a list of every matching tag, so we loop over it and print each tag's .text, which keeps only the text inside the tag. Let's take another look at our example HTML from earlier, and see how it relates to the above code snippet:

The previous code snippet uses the class attribute “content” as a selector. Basically, 'p', attrs={"class": "content"} is saying, “we want to select all of the paragraph (‘p’) tags, but only the ones which have the class named ‘content’.” If we stopped there and printed the results, we would get the entire tag: the ID, the class, and the content. The result would look like:
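Here is a self-contained sketch of the selector step, followed by storing the results as JSON. The markup is hypothetical. It reuses the class names described above (author, content, dateTime, likes, shares), but the wrapper div with class tweet, the other tag names, and all values are placeholders rather than the real Fake Twitter page.

```python
import json

from bs4 import BeautifulSoup

# Hypothetical markup: class names follow the text, everything else is a placeholder.
HTML = """
<div class="tweet">
  <h4 class="author">JimmyFallon</h4>
  <p class="content">First placeholder tweet</p>
  <h5 class="dateTime">2017-01-01 10:00</h5>
  <p class="likes">10</p>
  <p class="shares">2</p>
</div>
<div class="tweet">
  <h4 class="author">JimmyFallon</h4>
  <p class="content">Second placeholder tweet</p>
  <h5 class="dateTime">2017-01-02 11:30</h5>
  <p class="likes">25</p>
  <p class="shares">7</p>
</div>
"""

soup = BeautifulSoup(HTML, "html.parser")

# Select only the <p> tags whose class is "content", then print each one's text.
for tweet in soup.find_all("p", attrs={"class": "content"}):
    print(tweet.text)

# Gather every field of each tweet into a dict, selecting by class name only.
tweets = []
for block in soup.find_all("div", class_="tweet"):
    tweets.append({
        "author": block.find(class_="author").text,
        "content": block.find(class_="content").text,
        "dateTime": block.find(class_="dateTime").text,
        "likes": int(block.find(class_="likes").text),
        "shares": int(block.find(class_="shares").text),
    })

# Serialize to JSON; json.dump(tweets, file) would write it to disk for the parser script.
json_text = json.dumps(tweets, indent=2)
print(json_text)
```

Selecting by class alone (class_=...) avoids guessing tag names. On the real page, confirm the tags in Developer Tools first.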

How to Build a Web Scraper With Python [Step-by-Step Guide]

The guide will take you through understanding HTML web pages, building a web scraper using Python, and creating a DataFrame with pandas. It'll cover data quality, data cleaning, and data-type conversion, entirely step by step, with instructions, code, and explanations of how every piece of it works.

Angelica Dietzel | Self-Taught Programmer | Learning Data Science

On my self-taught programming journey, my interests lie within machine learning (ML) and artificial intelligence (AI), and the language I've chosen to master is Python. My skills in Python are basic, so if you're here without a lot of coding skills, I hope this guide helps you gain more knowledge.

A Perfect Beginner Project

To source data for ML, AI, or data science projects, you'll often rely on databases, APIs, or ready-made CSV datasets. But what if you can't find a dataset you want to use and analyze? That's where a web scraper comes in.

Working on projects is crucial to solidifying the knowledge you gain. When I began this project, I was a little overwhelmed because I truly didn't know a thing. Sticking with it, finding answers to my questions on Stack Overflow, and a lot of trial and error helped me really understand how programming works: how web pages work, how to use loops, and how to build functions and keep data clean. That makes building a web scraper the perfect beginner project for anyone starting out in Python.

What We'll Cover

This guide will take you through understanding HTML web pages, building a web scraper using Python, and creating a DataFrame with pandas. I hope you code along and enjoy!

Disclaimer

Websites can restrict or ban scraping data from their website. You can be subject to legal ramifications depending on where and how you attempt to scrape information. Websites usually describe this in their terms of use and in their robots.txt file, found at their site, which usually looks something like this:
So scrape responsibly, and respect the robots.txt.

What's Web Scraping?

Web scraping consists of gathering data available on websites. This can be done manually by a human or by using a bot. A bot is a program you build that helps you extract the data you need much more quickly than a human's hands and eyes can.

What Are We Going to Scrape?

It's essential to identify the goal of your scraping right from the start. We don't want to scrape any data we don't actually need.

For this project, we'll scrape data from IMDb's “Top 1,000” movies, specifically the top 50 movies on this page. Here is the information we'll gather from each movie listing:

- The title
- The year it was released
- How long the movie is
- IMDb's rating of the movie
- The Metascore of the movie
- How many votes the movie got
- The U.S. gross earnings of the movie

How Do Web Scrapers Work?

Web scrapers gather website data in the same way a human would: they go to a web page of the website, get the relevant data, and move on to the next web page, only much faster.

Every website has a different structure. These are a few important things to think about when building a web scraper:

- What's the structure of the web page that contains the data you're looking for?
- How do we get to those web pages?
- Will you need to gather more data from the next page?

The URL

To begin, let's look at the URL of the page we want to scrape.

This is what we see in the URL:

We notice a few things about the URL:

- ? acts as a separator: it indicates the end of the URL resource path and the start of the parameters.
- groups=top_1000 specifies what the page will be about.
- &ref_=adv_prv takes us to the next or the previous page. The reference is the page we're currently on. adv_nxt and adv_prv are two possible values, translating to "advance to next page" and "advance to previous page".

If you navigate back and forth through the pages, you'll notice only the parameters change. Keep this structure in mind, as it's helpful to know as we build the scraper.

HTML

HTML stands for hypertext markup language, and most web pages are written using it.
Essentially, HTML is the language a website's pages are written in when one computer sends them to another over the internet.

When you access a URL, your computer sends a request to the server that hosts the site. Any technology can be running on that server (JavaScript, Ruby, Java, etc.) to process your request. Eventually, the server returns a response to your browser; oftentimes, that response will be in the form of an HTML page for your browser to render.

HTML describes the structure of a web page semantically, and originally included cues for the appearance of the document.

Inspect HTML

Chrome, Firefox, and Safari users can examine the HTML structure of any page by right-clicking on the page and choosing the Inspect option. A panel will appear at the bottom or right-hand side of your page with a long list of all the HTML tags housing the information displayed in your browser window.

If you're in Safari (photo above), you'll want to press the button to the left of the search bar, which looks like a target. If you're in Chrome or Firefox, there's a small box with an arrow icon in it at the top left that you'll use to inspect.

Once it's clicked, move your cursor over any element of the page. The element gets highlighted, along with the HTML tags in the panel that it's associated with, as shown in the screenshot.

Knowing how to read the basic structure of a page's HTML is important so we can turn to Python to help us extract the HTML from the page.

Tools

The tools we're going to use are:

- Repl (optional) is a simple, interactive computer-programming environment used via your web browser. I recommend using this just for code-along purposes if you don't already have an IDE.
  If you use Repl, make sure you're using the Python environment.
- Requests will allow us to send HTTP requests to get HTML files.
- BeautifulSoup will help us parse the HTML files.
- pandas will help us assemble the data into a DataFrame to clean and analyze it.
- NumPy will add support for mathematical functions and tools for working with arrays.

Now, Let's Code

You can follow along below inside your Repl environment or IDE, or you can go directly to the entire code here. Have fun!

Import tools

First, we'll import the tools we'll need to build the scraper and get the data we want.

Movies in English

When we run our code to scrape some of these movies, it's very likely we'll get the movie names translated into the main language of the country the movie originated in. Use this code to make sure we get English-translated titles from all the movies we scrape:

Request contents of the URL

Get the contents of the page we're looking at by requesting the URL:

Breaking URL requests down:

- url is the variable we create and assign the URL to.
- results is the variable we create to store our requests.get action.
- requests.get(url, headers=headers) is the method we use to grab the contents of the URL. The headers part tells our scraper to bring us English, based on our previous line of code.

Using BeautifulSoup

Make the content we grabbed easy to read by using BeautifulSoup:

Breaking BeautifulSoup down:

- soup is the variable we assign the result of BeautifulSoup to. Passing the HTML parser lets Python read the page's components rather than treating the page as one long string.
- print(soup.prettify()) will print what we've grabbed in a more structured tree format, making it easier to read.

The results of the print will look more ordered, like this:

Initialize your storage

When we write code to extract our data, we need somewhere to store that data. Create variables for each type of data you'll extract, and assign an empty list to each, indicated by square brackets [].
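The setup steps above (imports, the English-language header, the request, BeautifulSoup, and the empty lists) might be sketched like this. The Accept-Language value and the URL are assumptions for illustration, not copied from the original code, and get_soup is a hypothetical helper name. The request only runs when you call get_soup, so the code can be read and loaded without network access.

```python
import requests
from bs4 import BeautifulSoup

# Assumed header value asking the server for English titles.
headers = {"Accept-Language": "en-US, en;q=0.5"}

# Assumed URL for the IMDb "Top 1,000" listing; check it in your browser first.
url = "https://www.imdb.com/search/title/?groups=top_1000&ref_=adv_prv"


def get_soup(page_url):
    """Hypothetical helper: request page_url and return it parsed by BeautifulSoup."""
    results = requests.get(page_url, headers=headers, timeout=10)
    results.raise_for_status()  # stop early on a 4xx/5xx status code
    return BeautifulSoup(results.text, "html.parser")


# Empty lists, one per piece of movie data we plan to extract.
titles = []
years = []
time = []
imdb_ratings = []
metascores = []
votes = []
us_grosses = []
```

With network access, print(get_soup(url).prettify()) prints the parsed page as an indented tree. Naming a list time shadows Python's time module if you import it later in the same file; a name like runtimes avoids that.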
Remember the list of information we wanted to grab from each movie from earlier:

Your code should now look something like this. Note that we can delete our print function until we need to use it.

Find the right div container

It's time to check out the HTML code in our web page.

Go to the web page we're scraping, inspect it, and hover over a single movie in its entirety, like below:

We need to figure out what distinguishes each of these from other div containers we see.

You'll notice the list of div elements to the right with a class attribute that has two values: lister-item and mode-advanced.

If you click on each of those, you'll notice it highlights each movie container on the left of the page.

If we do a quick search within Inspect (press Ctrl+F and type lister-item mode-advanced), we'll see 50 matches representing the 50 movies displayed on a single page. We now know all the information we seek lies within this specific div tag.

Find all lister-item mode-advanced divs

Our next move is to tell our scraper to find all of these lister-item mode-advanced divs:

Breaking find_all down:

- movie_div is the variable we'll use to store all of the div containers with a class of lister-item mode-advanced.
- The find_all() method extracts all the div containers that have a class attribute of lister-item mode-advanced from what we have stored in our variable soup.

Get ready to extract each item

If we look at the first movie on our list, we're missing gross earnings!
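Before going further, here is the find_all step from above tried against a small, hypothetical piece of markup (the movie names are placeholders), so it runs without requesting IMDb. Passing class_ a string that contains a space matches the class attribute's exact value, so the class order must match the page. The CSS selector via soup.select is an order-independent alternative.

```python
from bs4 import BeautifulSoup

# Hypothetical, heavily trimmed listing: two movie containers and one unrelated div.
html = """
<div class="lister-list">
  <div class="lister-item mode-advanced"><h3><a>Example Movie A</a></h3></div>
  <div class="lister-item mode-advanced"><h3><a>Example Movie B</a></h3></div>
  <div class="footer">Not a movie</div>
</div>
"""

soup = BeautifulSoup(html, "html.parser")

# Exact match on the full class string "lister-item mode-advanced".
movie_div = soup.find_all("div", class_="lister-item mode-advanced")

# CSS selector: any <div> that has both classes, in any order.
movie_div_css = soup.select("div.lister-item.mode-advanced")

print(len(movie_div), len(movie_div_css))  # 2 2
```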
If you look at the second movie, they've included it.

Something to always consider when building a web scraper is that not all the information you seek will be available for you to gather.

In these cases, we need to make sure our web scraper doesn't stop working or break when it reaches missing data. We build around the idea that we just don't know whether or not that'll happen.

Getting into each lister-item mode-advanced div

When we grab each of the items we need in a single lister-item mode-advanced div container, we need the scraper to loop to the next lister-item mode-advanced div container and grab those movie items too. And then it needs to loop to the next one, and so on: 50 times for each page. For this to execute, we'll need to wrap our scraper in a for loop.

Breaking down the for loop:

- A for loop is used for iterating over a sequence. Our sequence is every lister-item mode-advanced div container that we stored in movie_div.
- container is the name of the variable that enters each div. You can name this whatever you want (x, loop, banana, cheese), and it won't change the function of the loop.

It can be read like this:

Extract the title of the movie

Beginning with the movie's name, let's locate its corresponding HTML line by using Inspect and clicking on the title.

We see the name is contained within an anchor tag, “a”. This tag is nested within a header tag, “h3”. The “h3” tag is nested within a “div” tag. This “div” is the third of the divs nested in the container of the first movie.

Breaking titles down:

- name is the variable we'll use to store the title data we find.
- container is what we used in our for loop; it's used for iterating over each movie container.
- .h3 and .a are attribute notation and tell the scraper to access each of those tags.
- .text tells the scraper to grab the text nested in the “a” tag.
- titles.append(name) tells the scraper to take what we found and stored in name and to add it into our empty list called titles, which we created in the beginning.

Extract year of release

Let's locate the movie's year and its corresponding HTML line by using Inspect and clicking on the year.

We see this data is stored within the “span” tag below the “a” tag that contains the title of the movie.

Dot notation always returns the first matching tag. It worked for the title data (.h3.a) because the “a” tag we want is the first one inside the “h3” tag. The year is in the second “span” tag, so dot notation would return the wrong one, and we have to use a different method.

Instead, we can tell our scraper to search by the distinctive mark of the second “span”. We'll use the find() method, which is similar to find_all() except it only returns the first match.

Breaking years down:

- year is the variable we'll use to store the year data we find.
- container is what we used in our for loop; it's used for iterating over each movie container.
- .h3 is attribute notation, which tells the scraper to access that tag.
- .find() is a method we'll use to access this particular “span” tag.
- ('span', class_='lister-item-year') is the distinctive “span” tag we want.
- years.append(year) tells the scraper to take what we found and stored in year and to add it into our empty list called years (which we created in the beginning).

Extract length of movie

Locate the movie's length and its corresponding HTML line by using Inspect and clicking on the runtime.

The data we need can be found in a “span” tag with a class of runtime.
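The title and year steps above can be tried on a single hypothetical container shaped like the structure described (trimmed markup, placeholder values). In this sample, the first “span” inside the “h3” holds the list index, which shows why dot notation would grab the wrong tag for the year and find() is needed.

```python
from bs4 import BeautifulSoup

# Hypothetical container: an index <span>, the title link, then the year <span>.
html = """
<div class="lister-item mode-advanced">
  <div class="lister-item-content">
    <h3 class="lister-item-header">
      <span class="lister-item-index">1.</span>
      <a href="/title/example/">Example Movie A</a>
      <span class="lister-item-year">(2019)</span>
    </h3>
  </div>
</div>
"""

soup = BeautifulSoup(html, "html.parser")
titles = []
years = []

for container in soup.find_all("div", class_="lister-item mode-advanced"):
    # Dot notation: the first <h3>, then the first <a> inside it.
    name = container.h3.a.text
    titles.append(name)

    # Dot notation stops at the first <span> (the index), so search by class.
    first_span = container.h3.span.text
    year = container.h3.find("span", class_="lister-item-year").text
    years.append(year)

print(first_span)  # 1.
print(titles)      # ['Example Movie A']
print(years)       # ['(2019)']
```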
Like we did with year, we can do something similar:

Breaking time down:

- runtime is the variable we'll use to store the time data we find.
- container is what we used in our for loop; it's used for iterating over each movie container.
- .find() is a method we'll use to access this particular “span” tag.
- ('span', class_='runtime') is the distinctive “span” tag we want.
- if container.find('span', class_='runtime') else '-' says if there's data there, grab it, but if the data is missing, then put a dash there.
- .text tells the scraper to grab the text in the “span” tag.
- time.append(runtime) tells the scraper to take what we found and stored in runtime and to add it into our empty list called time (which we created in the beginning).

Extract IMDb ratings

Find the movie's IMDb rating and its corresponding HTML line by using Inspect and clicking on the IMDb rating.

We'll focus on extracting the IMDb rating. The data we need can be found in a tag of its own. Since I don't see any other tags of that type, we can use attribute notation (dot notation) to grab this data.

Breaking IMDb ratings down:

- imdb is the variable we'll use to store the IMDb ratings data it finds.
- container is what we used in our for loop; it's used for iterating over each movie container.
- The tag name, written in attribute notation, tells the scraper to access that tag.
- .text tells the scraper to grab that text.
- The float() method turns the text we find into a float, which is a decimal number.
- imdb_ratings.append(imdb) tells the scraper to take what we found and stored in imdb and to add it into our empty list called imdb_ratings (which we created in the beginning).

Extract Metascore

Find the movie's Metascore rating and its corresponding HTML line by using Inspect and clicking on the Metascore number.

The Metascore data we need can be found in a “span” tag that has a class that says metascore favorable.

Before we settle on that, notice that a 96 for “Parasite” is, of course, a favorable rating. But are the others favorable? If you highlight the next movie's Metascore, you'll see “JoJo Rabbit” has a class that says metascore mixed.
Since these tags are different, it'd be safe to tell the scraper to use just the class metascore when scraping. BeautifulSoup matches a tag if any one of its classes equals the class we search for, so metascore catches both metascore favorable and metascore mixed:

Breaking Metascores down:

- m_score is the variable we'll use to store the Metascore-rating data it finds.
- container is what we used in our for loop; it's used for iterating over each movie container.
- .find() is a method we'll use to access this particular “span” tag.
- ('span', class_='metascore') is the distinctive “span” tag we want.
- .text tells the scraper to grab that text.
- if container.find('span', class_='metascore') else '-' says if there is data there, grab it, but if the data is missing, then put a dash there.
- The int() method turns the text we find into an integer.
- metascores.append(m_score) tells the scraper to take what we found and stored in m_score and to add it into our empty list called metascores (which we created in the beginning).

Extract votes and gross earnings

We're finally onto the final two items we need to extract, but we saved the toughest for last.

Here's where things get a little tricky. As mentioned earlier, when we look at the first movie on this list, we don't see a gross-earnings number. When we look at the second movie on the list, we can see it.

Let's just have a look at the second movie's HTML code and go from there.

Both the votes and the gross are highlighted on the right. After looking at the votes and gross containers for movie #2, what do you notice?

As you can see, both of these are in a “span” tag that has a name attribute equal to nv and a data-value attribute that holds the distinctive number we need for each.

How can we grab the data for the second one if the search parameters for the first one are the same? How do we tell our scraper to skip over the first one and scrape the second?

This one took a lot of brain flexing, tons of coffee, and a couple of late nights to figure out.
Here's how I did it:

Breaking votes and gross down:
- `nv` is an entirely new variable we'll use to hold both the votes and the gross tags.
- `container` is what we used in our for loop for iterating over each movie.
- `find_all()` is the method we'll use to grab both of the tags.
- `('span', attrs={'name': 'nv'})` matches only the `<span>` tags whose `name` attribute is `nv`.
- `vote` is the variable we'll use to store the votes we find in the `nv` tag.
- `nv[0]` tells the scraper to go into the `nv` list and grab the first item, which is the votes, because votes come first in our HTML code (Python lists are zero-indexed: counting starts at 0, not 1).
- `.text` tells the scraper to grab that text.
- `votes.append(vote)` tells the scraper to take what we found and stored in `vote` and add it to our empty list called `votes` (which we created in the beginning).
- `grosses` is the variable we'll use to store the gross we find in the `nv` tag.
- `nv[1]` tells the scraper to go into the `nv` list and grab the second item, which is the gross, because gross comes second in our HTML code.
- `nv[1].text if len(nv) > 1 else '-'` says: if the length of `nv` is greater than one, grab the second item that's stored.
But if the length of `nv` isn't greater than one (meaning the gross is missing), put a dash there.
- `us_grosses.append(grosses)` tells the scraper to take what we found and stored in `grosses` and add it to our empty list called `us_grosses` (which we created in the beginning).

Your code should now look like this:

Let's See What We Have So Far
Now that we've told our scraper what elements to scrape, let's use the print function to print out each list we've sent our scraped data to. Our lists look like this:

['Parasite', 'Jojo Rabbit', '1917', 'Knives Out', 'Uncut Gems', 'Once Upon a Time… in Hollywood', 'Joker', 'The Gentlemen', 'Ford v Ferrari', 'Little Women', 'The Irishman', 'The Lighthouse', 'Toy Story 4', 'Marriage Story', 'Avengers: Endgame', 'The Godfather', 'Blade Runner 2049', 'The Shawshank Redemption', 'The Dark Knight', 'Inglourious Basterds', 'Call Me by Your Name', 'The Two Popes', 'Pulp Fiction', 'Inception', 'Interstellar', 'Green Book', 'Blade Runner', 'The Wolf of Wall Street', 'Gone Girl', 'The Shining', 'The Matrix', 'Titanic', 'The Silence of the Lambs', 'Three Billboards Outside Ebbing, Missouri', "Harry Potter and the Sorcerer's Stone", 'The Peanut Butter Falcon', 'The Handmaiden', 'Memories of Murder', 'The Lord of the Rings: The Fellowship of the Ring', 'Gladiator', 'The Martian', 'Bohemian Rhapsody', 'Watchmen', 'Forrest Gump', 'Thor: Ragnarok', 'Casino Royale', 'The Breakfast Club', 'The Godfather: Part II', 'Django Unchained', 'Baby Driver']

['(2019)', '(2019)', '(2019)', '(2019)', '(2019)', '(2019)', '(2019)', '(2019)', '(2019)', '(2019)', '(2019)', '(I) (2019)', '(2019)', '(2019)', '(2019)', '(1972)', '(2017)', '(1994)', '(2008)', '(2009)', '(2017)', '(2019)', '(1994)', '(2010)', '(2014)', '(2018)', '(1982)', '(2013)', '(2014)', '(1980)', '(1999)', '(1997)', '(1991)', '(2017)', '(2001)', '(2019)', '(2016)', '(2003)', '(2001)', '(2000)', '(2015)', '(2018)', '(2009)', '(1994)', '(2017)', '(2006)', '(1985)', '(1974)', '(2012)', '(2017)']

['132 min', '108 min', '119 min', '131 min', '135 min', '161 min', '122 min', '113 min', '152 min', '135 min', '209 min', '109 min', '100 min', '137 min', '181 min', '175 min', '164 min', '142 min', '152 min', '153 min', '132 min', '125 min', '154 min', '148 min', '169 min', '130 min', '117min', '180 min', '149 min', '146 min', '136 min', '194 min', '118 min', '115 min', '152 min', '97 min', '145 min', '132 min', '178 min', '155 min', '144 min', '134 min', '162 min', '142 min', '130 min', '144 min', '97 min', '202 min', '165 min', '113 min']

[8.6, 8.0, 8.5, 8.0, 7.6, 7.7, 8.1, 8.2, 8.7, 7.8, 8.5, 9.0, 9.3, 9.3, 7.9, 7.9, 8.4, 8.2, 7.8, 7.9, 9.4, 7.6]

['96 ', '58 ', '78 ', '82 ', '90 ', '83 ', '59 ', '51 ', '81 ', '91 ', '94 ', '83 ', '84 ', '93 ', '78 ', '100 ', '81 ', '80 ', '84 ', '69 ', '93 ', '75 ', '94 ', '74 ', '74 ', '69 ', '84 ', '75 ', '79 ', '66 ', '73 ', '75 ', '85 ', '88 ', '64 ', '70 ', '84 ', '82 ', '92 ', '67 ', '80 ', '49 ', '56 ', '82 ', '74 ', '80 ', '62 ', '90 ', '81 ', '86 ']

['282,699', '142,517', '199,638', '195,728', '108,330', '396,071', '695,224', '42,015', '152,661', '65,234', '249,950', '77,453', '160,180', '179,887', '673,115', '1,511,929', '414,992', '2,194,397', '2,176,865', '1,184,882', '178,688', '76,291', '1,724,518', '1,925,684', '1,378,968', '293,695', '656,442', '1,092,063', '799,696', '835,496', '1,580,250', '994,453', '1,191,182', '383,958', '595,613', '34,091', '92,492', '115,125', '1,572,354', '1,267,310', '715,623', '410,199', '479,811', '1,693,344', '535,065', '555,756', '330,308', '1,059,089', '1,271,569', '398,553']

['-', '$0.35M', '-', '-', '-', '$135.37M', '$192.73M', '-', '-', '-', '-', '$0.43M', '$433.03M', '-', '$858.37M', '$134.97M', '$92.05M', '$28.34M', '$534.86M', '$120.54M', '$18.10M', '-', '$107.93M', '$292.58M', '$188.02M', '$85.08M', '$32.87M', '$116.90M', '$167.77M', '$44.02M', '$171.48M', '$659.33M', '$130.74M', '$54.51M', '$317.58M', '$13.12M', '$2.01M', '$0.01M', '$315.54M', '$187.71M', '$228.43M', '$216.43M', '$107.51M', '$330.25M', '$315.06M', '$167.45M', '$45.88M', '$57.30M', '$162.81M', '$107.83M']
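To see how the individual extraction steps fit into one loop, here is a minimal sketch run against a hypothetical two-movie snippet in which only the second movie has a gross, matching the first two entries of the lists above. The container class `movie`, the `year` class, and the tag layout are assumptions for illustration, not IMDb's real markup. Note that `attrs={'name': 'nv'}` is needed because `name` is already the first parameter of `find_all()` (the tag name).

```python
from bs4 import BeautifulSoup

# Hypothetical markup: the first movie has votes only, the second has
# votes and gross, so both fields sit in identical <span name="nv"> tags.
html = """
<div class="movie">
  <h3><a href="#">Parasite</a> <span class="year">(2019)</span></h3>
  <p><span name="nv">282,699</span></p>
</div>
<div class="movie">
  <h3><a href="#">Jojo Rabbit</a> <span class="year">(2019)</span></h3>
  <p><span name="nv">142,517</span> <span name="nv">$0.35M</span></p>
</div>
"""

soup = BeautifulSoup(html, "html.parser")
titles, years, votes, us_grosses = [], [], [], []

for container in soup.find_all("div", class_="movie"):
    titles.append(container.h3.a.text)
    years.append(container.h3.find("span", class_="year").text)

    # find() would always return the first match (the votes), so collect
    # every <span name="nv"> in document order instead.
    nv = container.find_all("span", attrs={"name": "nv"})
    votes.append(nv[0].text)  # votes always come first
    # The gross is the second match when present; otherwise use a dash.
    us_grosses.append(nv[1].text if len(nv) > 1 else "-")

print(titles)      # ['Parasite', 'Jojo Rabbit']
print(years)       # ['(2019)', '(2019)']
print(votes)       # ['282,699', '142,517']
print(us_grosses)  # ['-', '$0.35M']
```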

So far so good, but we aren't quite there yet. We need to clean up our data a bit. Looks like we have some unwanted elements in our data: dollar signs, Ms, mins, commas, parentheses, and extra white space in the Metascores.

Building a DataFrame With pandas
The next order of business is to build a DataFrame with pandas to store the data we have nicely in a table, so we can really understand what's going on. Here's how we do it:

Breaking our DataFrame down:
- `movies` is what we'll name our DataFrame.
- `pd.DataFrame` is how we initialize the creation of a DataFrame with pandas.
- The keys on the left are the column names.
- The values on the right are our lists of data we've scraped.

View Our DataFrame
We can see how it all looks by simply using the print function on our DataFrame, which we called `movies`, at the bottom of our program.

Data Quality
Before embarking on projects like this, you must know what your data-quality criteria are, meaning what rules or constraints your data should follow. Here are some examples:
- Data-type constraints: values in your columns must be a particular data type: numeric, boolean, date, etc.
- Mandatory constraints: certain columns can't be empty.
- Regular expression patterns: text fields have to be in a certain pattern, like phone numbers.

What Is Data Cleaning?
Data cleaning is the process of detecting and correcting or removing corrupt or inaccurate records from your dataset. When doing data analysis, it's also important to make sure we're using the correct data types.

Checking Data Types
We can check what our data types look like by printing `movies.dtypes` at the bottom of our program. Let's analyze the results: our movie data type is an object, which here means it holds strings; that's correct, considering they're titles of movies.
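Here is a minimal sketch of building the DataFrame, checking its dtypes, and applying the cleaning conversions described in the following paragraphs, run on the first three rows copied from the printed lists above. The column names `year`, `timeMin`, `metascore`, `votes`, and `us_grossMillions` come from the text; the `movie` and `imdb` column names and all list names are assumptions. Two small liberties: the Metascores are stripped of their trailing space before conversion, and the regex is written as a raw string.

```python
import pandas as pd

# First three rows from the printed lists above (list names are assumed).
titles = ["Parasite", "Jojo Rabbit", "1917"]
years = ["(2019)", "(2019)", "(2019)"]
time = ["132 min", "108 min", "119 min"]
imdb_ratings = [8.6, 8.0, 8.5]
metascores = ["96 ", "58 ", "78 "]
votes = ["282,699", "142,517", "199,638"]
us_grosses = ["-", "$0.35M", "-"]

movies = pd.DataFrame({
    "movie": titles,
    "year": years,
    "timeMin": time,
    "imdb": imdb_ratings,
    "metascore": metascores,
    "votes": votes,
    "us_grossMillions": us_grosses,
})
print(movies.dtypes)  # only imdb is numeric; the scraped text columns are not

# Year and runtime: keep the first run of digits, then convert to int.
# ("(I) (2019)" would also become 2019, because "I" is not a digit.)
movies["year"] = movies["year"].str.extract(r"(\d+)", expand=False).astype(int)
movies["timeMin"] = movies["timeMin"].str.extract(r"(\d+)", expand=False).astype(int)

# Metascore: trim the trailing space, then convert.
movies["metascore"] = movies["metascore"].str.strip().astype(int)

# Votes: drop the thousands separators, then convert.
movies["votes"] = movies["votes"].str.replace(",", "", regex=False).astype(int)

# Gross: strip "$" on the left and "M" on the right; dashes become NaN.
movies["us_grossMillions"] = movies["us_grossMillions"].map(lambda x: x.lstrip("$").rstrip("M"))
movies["us_grossMillions"] = pd.to_numeric(movies["us_grossMillions"], errors="coerce")

print(movies)
print(movies.dtypes)
```

Using `pd.to_numeric(..., errors="coerce")` for the gross column is what lets the dashes survive as missing values instead of raising an error, as `.astype(float)` would.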
Our IMDb score is also correct because we have floating-point numbers (decimal numbers) in this column. But our year, timeMin, metascore, and votes columns show they're objects when they should be integer data types, and our us_grossMillions column is an object instead of a float data type. How did this happen?

Initially, when we were telling our scraper to grab these values from each HTML container, we were telling it to grab specific values from a string. A string represents text rather than numbers; it's made up of a set of characters that can also contain numbers.

For example, the word "cheese" and the phrase "I ate 10 blocks of cheese" are both strings. If we were to get rid of everything except the 10 from the "I ate 10 blocks of cheese" string, it's still a string, but now it's one that only says "10".

Data Cleaning With pandas
Now that we have a clear idea of what our data looks like right now, it's time to start cleaning it up. This can be a tedious task, but it's a very important one.

Cleaning year data
To remove the parentheses from our year data and to convert the object into an integer data type, we'll do this:

Breaking cleaning year data down:
- `movies['year']` tells pandas to go to the column year in our DataFrame.
- `.str.extract(r'(\d+)')` extracts the first run of consecutive digits in each string.
- The `.astype(int)` method converts the result to an integer.

Now, if we add `print(movies['year'])` to the bottom of our program to see what our year data looks like, you should see your list of years without any parentheses, and the data type showing is now an integer. Our year data is officially cleaned.

Cleaning time data
We'll clean our time data exactly the way we cleaned our year data above: by grabbing only the digits and converting the data type to an integer.

Cleaning Metascore data
The only cleaning we need to do here is converting our object data type into an integer:

Cleaning votes
With votes, we need to remove the commas and convert the column into an integer data type:

Breaking cleaning votes down:
- `movies['votes']` is our votes data in our movies DataFrame.
We're assigning our new cleaned-up data to our votes column.
- `.str.replace(',', '')` grabs the string and uses the replace method to replace the commas with an empty string (nothing).
- The `.astype(int)` method converts the result into an integer.

Cleaning gross data
The gross data involves a few hurdles to jump. What we need to do is remove the dollar sign and the Ms from the data and convert it into a floating-point number. Here's how to do it:

Breaking cleaning gross down:

Top cleaning code:
- `movies['us_grossMillions']` is our gross data in our movies DataFrame. We'll be assigning our new cleaned-up data to our us_grossMillions column.
- `movies['us_grossMillions']` tells pandas to go to the column us_grossMillions in our DataFrame.
- The `.map()` function calls the specified function on each value in the column.
- `lambda x:` defines an anonymous function in Python (one without a name), where `x` is each value it receives. Normal functions are defined using the `def` keyword.
- `.lstrip('$').rstrip('M')` is the body of our function. It strips the $ from the left side and the M from the right side.

Bottom conversion code:
- `movies['us_grossMillions']` is now stripped of the elements we don't need, and we'll assign the conversion code's result to it to finish it up.
- `pd.to_numeric` is a method we can use to change this column to a float. We use it because we have a lot of dashes in this column, and we can't just convert it to a float using `.astype(float)`: that would raise an error.
- `errors='coerce'` transforms the nonnumeric values, our dashes, into NaN (not-a-number) values, because we have dashes in place of the data that's missing.

Review the Cleaned and Converted Code
Let's see how we did. Run the print function to see our data and the data types we have. Looks good!

Final Finished Code
Here's the final code of your single page web scraper:

Saving Your Data to a CSV
What's the use of our scraped data if we can't save it for any future projects or analysis?
Below is the code you can add to the bottom of your program to save your data to a CSV file.

Breaking the CSV file down:
- `movies.to_csv()` writes our DataFrame to the CSV file whose name you pass in as a string.

The file name is up to you, as long as it has the .csv extension; just make sure the name in your code matches the one you want. You don't need to create the file first: `to_csv()` creates it if it doesn't exist (and overwrites it if it does). If you're in Repl and would like the file to appear among your files beforehand, you can create an empty CSV file by hovering near Files and clicking the "Add file" option, naming it, and saving it with a .csv extension. Then, add the code to the end of your program.

All your data should populate over into your CSV, which you can then download onto your computer and open.

Conclusion
We've come a long way from requesting the HTML content of our web page to cleaning our entire DataFrame. You should now know how to scrape web pages with the same HTML and URL structure I've shown you above.

Next steps
I hope you had fun making this! If you'd like to build on what you've learned, here are a few ideas to try out:
- Grab the movie data for all 1,000 movies on that list.
- Scrape other data about each movie, e.g., genre, director, starring, or the summary of the movie.
- Find a different website to scrape that interests you.

In my next piece, I'll explain how to loop through all of the pages of this IMDb list to grab all of the 1,000 movies, which will involve a few alterations to the final code we have here.

Happy coding!

Previously published at Hacker Noon

The Ultimate Tutorial On How To Do Web Scraping – Hacker …

Aurken Bilbao, Founder. Entrepreneur with a deep technical background and 15+ years in startups and security.

Web Scraping is the process of automatically collecting web data with specialized software.

Every day trillions of GBs are created, making it impossible to keep track of every new data point. Simultaneously, more and more companies worldwide rely on various data sources to nurture their knowledge and gain a competitive advantage. It's not possible to keep up with the pace by hand. That's where Web Scraping comes into play.

What Is Web Scraping Used For?
As communication between systems is becoming critical, APIs are increasing in popularity. An API is a gate a website exposes to communicate with other systems. APIs open up functionality to the public. Unfortunately, many services don't provide an API. Others only allow limited access.

Web Scraping overcomes this limitation. It collects information all around the internet without the restrictions of an API. Therefore, web scraping is used in varied scenarios:
- Price Monitoring
  - E-commerce: tracking competitors' prices.
  - Financial services: detecting price changes, volume activity, anomalies, etc.
- Lead Generation
  - Extracting contact information: names, email addresses, phones, or job titles.
  - Identifying new opportunities, e.g., in Yelp, YellowPages, Crunchbase, etc.
- Market Research
  - Real Estate: supply/demand analysis, market opportunities, trending areas, prices.
  - Automotive/Cars: dealer distribution, most popular models, best deals, supply.
  - Accommodation: available rooms, hottest areas, best discounts, prices.
  - Job Postings: most demanded jobs, industries on the rise, biggest employers, supply by sector, etc.
  - Social Media: brand presence and growing-influencer tracking, new acquisition channels, audience targeting, etc.
  - City Discovery: tracking new restaurants, commercial streets, shops, trending areas, etc.
- Aggregation: news from many sources; comparing prices between, e.g., insurance services or travel; organizing all information in one place.
- Inventory and Product Tracking: collecting product details.
- SEO (Search Engine Optimization): keywords' relevance and performance, competition tracking, brand relevance, new players.
- AI and Data Science: collecting massive amounts of data to train machine learning models for image recognition, predictive modeling, etc.
- Downloads: PDFs or massive image extraction.

Web Scraping Process
Web Scraping mainly follows the standard HTTP client-server model. The browser (client) connects to a website (server) and requests the content. The server then returns HTML content, a markup language both sides understand. The browser is responsible for rendering HTML into a graphical interface.

That's it. Easy, isn't it? There are more content types, but let's focus on this one for now. Let's dig deeper into how the underlying communication works; it'll come in handy later.

Request – made by the browser
A request is a text the browser sends to the website. It consists of four elements:
- URL: the specific address on the web.
- Method: there are two main types: GET to retrieve data, and POST to submit data (usually forms).
- Headers: User-Agent, Cookies, Browser Language, all go here. Websites strongly focus on this data to determine whether a request comes from a human or a bot.
- Body: commonly user-generated input.
Used when submitting forms.

Response – returned by the server
When a website responds to a browser, it returns three elements:
- Status Code: a number indicating the status of the request. 200 means everything went OK. The infamous 404 means URL not found. 500 is an internal server error.
- Content: HTML, responsible for rendering the website. Auxiliary content types include CSS styles (appearance), images, XML, JSON, or PDF. They improve the user experience.
- Headers: just like Request Headers, these play a crucial role in communication. Among other things, they instruct the browser to "Set-Cookie". We will get back to that later.

Up to this point, this reflects an ordinary client-server process. Web Scraping, though, adds a new concept: data extraction.

Data Extraction – Parsing
HTML is just a long text. Once we have the HTML, we want to obtain specific data and structure it to make it usable. Parsing is the process of extracting selected data and organizing it into a well-defined structure.

Technically, HTML is a tree structure. Upper elements (nodes) are parents, and the lower ones are children. Two popular technologies facilitate walking the tree to extract the most relevant pieces:
- CSS Selectors: broadly used to modify the look of websites. Powerful and easy to use.
- XPath: more powerful but harder to use.

The extraction process begins by analyzing a website. Some elements are valuable at first sight. For example, Title, Price, or Description are all easily visible on the screen. Other information, though, is only visible in the HTML code:
- Hidden inputs: they commonly contain information such as internal IDs that are pretty valuable, for example on Amazon product pages.
- XHR: websites execute requests in the background to enhance the user experience. These regularly carry rich content already structured as JSON, for example asynchronous requests on Instagram.
- JSON inside HTML: JSON is a commonly used data-interchange format.
Many times it's embedded in the HTML code to serve other services, like Analytics; Alibaba pages are one example.
- HTML attributes: add semantic meaning to elements, as seen on Craigslist.

Once data is structured, databases store it for later use. At this stage, we can export it to other formats such as Excel or PDF, or transform it to make it available to other systems.

Web Scraping Challenges
Such a valuable process does not come free of obstacles. Websites actively avoid being tracked/scraped. It's common for them to build protective solutions. High-traffic websites put advanced, industry-level anti-scraping solutions into place. This protection makes the task extremely difficult.

These are some of the challenges web scrapers face when dealing with relevant websites (low-traffic websites are usually low value and thus have weak anti-scraping systems):

IP Rate Limit
All devices connected to the internet have an identification address, called an IP. It's like an ID card. Websites use this identifier to measure the number of requests from a device and try to block it. Imagine an IP requesting 120 pages per minute: that's two requests per second. Real users cannot browse at such a pace. So to scrape at scale, we need to bring in a new concept: proxies.

Rotating Proxies
A proxy, or proxy server, is a computer on the internet with an IP address. It acts as an intermediary between the requester and the website. It allows hiding the original request IP behind a proxy IP, tricking the website into thinking the request comes from another place. Proxies are typically used in vast pools of IPs, switching between them depending on various factors. Skilled scrapers tune this process and select proxies depending on the domain, geolocation, etc.

Headers / Cookies validation
Remember Request/Response Headers? A mismatch between the expected and resulting values tells the website something is wrong. The more headers shared between browser and server, the harder it gets for automated software to communicate smoothly without being detected.
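To make the request anatomy and the "Set-Cookie" round trip concrete, here is a minimal, stdlib-only sketch using Python's `urllib.request` and `http.cookiejar`. Nothing is sent over the network: the URLs and header values are placeholders, and the server's reply is simulated with a small hypothetical `FakeResponse` class, since `CookieJar.extract_cookies()` only needs an object whose `info()` method returns the response headers.

```python
import email.message
import http.cookiejar
import urllib.request

# 1. A GET request carries a URL, a method, and headers (no body).
#    The URL and header values are placeholders.
first = urllib.request.Request(
    "https://example.com/page",
    headers={
        "User-Agent": "example-scraper/0.1",  # hypothetical client name
        "Accept-Language": "en-US,en;q=0.9",
    },
    method="GET",
)


class FakeResponse:
    """Hypothetical stand-in for a server reply; CookieJar only calls info()."""

    def __init__(self, headers):
        self._headers = headers

    def info(self):
        return self._headers


# 2. Simulate the server answering with a Set-Cookie header.
reply_headers = email.message.Message()
reply_headers["Set-Cookie"] = "session=abc123; Path=/"

jar = http.cookiejar.CookieJar()
jar.extract_cookies(FakeResponse(reply_headers), first)

# 3. The next request sends the cookie back, as a browser would.
second = urllib.request.Request("https://example.com/next", method="GET")
jar.add_cookie_header(second)

print(first.get_method())              # GET
print(first.get_header("User-agent"))  # example-scraper/0.1
print(second.get_header("Cookie"))     # session=abc123
```

`Request` normalizes header names with `str.capitalize()`, which is why the lookup uses `"User-agent"`. In a real client you would usually let `urllib.request.build_opener(urllib.request.HTTPCookieProcessor(jar))` do this bookkeeping on every request instead of calling `extract_cookies()` and `add_cookie_header()` by hand.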
It gets increasingly challenging when websites return the "Set-Cookie" header, which expects the browser to send it back in the following requests.

Ideally, you'd want to make requests with as few headers as possible. Unfortunately, sometimes that's not possible, leading to another challenge:

Reverse Engineering Headers / Cookies generation
Advanced websites don't respond if Headers and Cookies are not in place, forcing us to reverse-engineer them. Reverse engineering is the process of understanding how something is built in order to simulate it. It requires tweaking IPs, User-Agent (browser identification), Cookies, etc.

Javascript Execution
Most websites these days rely heavily on Javascript. Javascript is a programming language executed in the browser. It adds extra difficulty to data collection, as a lot of tools don't support Javascript. Websites do complex calculations in Javascript to ensure a browser is really a browser. That leads us to:

Headless Browsers
A headless browser is a web browser without a graphical user interface, controlled by software. It requires a lot of RAM and CPU, making the process much more expensive. Selenium and Puppeteer (created by Google) are two of the most used tools for the task. You guessed it: Google is the largest web scraper in the world.

Captcha / reCAPTCHA (Developed by Google)
Captcha is a challenge test to determine whether or not the user is human. It used to be an effective way to avoid bots. Some companies like Anti-Captcha and 2Captcha offer solutions to bypass Captchas. They offer OCR (Optical Character Recognition) services and even human labor to solve them.

Pattern Recognition
When collecting data, you may feel tempted to go the easy way and follow a regular pattern. That's a huge red flag for websites. Arbitrary requests are not reliable either. How is someone supposed to land on page 8? They should certainly have been on page 7 before. Otherwise, it indicates that something's weird. Nailing the right path is essential.

Conclusion
Hopefully, this gives you an overview of how data automation looks.
We could talk about it forever, but we will go deeper into the details in the coming posts.

Data collection at scale is full of secrets. Keeping up the pace is arduous and expensive. It's hard, very hard. A preferred solution is to use batteries-included services like ZenRows that turn websites into data. We offer a hassle-free API that takes care of all the work, so you only need to care about the data. We urge you to try it for FREE. We are delighted to help and can even tailor-make a custom solution that works for you.

Disclaimer: Aurken Bilbao is Founder of ZenRows.

Previously published at Hacker Noon

Frequently Asked Questions about hackernoon web scraping