Amazon Web Crawler

Amazon Kendra releases Web Crawler to enable web site …

Posted On: Jul 7, 2021

Amazon Kendra is an intelligent search service powered by machine learning, enabling organizations to provide relevant information to customers and employees, when they need it. Starting today, AWS customers can use the Amazon Kendra web crawler to index and search webpages.

Critical information can be scattered across multiple data sources in an enterprise, including internal and external websites. Amazon Kendra customers can now use the Kendra web crawler to index documents made available on websites (HTML, PDF, MS Word, MS PowerPoint, and Plain Text) and search for information across this content using Kendra Intelligent Search. Organizations can provide relevant search results to users seeking answers to their questions, for example, product specification detail that resides on a support website or company travel policy information that’s listed on an intranet webpage.

The Amazon Kendra web crawler is available in all AWS regions where Amazon Kendra is available. To learn more about the feature, visit the documentation page. To explore Amazon Kendra, visit the Amazon Kendra website.

Note: The Kendra web crawler honors website access rules, and customers using it will need to ensure they are authorized to index those webpages in order to return search results for end users.
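Websites commonly publish crawler access rules in a robots.txt file. If you run your own crawler rather than Kendra's, a minimal sketch of checking those rules before fetching a page might look like the following. It uses Python's standard-library `urllib.robotparser`. The rules, user agent, and URLs are made-up examples, and this is not how Kendra implements the check. A robots.txt check only tells you what a site asks crawlers to avoid; it does not by itself authorize you to index the content.

```python
from urllib.robotparser import RobotFileParser


def allowed_to_crawl(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt rules let user_agent fetch url."""
    parser = RobotFileParser()
    # parse() takes the file's lines directly; a live crawler would instead call
    # set_url("https://<site>/robots.txt") followed by read().
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical rules for an example intranet site.
RULES = """\
User-agent: *
Disallow: /private/
"""

print(allowed_to_crawl(RULES, "ExampleCrawler", "https://intranet.example.com/travel-policy"))   # True
print(allowed_to_crawl(RULES, "ExampleCrawler", "https://intranet.example.com/private/payroll"))  # False
```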

Amazon Product Data Crawler – Botsol

How it works

Here is a brief description of how to use this app to get all Amazon products data:

Launch the app.

Search for products on Amazon (in the Chrome window opened by the app).

Click the Start button.

Wait while the crawler scrapes all the product data shown in the search results.

When the bot is finished, you can export the scraped data to a CSV or Excel file. You can also manually stop the bot if needed.

What does it extract?

Business Fields

Product Name

ASIN

Price

Old Price

Reviews

Rating

Seller Name

Badge (e.g. “Best Seller”)

Image URL

Product URL

Do you need any other field? Please let us know.

If you want any additional feature in this app, please let us know, and we will add it for you at a very reasonable price.
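The app exports the fields listed above to a CSV or Excel file. As a rough illustration of what a CSV export of those fields involves, here is a minimal Python sketch that models one product as a dataclass and writes rows with the standard `csv` module. It is not Botsol's code: the class name, field types, and sample values (including the placeholder ASIN) are assumptions, and Excel output is not covered.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields
from typing import Iterable, Optional


@dataclass
class ProductRecord:
    """One output row; field names follow the list above, types are assumptions."""
    product_name: str
    asin: str
    price: Optional[str] = None
    old_price: Optional[str] = None
    reviews: Optional[int] = None
    rating: Optional[float] = None
    seller_name: Optional[str] = None
    badge: Optional[str] = None
    image_url: Optional[str] = None
    product_url: Optional[str] = None


def export_csv(records: Iterable[ProductRecord], out) -> None:
    """Write records as CSV with a header row; None fields become empty cells."""
    writer = csv.DictWriter(out, fieldnames=[f.name for f in fields(ProductRecord)])
    writer.writeheader()
    for record in records:
        writer.writerow(asdict(record))


buffer = io.StringIO()  # for a real file: open("products.csv", "w", newline="")
export_csv([ProductRecord(product_name="Example Kettle", asin="B000000000",
                          price="19.99", badge="Best Seller")], buffer)
print(buffer.getvalue())
```

Opening real files with `newline=""` matters because the `csv` module writes its own `\r\n` line endings.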


5 Major Challenges That Make Amazon Data Scraping Painful

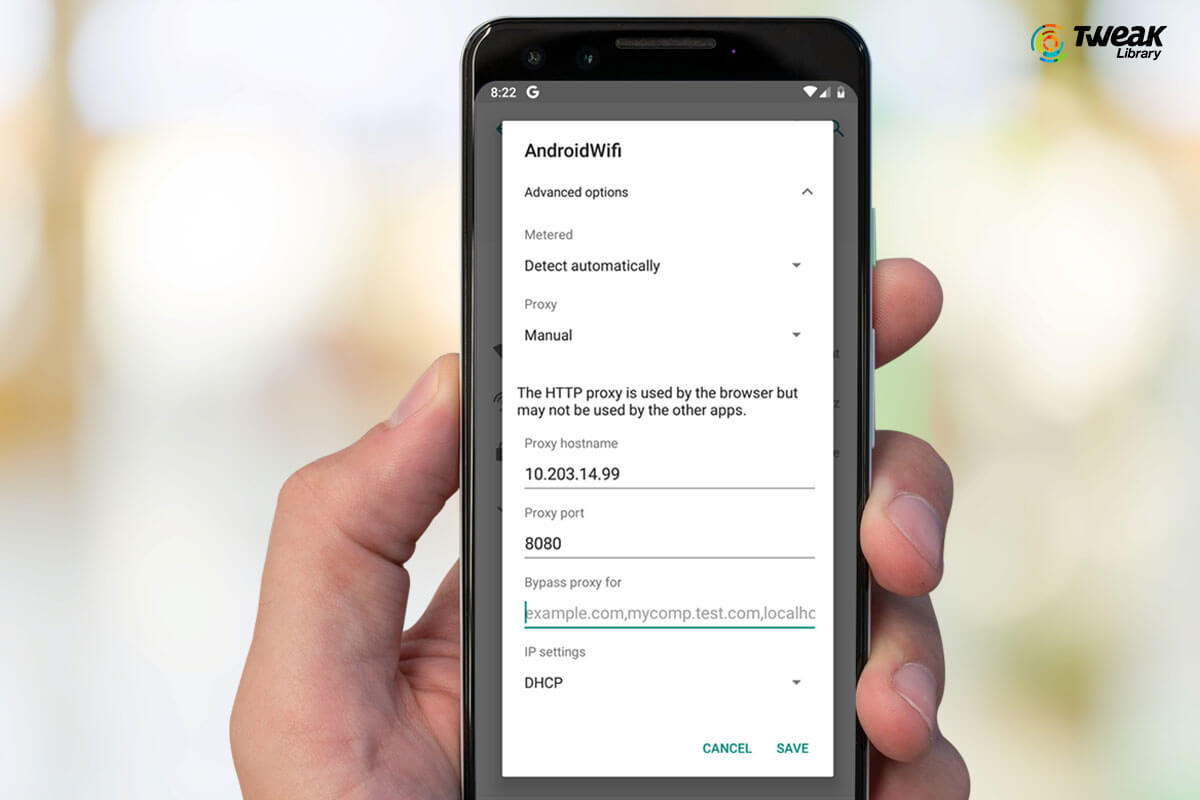

Amazon has been on the cutting edge of collecting, storing, and analyzing large amounts of data, be it customer data, product information, data about retailers, or even information on general market trends. Since Amazon is one of the largest e-commerce websites, many analysts and firms depend on the data extracted from it to derive actionable insights.

The growing e-commerce industry demands sophisticated analytical techniques to predict market trends, study customer temperament, or even gain a competitive edge over the myriad of players in this sector. To augment the strength of these analytical techniques, you need high-quality, reliable data. This data is called alternative data and can be derived from multiple sources. Some of the most prominent sources of alternative data in the e-commerce industry are customer reviews, product information, and even geographical data. E-commerce websites are a great source for many of these data elements.

It is no news that Amazon has been at the forefront of the e-commerce industry for quite some time now. Retailers fight tooth and nail to scrape data from Amazon. However, Amazon data scraping is not easy! Let us go through a few issues you may face while scraping data from Amazon.

Why is Amazon Data Scraping Challenging?

Before you start Amazon data scraping, you should know that the website discourages scraping in its policy and page structure. Due to its vested interest in protecting its data, Amazon has basic anti-scraping measures in place. These might stop your scraper from extracting all the information you need. Besides that, the page structure may differ from product to product, which can break your scraper's code and logic. Worse, you might not foresee this issue at all, and you may also run into network errors and unknown responses. Furthermore, CAPTCHAs and IP (Internet Protocol) blocks might be a regular roadblock.
You will also need a database, and not having one might become a huge issue! You will also need to handle exceptions while writing the algorithm for your scraper. This comes in handy when dealing with complex page structures, unconventional (non-ASCII) characters, and other issues like funny URLs and huge memory requirements. Let us talk about a few of these issues in detail. We shall also cover how to solve them. Hopefully, this will help you scrape data from Amazon successfully.

1. Amazon can detect bots and block their IPs

Since Amazon prevents web scraping on its pages, it can easily detect whether an action is being executed by a scraper bot or by a person using a browser. Many of these patterns are identified by closely monitoring the behavior of the browsing agent. For example, if your URLs change only in a query parameter at regular intervals, that is a clear indication of a scraper running through the pages. Amazon then uses CAPTCHAs and IP bans to block such bots.

While this step is necessary to protect the privacy and integrity of the information, you might still need to extract some data from Amazon's web pages. Here are some workarounds:

Rotate the IPs through different proxy servers if you need to. You can also deploy a consumer-grade VPN service with IP rotation.

Add random time gaps and pauses in your scraper code to break the regularity of page requests.

Strip the query parameters from the URLs to remove identifiers linking requests together.

Change the scraper's headers to make it look like the requests are coming from a browser and not a piece of code.

2. A lot of product pages on Amazon have varying page structures

If you have ever attempted to scrape product descriptions and other data from Amazon, you might have run into a lot of unknown response errors and exceptions. This is because most scrapers are designed and customized for a particular page structure.
Such a scraper follows a particular page structure, extracts its HTML, and then collects the relevant data. However, if the structure of the page changes, the scraper might fail unless it is designed to handle exceptions. Many products on Amazon have pages whose attributes differ from a standard template. This is often done to cater to different types of products that have different key attributes and features that need to be highlighted.

To address these inconsistencies, write your code to handle exceptions and make it resilient. You can do this by including try-catch blocks that ensure the code does not fail at the first network error or time-out. Since you will be scraping particular attributes of a product, you can also design the scraper to look for each attribute using techniques like string matching after extracting the complete HTML of the target page.

3. Your scraper might not be efficient enough!

Have you ever had a scraper run for hours just to collect a few hundred thousand rows? This might be because you haven't taken care of the efficiency and speed of the algorithm. You can do some basic math while designing it. Let us see what you can do to solve this problem!

You will always know the number of products or sellers you need to extract information about. Using this number and your time budget, you can roughly calculate the number of requests you need to send every second to finish your data scraping exercise on time. Once you compute this, your aim is to design your scraper to meet that rate! Single-threaded, network-blocking operations are unlikely to keep up, so you will probably want to create a multi-threaded scraper. This lets the scraper keep many requests in flight at once instead of sitting idle while it waits on the network.
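The basic math and the design advice in sections 2 and 3 can be sketched in Python as follows, under a few assumptions. `required_rate` and `workers_needed` turn a page count and a time budget into a request rate and a thread count (the latter via Little's law: requests in flight equal request rate times seconds per request). `fetch_with_retries` is the try/except resilience from section 2, `extract_first` is a simple string-matching parser that falls back to a second pattern when the layout differs, and a `ThreadPoolExecutor` runs the workers concurrently. All function names, regex patterns, and the stand-in `fake_fetch` are hypothetical; a real scraper would pass in an HTTP fetch function and should keep its request rate within what the target site permits.

```python
import math
import re
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Optional


def required_rate(num_pages: int, hours: float) -> float:
    """Requests per second needed to fetch num_pages within the time budget."""
    return num_pages / (hours * 3600)


def workers_needed(rate: float, seconds_per_request: float) -> int:
    """Little's law: requests in flight = request rate x seconds per request."""
    return math.ceil(rate * seconds_per_request)


def fetch_with_retries(fetch: Callable[[str], str], url: str,
                       attempts: int = 3, backoff: float = 0.2) -> Optional[str]:
    """Call fetch(url), retrying network errors instead of crashing the whole run."""
    for attempt in range(attempts):
        try:
            return fetch(url)
        except OSError:  # URLError, TimeoutError and ConnectionError subclass OSError
            if attempt < attempts - 1:
                time.sleep(backoff * 2 ** attempt)  # exponential back-off
    return None  # give up on this URL; the caller can log it and move on


def extract_first(html: str, patterns: List[str]) -> Optional[str]:
    """String matching with fallbacks: try each pattern until one matches."""
    for pattern in patterns:
        match = re.search(pattern, html, re.S)
        if match:
            return match.group(1).strip()
    return None


# Hypothetical title patterns for two different page layouts.
TITLE_PATTERNS = [r'<span id="title">(.*?)</span>', r"<h1[^>]*>(.*?)</h1>"]


def scrape_titles(urls: List[str], fetch: Callable[[str], str],
                  workers: int) -> List[Optional[str]]:
    """Fetch and parse pages concurrently; pool.map keeps results in input order."""
    def worker(url: str) -> Optional[str]:
        html = fetch_with_retries(fetch, url)
        return extract_first(html, TITLE_PATTERNS) if html else None

    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(worker, urls))


# Back-of-the-envelope sizing: 100,000 pages in 10 hours, ~3 s per request.
rate = required_rate(100_000, 10)  # about 2.8 requests per second
print(workers_needed(rate, 3.0))   # 9

# Offline demo with a stand-in fetch function instead of real HTTP.
PAGES = {"a": '<span id="title"> Kettle </span>', "b": "<h1 class='x'>Toaster</h1>"}


def fake_fetch(url: str) -> str:
    if url not in PAGES:
        raise ConnectionError("simulated network failure")
    return PAGES[url]


print(scrape_titles(["a", "b", "c"], fake_fetch, workers=3))  # ['Kettle', 'Toaster', None]
```

Threads suit this workload because each worker spends most of its time waiting on the network, so Python's GIL is rarely the bottleneck; CPU-heavy parsing would call for processes instead.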
A multi-threaded scraper keeps working on one response or another, even when each request takes several seconds to complete. This might give you close to 100x the speed of your original single-threaded scraper. You will need an efficient scraper to crawl through Amazon, as there is a lot of information on the site!

4. You might need a cloud platform and other computational aids!

A high-performance machine will be able to speed the process up for you, and you avoid burning the resources of your local system! To scrape a website like Amazon, you might need high-capacity memory resources, as well as fast network pipes and efficient cores. A cloud-based platform should be able to provide these resources.

You do not want to run into memory issues! If you store big lists or dictionaries in memory, you put an extra burden on your machine's resources. We advise you to transfer your data to permanent storage as soon as possible. This will also help you speed the process up. There is an array of cloud services that you can use for reasonable prices, and you can sign up for one in a few simple steps. Using one will also help you avoid unnecessary system crashes and delays in the process.

5. Use a database for recording information

If you scrape data from Amazon or any other retail website, you will be collecting high volumes of data. Since the scraping process consumes power and time, we advise you to keep storing this data in a database as you go. Store each product or seller record that you crawl as a row in a database table. You can also use databases to perform operations like basic querying, exporting, and deduping on your data. This makes storing, analyzing, and reusing your data convenient and faster!

Summary

Many businesses and analysts, especially in the retail and e-commerce sector, need Amazon data scraping.
They use this data to compare prices, study market trends across demographics, forecast product sales, review customer sentiment, or even estimate competition rates. This can be a repetitive exercise, and if you create your own scraper, it can be a time-consuming, challenging task. However, Datahut can scrape e-commerce product information for you from a wide range of web sources and provide this data in readable file formats like CSV or in other database locations, as per client needs. You can then use this data for all your subsequent analyses. This will help you save resources and time. We advise you to conduct thorough research on the various data scraping services in the market. You may then choose the service that best suits your requirements.

Wish to know more about how Datahut can help with your e-commerce data scraping needs? Contact us today.
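Sections 4 and 5 of the article recommend moving scraped data to permanent storage as soon as possible and keeping each product or seller as a row in a database table, where querying and deduping are easy. A minimal sketch of that idea with Python's built-in `sqlite3` module: each batch is committed as soon as it is scraped, and a primary key on the product ID makes a re-crawled product replace its old row instead of duplicating it. The table layout and sample rows are hypothetical, and SQLite is chosen only because it needs no server; a larger pipeline might use a hosted database instead.

```python
import sqlite3
from typing import Iterable, Tuple


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) the database; pass a file path to persist to disk."""
    conn = sqlite3.connect(path)
    # One row per product; the PRIMARY KEY on asin lets the database dedupe for us.
    conn.execute(
        """CREATE TABLE IF NOT EXISTS products (
               asin  TEXT PRIMARY KEY,
               name  TEXT,
               price REAL
           )"""
    )
    return conn


def save_batch(conn: sqlite3.Connection, rows: Iterable[Tuple[str, str, float]]) -> None:
    """Persist a batch right away instead of holding a growing list in memory."""
    with conn:  # commits on success, rolls back if an exception is raised
        conn.executemany(
            "INSERT OR REPLACE INTO products (asin, name, price) VALUES (?, ?, ?)", rows
        )


conn = open_store()
save_batch(conn, [("A1", "Kettle", 19.99), ("A2", "Toaster", 24.5)])
save_batch(conn, [("A1", "Kettle", 17.99)])  # re-crawled product: replaced, not duplicated
print(conn.execute("SELECT COUNT(*) FROM products").fetchone()[0])                 # 2
print(conn.execute("SELECT price FROM products WHERE asin = 'A1'").fetchone()[0])  # 17.99
```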

Frequently Asked Questions about Amazon Web Crawler

What is an Amazon crawler?

An Amazon crawler is a simple, easy-to-use app that extracts product information from Amazon.com. Just search for anything on Amazon, and it extracts information about all the products that appear in the search results.

Is it OK to scrape Amazon?

Before you start Amazon data scraping, you should know that the website discourages scraping in its policy and page-structure. Due to its vested interest in protecting its data, Amazon has basic anti-scraping measures put in place. This might stop your scraper from extracting all the information you need. (Oct 27, 2020)

Does AWS allow web crawling?

Starting today, AWS customers can use the Amazon Kendra web crawler to index and search webpages. Critical information can be scattered across multiple data sources in an enterprise, including internal and external websites. … Note: The Kendra web crawler honors access rules in robots. (Jul 7, 2021)