Web Scraping Using Node Js

Web Scraping Using Node JS in JavaScript – Analytics Vidhya

This article was published as a part of the Data Science Blogathon.


INTRODUCTION

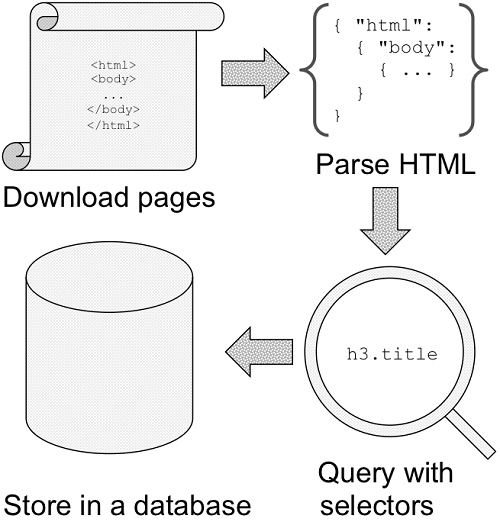

Web scraping, also known as web data extraction or web harvesting, is the practice of gathering information from across the web. Data is like oxygen for startups and freelancers who want to start a business or a project in any domain. Suppose you want to find the price of a product on an eCommerce website. That is easy to do once, but now suppose you have to repeat the exercise for thousands of products across multiple eCommerce websites. Doing it manually is not a good option at all.

Get to know the Tool

JavaScript is a popular programming language that runs in every modern web browser.

Node.js is a JavaScript runtime: it provides an environment for running JavaScript outside the browser, along with a set of useful libraries.

In short, Node.js adds functionality and features to JavaScript through its libraries and makes it more powerful.

Hands-On-Session

Let’s understand web scraping using Node.js with an example. Suppose you want to analyze the price fluctuations of some products on an eCommerce website. You would list all the possible causes and cross-check them against each product. Similarly, when you want to scrape data, you list the parent HTML tags and check their respective child HTML tags, repeating this until you have extracted the data.

Steps Required for Web Scraping

Creating the file

Install & Call the required libraries

Select the Website & Data needed to Scrape

Set the URL & Check the Response Code

Inspect & Find the Proper HTML tags

Include the HTML tags in our Code

Cross-check the Scraped Data

I’m using Visual Studio to run this task.

Step 1- Creating the file

To create the project’s package.json file, I run npm init and provide a few details, as shown in the screenshot below.

Create

Step 2- Install & Call the required libraries

Run the commands below to install the required libraries.

Install Libraries

Once the libraries are properly installed, you will see messages like these:

logs after packages get installed

Call the required libraries:

Call the library

Step 3- Select the Website & Data needed to Scrape.

I picked this website and want to scrape the gold rates along with their dates.

Data we want to scrape

Step 4- Set the URL & Check the Response Code

The Node.js code to pass the URL and check the response code looks like this:

Passing URL & Getting Response Code
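As a rough sketch of this step, assuming Node.js 18 or later (where fetch is built in) rather than whichever libraries were installed in Step 2, the idea is to request the page and continue only when the status code is 200. The helper name fetchPage, the stand-in fakeFetch, and the example.com URL are hypothetical and exist only for this illustration.

```javascript
// Hypothetical helper (not from the article): fetch a page and
// fail loudly unless the server answers with status code 200.
async function fetchPage(url, fetchImpl = globalThis.fetch) {
  const response = await fetchImpl(url);
  if (response.status !== 200) {
    throw new Error(`Unexpected status code ${response.status} for ${url}`);
  }
  // The body is the raw HTML that the later steps will parse.
  return response.text();
}

// Offline demonstration with a stand-in for fetch, so nothing is downloaded.
const fakeFetch = async () => ({
  status: 200,
  text: async () => "<table></table>",
});
const pagePromise = fetchPage("https://example.com/rates", fakeFetch);
pagePromise.then((html) => console.log(html));
```

In real use you would call fetchPage(url) with the address of the page you picked in Step 3 and omit the second argument.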

Step 5- Inspect & Find the Proper HTML tags

It’s quite easy to find the HTML tags that hold your data.

To see the HTML tags, right-click on the page and select the Inspect option.

Inspecting the HTML Tags

Select the proper HTML tags:

The table has three columns. The header row is marked “HeaderRow”, and all the column names sit in “th” (table header) tags.

For each table row (“tr”), the data resides in the “DataRow” HTML tag.

So I need to get everything under “HeaderRow”, find all the “th” tags within it, and finally iterate through the “DataRow” tags to get all the data within them.
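To make the header-then-rows idea concrete, here is a minimal sketch that pairs column names with the cells of each data row. It works on plain arrays standing in for text already pulled out of the “th” and “DataRow” elements; the helper name rowsToRecords and all sample values are made up for illustration.

```javascript
// Hypothetical helper: combine header names with the cells of each row.
function rowsToRecords(headers, rows) {
  return rows.map((cells) => {
    const record = {};
    headers.forEach((name, i) => {
      record[name] = cells[i];
    });
    return record;
  });
}

// Made-up sample values standing in for scraped header and row text.
const headers = ["Date", "Rate A", "Rate B"];
const rows = [
  ["01 Jan", "100", "110"],
  ["02 Jan", "101", "111"],
];

const records = rowsToRecords(headers, rows);
console.log(records);
```

Keeping this pairing separate from the HTML parsing means it can be checked without touching the website.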

Step 6- Include the HTML tags in our Code

After including the HTML tags, our code looks like this:

Code Snippet

Step 7- Cross-check the Scraped Data

Print the data to verify it. The code for this looks like:

Our Scraped Data

If you go to a more granular level of HTML Tags & iterate them accordingly, you will get more precise data.

That’s all about web scraping & how to get rare quality data like gold.

Conclusion

I tried to explain Web Scraping using Node JS in a precise way. Hopefully, this will help you.

Find the full code on Vgyaan’s GitHub repo.

If you have any questions about the code or web scraping in general, reach out to me on Vgyaan’s LinkedIn.

We will meet again with something new.

Till then,

Happy Coding..!

4 Tools for Web Scraping in Node.js – Twilio

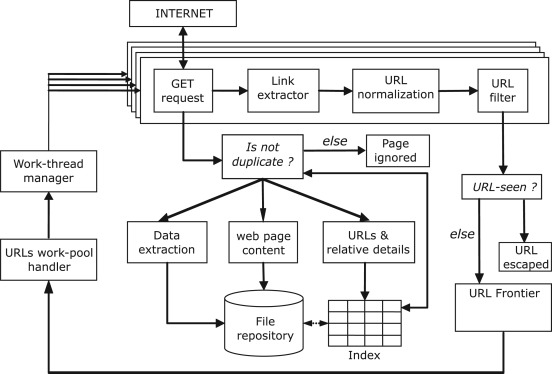

Sometimes the data you need is available online, but not through a dedicated REST API. Luckily for JavaScript developers, there are a variety of tools available in Node.js for scraping and parsing data directly from websites to use in your projects and applications.

Let’s walk through 4 of these libraries to see how they work and how they compare to each other.

Make sure you have up-to-date versions of Node.js (at least 12.0.0) and npm installed on your machine, then initialize an npm project in the directory where you want your code to live.

For some of these applications, we’ll be using the Got library for making HTTP requests, so install it with npm in the same directory.

Let’s try finding all of the links to unique MIDI files on this web page from the Video Game Music Archive with a bunch of Nintendo music as the example problem we want to solve for each of these libraries.

Tips and tricks for web scraping

Before moving onto specific tools, there are some common themes that are going to be useful no matter which method you decide to use.

Before writing code to parse the content you want, you typically will need to take a look at the HTML that’s rendered by the browser. Every web page is different, and sometimes getting the right data out of them requires a bit of creativity, pattern recognition, and experimentation.

There are helpful developer tools available to you in most modern browsers. If you right-click on the element you’re interested in, you can inspect the HTML behind that element to get more insight.

You will also frequently need to filter for specific content. This is often done using CSS selectors, which you will see throughout the code examples in this tutorial, to gather HTML elements that fit specific criteria. Regular expressions are also very useful in many web scraping situations. On top of that, if you need a little more granularity, you can write functions to filter through the content of elements, such as this one for determining whether a hyperlink tag refers to a MIDI file:

const isMidi = (link) => {
  // Return false if there is no href attribute.
  if (typeof link.href === 'undefined') { return false; }
  return link.href.includes('.mid');
};
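To see how such a filter behaves, here is a small sketch that applies it to plain objects instead of real DOM elements. The sample links are made up, and the only assumption is that each link exposes an href property the way an anchor element would.

```javascript
// Filter function for MIDI links, applied here to plain objects.
const isMidi = (link) => {
  // Return false if there is no href attribute.
  if (typeof link.href === 'undefined') { return false; }
  return link.href.includes('.mid');
};

// Made-up stand-ins for anchor elements.
const sampleLinks = [
  { href: '/music/song.mid' },
  { href: '/about.html' },
  {}, // an anchor without an href
];

const midiLinks = sampleLinks.filter(isMidi).map((link) => link.href);
console.log(midiLinks);
```

Only the first entry survives the filter, because the second has no '.mid' in its href and the third has no href at all.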

It is also good to keep in mind that many websites prohibit web scraping in their Terms of Service, so always remember to double check this beforehand. With that, let’s dive into the specifics!

jsdom

jsdom is a pure-JavaScript implementation of many web standards for Node.js, and is a great tool for testing and scraping web applications. Install it with npm from your terminal.

The following code is all you need to gather all of the links to MIDI files on the Video Game Music Archive page referenced earlier:

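const got = require('got');
const jsdom = require('jsdom');
const { JSDOM } = jsdom;

// Set this to the URL of the Video Game Music Archive page.
const vgmUrl = '';

const isMidi = (link) => {
  // Return false if there is no href attribute.
  if (typeof link.href === 'undefined') { return false; }
  return link.href.includes('.mid');
};

const noParens = (link) => {
  // Regular expression to determine if the text has parentheses.
  const parensRegex = /^((?!\().)*$/;
  return parensRegex.test(link.textContent);
};

(async () => {
  const response = await got(vgmUrl);
  const dom = new JSDOM(response.body);

  // Create an Array out of the HTML Elements for filtering using spread syntax.
  const nodeList = [...dom.window.document.querySelectorAll('a')];

  nodeList.filter(isMidi).filter(noParens).forEach(link => {
    console.log(link.href);
  });
})();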

This uses a very simple query selector, a, to access all hyperlinks on the page, along with a few functions to filter through this content to make sure we’re only getting the MIDI files we want. The noParens() filter function uses a regular expression to leave out all of the MIDI files that contain parentheses, which means they are just alternate versions of the same song.

Save that code to a file and run it with the node command in your terminal.
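The regular expression used by noParens() can also be checked on its own. The sketch below runs it against a few made-up link titles; the negative lookahead rejects any string that contains an opening parenthesis.

```javascript
// Matches only strings with no "(" anywhere in them.
const parensRegex = /^((?!\().)*$/;

// Made-up titles standing in for the text of each link.
const titles = ["Title Theme", "Title Theme (2)", "Overworld(remix)", ""];

const kept = titles.filter((title) => parensRegex.test(title));
console.log(kept);
```

Note that an empty string also passes the test, since it contains no parenthesis, so links without any text are not removed by this filter.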

If you want a more in-depth walkthrough on this library, check out this other tutorial I wrote on using jsdom.

Cheerio

Cheerio is a library that is similar to jsdom but was designed to be more lightweight, making it much faster. It implements a subset of core jQuery, providing an API that many JavaScript developers are familiar with.

Install it with the following command:

npm install cheerio@1.0.0-rc.3

The code we need to accomplish this same task is very similar:

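const cheerio = require('cheerio');
const got = require('got');

// Set this to the URL of the Video Game Music Archive page.
const vgmUrl = '';

const isMidi = (i, link) => {
  // Return false if there is no href attribute.
  if (typeof link.attribs.href === 'undefined') { return false; }
  return link.attribs.href.includes('.mid');
};

const noParens = (i, link) => {
  // Regular expression to determine if the text has parentheses.
  const parensRegex = /^((?!\().)*$/;
  return parensRegex.test(link.children[0].data);
};

(async () => {
  const response = await got(vgmUrl);
  const $ = cheerio.load(response.body);

  $('a').filter(isMidi).filter(noParens).each((i, link) => {
    const href = link.attribs.href;
    console.log(href);
  });
})();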

Here you can see that using functions to filter through content is built into Cheerio’s API, so we don’t need any extra code for converting the collection of elements to an array. Replace the code in your file with this new code, and run it again. The execution should be noticeably quicker because Cheerio is a less bulky library.

If you want a more in-depth walkthrough, check out this other tutorial I wrote on using Cheerio.

Puppeteer

Puppeteer is much different than the previous two in that it is primarily a library for headless browser scripting. Puppeteer provides a high-level API to control Chrome or Chromium over the DevTools protocol. It’s much more versatile because you can write code to interact with and manipulate web applications rather than just reading static data.

npm install puppeteer@5.5.0

Web scraping with Puppeteer is much different than the previous two tools because rather than writing code to grab raw HTML from a URL and then feeding it to an object, you’re writing code that is going to run in the context of a browser processing the HTML of a given URL and building a real document object model out of it.

The following code snippet instructs Puppeteer’s browser to go to the URL we want and access all of the same hyperlink elements that we parsed for previously:

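const puppeteer = require('puppeteer');

// Set this to the URL of the Video Game Music Archive page.
const vgmUrl = '';

(async () => {
  const browser = await puppeteer.launch();
  const page = await browser.newPage();

  await page.goto(vgmUrl);

  const links = await page.$$eval('a', elements => elements.filter(element => {
    const parensRegex = /^((?!\().)*$/;
    return element.href.includes('.mid') && parensRegex.test(element.textContent);
  }).map(element => element.href));

  links.forEach(link => console.log(link));

  await browser.close();
})();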

Notice that we are still writing some logic to filter through the links on the page, but instead of declaring more filter functions, we’re just doing it inline. There is some boilerplate code involved for telling the browser what to do, but we don’t have to use another Node module for making a request to the website we’re trying to scrape. Overall it’s a lot slower if you’re doing simple things like this, but Puppeteer is very useful if you are dealing with pages that aren’t static.

For a more thorough guide on how to use more of Puppeteer’s features to interact with dynamic web applications, I wrote another tutorial that goes deeper into working with Puppeteer.

Playwright

Playwright is another library for headless browser scripting, written by the same team that built Puppeteer. Its API and functionality are nearly identical to Puppeteer’s, but it was designed to be cross-browser and works with Firefox and WebKit as well as Chrome/Chromium.

npm install playwright@0.13.0

The code for doing this task using Playwright is largely the same, with the exception that we need to explicitly declare which browser we’re using:

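const playwright = require('playwright');
// The rest matches the Puppeteer example, except that the browser is launched explicitly, e.g. with playwright.chromium.launch().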

This code should do the same thing as the code in the Puppeteer section and should behave similarly. The advantage to using Playwright is that it is more versatile as it works with more than just one type of browser. Try running this code using the other browsers and seeing how it affects the behavior of your script.

Like the other libraries, I also wrote another tutorial that goes deeper into working with Playwright if you want a longer walkthrough.

The vast expanse of the World Wide Web

Now that you can programmatically grab things from web pages, you have access to a huge source of data for whatever your projects need. One thing to keep in mind is that changes to a web page’s HTML might break your code, so make sure to keep everything up to date if you’re building applications that rely on scraping.
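One way to notice such breakage early is to validate what a scrape returns before using it. The sketch below is only an illustration of that idea: the helper name assertScraped and the sample records are hypothetical, and the check is deliberately simple (a non-empty array whose records have the expected non-empty fields).

```javascript
// Hypothetical guard: throw if a scrape produced no usable records.
function assertScraped(records, requiredKeys) {
  if (!Array.isArray(records) || records.length === 0) {
    throw new Error("Scrape returned no records; the page structure may have changed");
  }
  for (const record of records) {
    for (const key of requiredKeys) {
      if (!record[key]) {
        throw new Error(`Record is missing "${key}": ${JSON.stringify(record)}`);
      }
    }
  }
  return records;
}

// A healthy result passes through unchanged.
const good = assertScraped([{ href: "/music/song.mid" }], ["href"]);
console.log(good.length);

// An empty result, as you might get after a site redesign, raises an error.
try {
  assertScraped([], ["href"]);
} catch (err) {
  console.log(err.message);
}
```

Failing loudly at this point is usually easier to debug than an application that silently runs on empty data.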

I’m looking forward to seeing what you build. Feel free to reach out and share your experiences or ask any questions.

Twitter: @Sagnewshreds

Github: Sagnew

Twitch (streaming live code): Sagnewshreds

How to Scrape Websites with Node.js and Cheerio – freeCodeCamp

There might be times when a website has data you want to analyze but the site doesn’t expose an API for accessing those data.

To get the data, you’ll have to resort to web scraping.

In this article, I’ll go over how to scrape websites with Node.js and Cheerio.

Before we start, you should be aware that there are some legal and ethical issues you should consider before scraping a site. It’s your responsibility to make sure that it’s okay to scrape a site before doing so.

The sites used in the examples throughout this article all allow scraping, so feel free to follow along.

Prerequisites

Here are some things you’ll need for this tutorial:

You need to have Node.js installed. If you don’t have Node, just make sure you download it for your system from the Node.js downloads page.

You need to have a text editor like VSCode or Atom installed on your machine.

You should have at least a basic understanding of JavaScript, Node.js, and the Document Object Model (DOM). But you can still follow along even if you are a total beginner with these technologies. Feel free to ask questions on the freeCodeCamp forum if you get stuck.

What is Web Scraping?

Web scraping is the process of extracting data from a web page. Though you can do web scraping manually, the term usually refers to automated data extraction from websites – Wikipedia.

What is Cheerio?

Cheerio is a tool for parsing HTML and XML in Node.js, and is very popular with over 23k stars on GitHub.

It is fast, flexible, and easy to use. Since it implements a subset of jQuery, it’s easy to start using Cheerio if you’re already familiar with jQuery.

According to the documentation, Cheerio parses markup and provides an API for manipulating the resulting data structure but does not interpret the result like a web browser.

The major difference between cheerio and a web browser is that cheerio does not produce visual rendering, load CSS, load external resources or execute JavaScript. It simply parses markup and provides an API for manipulating the resulting data structure. That explains why it is also very fast – cheerio documentation.

If you want to use cheerio for scraping a web page, you need to first fetch the markup using packages like axios or node-fetch among others.

How to Scrape a Web Page in Node Using Cheerio

In this section, you will learn how to scrape a web page using cheerio. It is important to point out that before scraping a website, make sure you have permission to do so – or you might find yourself violating terms of service, breaching copyright, or violating privacy.

In this example, we will scrape the ISO 3166-1 alpha-3 codes for all countries and other jurisdictions as listed on this Wikipedia page. It is under the Current codes section of the ISO 3166-1 alpha-3 page.

This is what the list of countries/jurisdictions and their corresponding codes look like:

You can follow the steps below to scrape the data in the above list.

Step 1 – Create a Working Directory

In this step, you will create a directory for your project by running the command below on the terminal. The command will create a directory called learn-cheerio. You can give it a different name if you wish.

mkdir learn-cheerio

You should be able to see a folder named learn-cheerio created after successfully running the above command.

In the next step, you will open the directory you have just created in your favorite text editor and initialize the project.

Step 2 – Initialize the Project

In this step, you will navigate to your project directory and initialize the project. Open the directory you created in the previous step in your favorite text editor and initialize the project by running the command below.

npm init -y

Successfully running the above command will create a package.json file at the root of your project directory.

In the next step, you will install project dependencies.

Step 3 – Install Dependencies

In this step, you will install project dependencies by running the command below. This will take a couple of minutes, so just be patient.

npm i axios cheerio pretty

Successfully running the above command will register three dependencies in the package.json file under the dependencies field. The first dependency is axios, the second is cheerio, and the third is pretty.

axios is a very popular HTTP client which works in Node and in the browser. We need it because cheerio is only a markup parser and does not fetch pages itself.

For cheerio to parse the markup and scrape the data you need, we need to use axios for fetching the markup from the website. You can use another HTTP client to fetch the markup if you wish. It doesn’t necessarily have to be axios.

pretty is an npm package for beautifying markup so that it is readable when printed on the terminal.

In the next section, you will inspect the markup you will scrape data from.

Step 4 – Inspect the Web Page You Want to Scrape

Before you scrape data from a web page, it is very important to understand the HTML structure of the page.

In this step, you will inspect the HTML structure of the web page you are going to scrape data from.

Navigate to the ISO 3166-1 alpha-3 codes page on Wikipedia. Under the “Current codes” section, there is a list of countries and their corresponding codes. You can open DevTools by pressing CTRL + SHIFT + I in Chrome, or by right-clicking and selecting the “Inspect” option.

This is what the list looks like for me in Chrome DevTools:

In the next section, you will write code for scraping the web page.

Step 5 – Write the Code to Scrape the Data

In this section, you will write code for scraping the data we are interested in. Start by creating an empty JavaScript file at the root of the project directory, for example with the touch command.

Like any other Node package, you must first require axios, cheerio, and pretty before you start using them. You can do so by adding the code below at the top of the file you have just created.

const axios = require("axios");
const cheerio = require("cheerio");
const pretty = require("pretty");

Before we write code for scraping our data, we need to learn the basics of cheerio. We’ll parse the markup below and try manipulating the resulting data structure. This will help us learn cheerio syntax and its most common methods.

The markup below is the ul element containing our li elements.

const markup = `
<ul class="fruits">
  <li class="fruits__mango">Mango</li>
  <li class="fruits__apple">Apple</li>
</ul>
`;

Add the above variable declaration to your file.

How to Load Markup in Cheerio

You can load markup in cheerio using the cheerio.load method. The method takes the markup as an argument. It also takes two more optional arguments. You can read more about them in the documentation if you are interested.

Below, we are passing the first and the only required argument and storing the returned value in the $ variable. We are using the $ variable because of cheerio’s similarity to jQuery. You can use a different variable name if you wish.

Add the code below to your file:

const $ = cheerio.load(markup);
console.log(pretty($.html()));

If you now execute the code in your file with the node command on the terminal, you should be able to see the markup on the terminal. This is what I see on my terminal:

How to Select an Element in Cheerio

Cheerio supports most of the common CSS selectors such as the class, id, and element selectors among others. In the code below, we are selecting the element with class fruits__mango and then logging the selected element to the console. Add the code below to your file.

const mango = $(".fruits__mango");
console.log(mango.html()); // Mango

The above lines of code will log the text Mango on the terminal if you execute your file with the node command.

How to Get the Attribute of an Element in Cheerio

You can also select an element and get a specific attribute such as the class, id, or all the attributes and their corresponding values.

const apple = $(".fruits__apple");
console.log(apple.attr("class")); // fruits__apple

The above code will log fruits__apple on the terminal. fruits__apple is the class of the selected element.

How to Loop Through a List of Elements in Cheerio

Cheerio provides the .each method for looping through several selected elements.

Below, we are selecting all the li elements and looping through them using the .each method. We log the text content of each list item on the terminal.

Add the code below to your file.

const listItems = $("li");
console.log(listItems.length); // 2
listItems.each(function (idx, el) {
  console.log($(el).text());
});
// Mango
// Apple

The above code will log 2, which is the length of the list items, and the text Mango and Apple on the terminal after executing the code in your file.

How to Append or Prepend an Element to a Markup in Cheerio

Cheerio provides the append and prepend methods for adding an element to the markup.

The append method will add the element passed as an argument after the last child of the selected element. On the other hand, prepend will add the passed element before the first child of the selected element.

const ul = $("ul");
// The list item text below is illustrative.
ul.append("<li>Banana</li>");
ul.prepend("<li>Pineapple</li>");

After appending and prepending elements to the markup, this is what I see when I log $.html() on the terminal:

Those are the basics of cheerio that can get you started with web scraping.

To scrape the data we described at the beginning of this article from Wikipedia, copy and paste the code below into your file:

// Loading the dependencies. We don't need pretty
// because we shall not log html to the terminal
const axios = require("axios");
const cheerio = require("cheerio");
const fs = require("fs");

// URL of the page we want to scrape
// (set this to the address of the Wikipedia ISO 3166-1 alpha-3 page)
const url = "";

// Async function which scrapes the data
async function scrapeData() {
  try {
    // Fetch HTML of the page we want to scrape
    const { data } = await axios.get(url);
    // Load HTML we fetched in the previous line
    const $ = cheerio.load(data);
    // Select all the list items in plainlist class
    const listItems = $(".plainlist ul li");
    // Stores data for all countries
    const countries = [];
    // Use .each method to loop through the li we selected
    listItems.each((idx, el) => {
      // Object holding data for each country/jurisdiction
      const country = { name: "", iso3: "" };
      // Select the text content of a and span elements
      // Store the textcontent in the above object
      country.name = $(el).children("a").text();
      country.iso3 = $(el).children("span").text();
      // Populate countries array with country data
      countries.push(country);
    });
    // Logs countries array to the console
    console.dir(countries);
    // Write countries array to a JSON file
    // (the file name here is chosen for illustration)
    fs.writeFile("countries.json", JSON.stringify(countries, null, 2), (err) => {
      if (err) {
        console.error(err);
        return;
      }
      console.log("Successfully written data to file");
    });
  } catch (err) {
    console.error(err);
  }
}

// Invoke the above function
scrapeData();

Do you understand what is happening by reading the code? If not, I’ll go into some detail now. I have also made comments on each line of code to help you understand.

In the above code, we require all the dependencies at the top of the file and then declare the scrapeData function. Inside the function, the markup is fetched using axios. The fetched HTML of the page we need to scrape is then loaded in cheerio.

The list of countries/jurisdictions and their corresponding iso3 codes are nested in a div element with a class of plainlist. The li elements are selected and then we loop through them using the .each method. The data for each country is scraped and stored in an array.

After running the code above with the node command, the scraped data is written to a JSON file and printed on the terminal. This is part of what I see on my terminal:
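The final write step can also be tried in isolation. The sketch below serializes two sample records in the same { name, iso3 } shape and reads them back; it uses the synchronous fs.writeFileSync instead of the callback-based fs.writeFile so the round trip fits in a few lines, and the file name and temp-directory location are assumptions made for this illustration.

```javascript
const fs = require("fs");
const os = require("os");
const path = require("path");

// Sample records in the same shape the scraper builds.
const countries = [
  { name: "Afghanistan", iso3: "AFG" },
  { name: "Albania", iso3: "ALB" },
];

// Hypothetical output location: a file in the system temp directory.
const outputPath = path.join(os.tmpdir(), "iso3-sample.json");

// null means no replacer; 2 indents nested values by two spaces.
fs.writeFileSync(outputPath, JSON.stringify(countries, null, 2));

// Read the file back to confirm the data round-trips.
const restored = JSON.parse(fs.readFileSync(outputPath, "utf8"));
console.log(restored.length, restored[0].iso3);
```

The third argument to JSON.stringify only affects readability of the file; the parsed data is the same with or without indentation.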

Conclusion

Thank you for reading this article and reaching the end! We have covered the basics of web scraping using cheerio. You can head over to the cheerio documentation if you want to dive deeper and fully understand how it works.

Feel free to ask questions on the freeCodeCamp forum if there is anything you don’t understand in this article.

Finally, remember to consider the ethical concerns as you learn web scraping.


Frequently Asked Questions about web scraping using node js

Is node good for web scraping?

Luckily for JavaScript developers, there are a variety of tools available in Node.js for scraping and parsing data directly from websites to use in your projects and applications. (Apr 29, 2020)

How do I scrape data from a website using node JS?

How to Scrape a Web Page in Node Using Cheerio: Step 1 – Create a Working Directory. Step 2 – Initialize the Project. Step 3 – Install Dependencies. Step 4 – Inspect the Web Page You Want to Scrape. Step 5 – Write the Code to Scrape the Data. (Jul 19, 2021)

Can you use JavaScript to web scrape?

Since some websites rely on JavaScript to load their content, using an HTTP-based tool like Axios may not yield the intended results. With Puppeteer, you can simulate the browser environment, execute JavaScript just like a browser does, and scrape dynamic content from websites. (Sep 27, 2020)